Claude Opus 4.6 vs GPT 5.3 Codex

TL;DR

GPT 5.3 Codex excels at fast execution, agentic coding, and end-to-end workflows, making it a strong choice for building and iterating on real systems quickly. Claude Opus 4.6 stands out in reasoning-heavy, structured, and long-context tasks, especially for data analysis, reporting, and document-driven work. The right choice depends on workflow needs, with Codex favoring speed and iteration, and Opus favoring depth, clarity, and consistency.

Introduction

GPT 5.3 Codex and Claude Opus 4.6 have both just been released, and they show how quickly AI models are moving toward longer, more practical workflows. Instead of focusing solely on answering questions or generating short code snippets, both models are designed to handle real-world tasks that involve planning, iteration, and working across larger contexts.

In this article, I compare GPT 5.3 Codex and Claude Opus 4.6 on how they perform in practice, using two common scenarios: building user interfaces and working with data.

Overview of GPT 5.3 Codex

GPT 5.3 Codex is the latest Codex model from OpenAI, built with a clear focus on execution-oriented developer workflows. Rather than stopping at code generation, the model is designed to handle longer tasks that involve planning, tool usage, and iterative progress across real systems.

A key aspect of GPT 5.3 Codex is its agentic design. It can work directly with terminals, files, and build tools, and continue operating across extended sessions while still allowing developers to guide and adjust the process without losing context. This makes it better suited for workflows where multiple steps and follow-up changes are expected.

Its performance reflects this focus. GPT 5.3 Codex achieves 56.8% on SWE-Bench Pro, 77.3% on Terminal-Bench 2.0, and 64.7% on OSWorld-Verified, which evaluates real computer-based task execution. It also runs about 25% faster than earlier Codex versions and tends to be more token-efficient, which improves responsiveness during longer sessions.

In practice, GPT 5.3 Codex fits well into full-stack development, large-scale debugging and refactoring, infrastructure work, and other scenarios where steady progress and execution reliability matter.

Overview of Claude Opus 4.6

Claude Opus 4.6 is the latest flagship model from Anthropic, positioned as its most capable model for reasoning-heavy work, structured outputs, and long-context tasks. While it continues to support coding and agentic workflows, its core focus is on clarity, consistency, and deep reasoning across complex information.

One of the most notable updates in Opus 4.6 is its long-context capability. The model supports a 200K token context window by default, with a 1M token context window available in beta, and shows significantly improved long-context retrieval. On MRCR v2, a needle-in-a-haystack benchmark, it reaches 76% accuracy, indicating much lower context degradation over extended sessions.

Claude Opus 4.6 also introduces adaptive thinking and configurable effort levels, allowing developers to balance reasoning depth, speed, and cost. In practice, this makes the model more deliberate on complex problems while remaining controllable for simpler tasks. It supports 128K output tokens, which is useful for large reports, analyses, and structured documents.

On evaluations, Anthropic reports that Opus 4.6 leads on several reasoning and knowledge-work benchmarks, including Terminal-Bench 2.0, Humanity’s Last Exam, and GDPval-AA; on GDPval-AA it outperforms GPT-5.2 by roughly 144 Elo points. The Terminal-Bench 2.0 lead does not account for the 77.3% that GPT 5.3 Codex reports on the same benchmark. Taken together, these strengths make Opus 4.6 especially well-suited for analyst workflows, document-heavy research, data analysis, and scenarios where accuracy and consistency matter more than rapid execution.
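To give a feel for what a gap of roughly 144 Elo points means, here is a minimal sketch using the standard Elo expected-score formula. It assumes GDPval-AA ratings follow the conventional 400-point logistic scale, which this article does not confirm, and `elo_expected_score` is a hypothetical helper name used only for illustration.

```python
def elo_expected_score(rating_gap: float, scale: float = 400.0) -> float:
    """Expected score of the higher-rated side under the standard Elo model.

    rating_gap: rating of the stronger side minus the weaker side.
    scale: 400 is the conventional Elo scale (an assumption here).
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / scale))


if __name__ == "__main__":
    gap = 144  # the approximate gap quoted above
    print(f"{elo_expected_score(gap):.3f}")  # prints 0.696
```

Under those assumptions, a 144-point gap corresponds to the higher-rated model being preferred in roughly 70% of head-to-head comparisons.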

Task Comparison 1: Coding and UI Development

Let us now compare Claude Opus 4.6 and GPT 5.3 Codex on a simple but representative task: building a basic user interface. The goal here is to observe how each one approaches UI construction, code structure, and iteration when given the same prompt.

Prompt Used for Both Models

Build a simple dashboard UI for a SaaS product with a sidebar, a header, and a main content area showing cards for key metrics.

Use a modern component-based approach, apply sensible default styling, and clearly structure the code so it is easy to iterate on. Do not over optimize.

Be ready to make follow up UI changes.

Claude Opus 4.6: UI Build Output

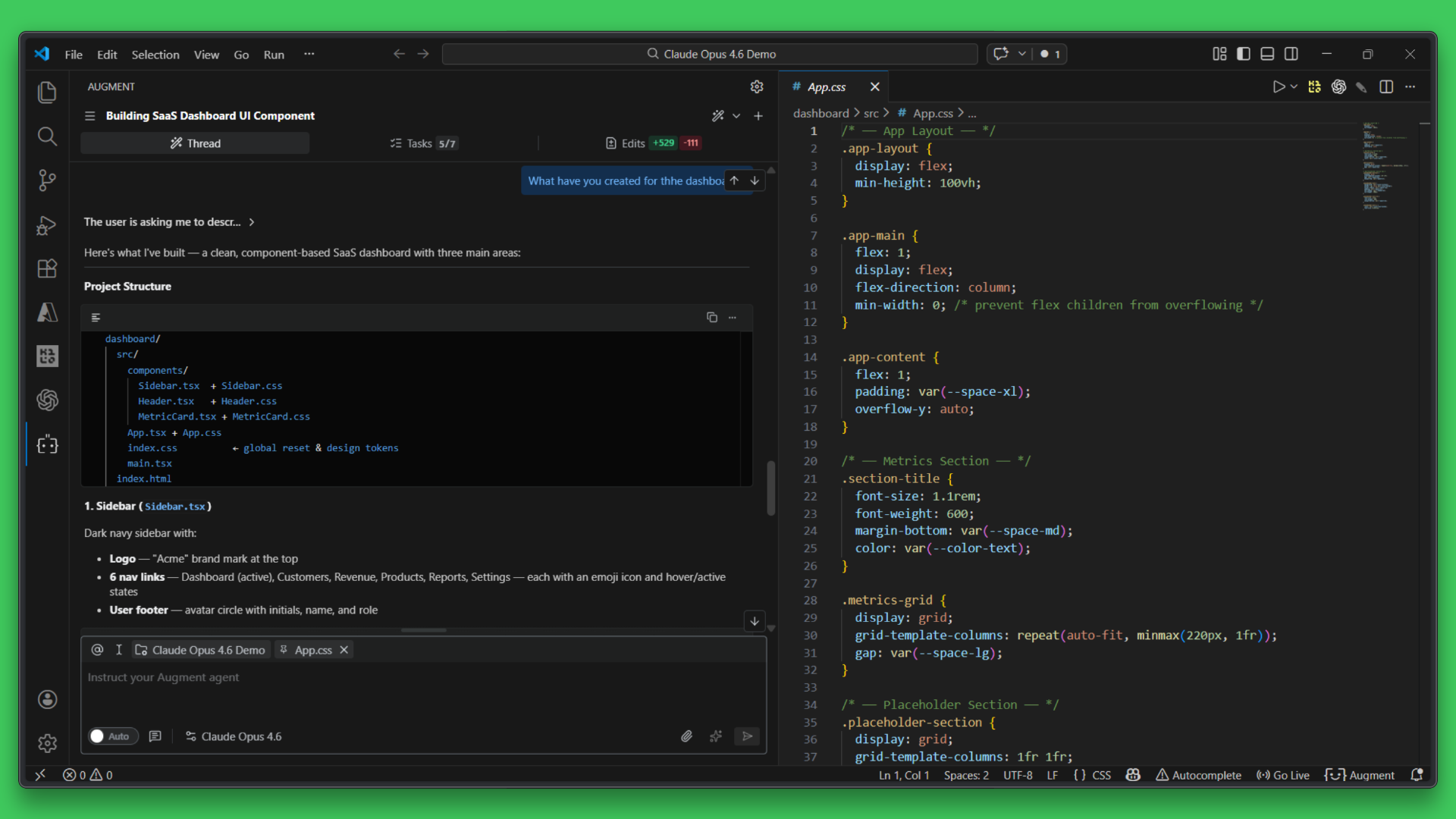

I first ran the prompt on Claude Opus 4.6. The generated source code is shown here.

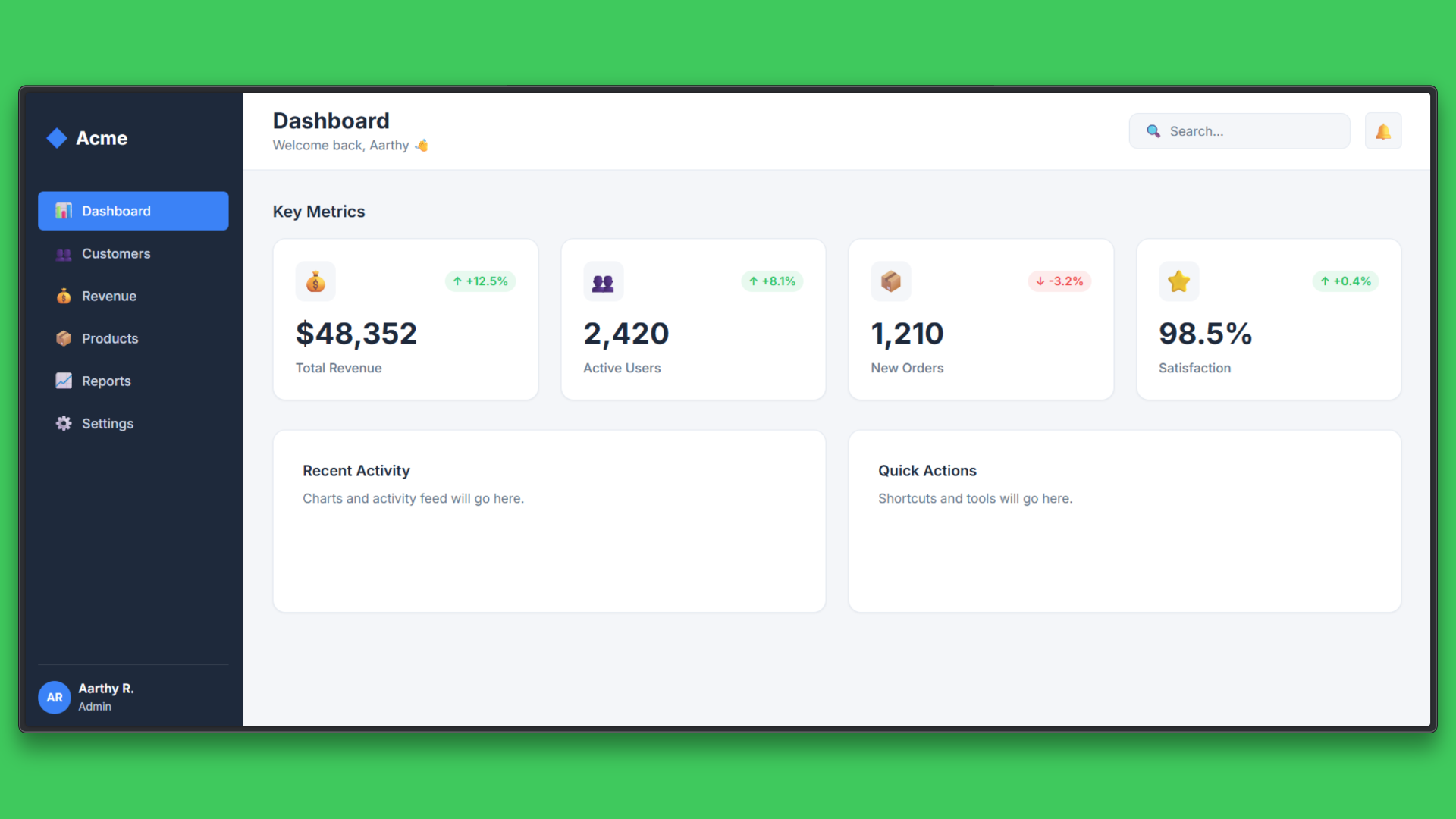

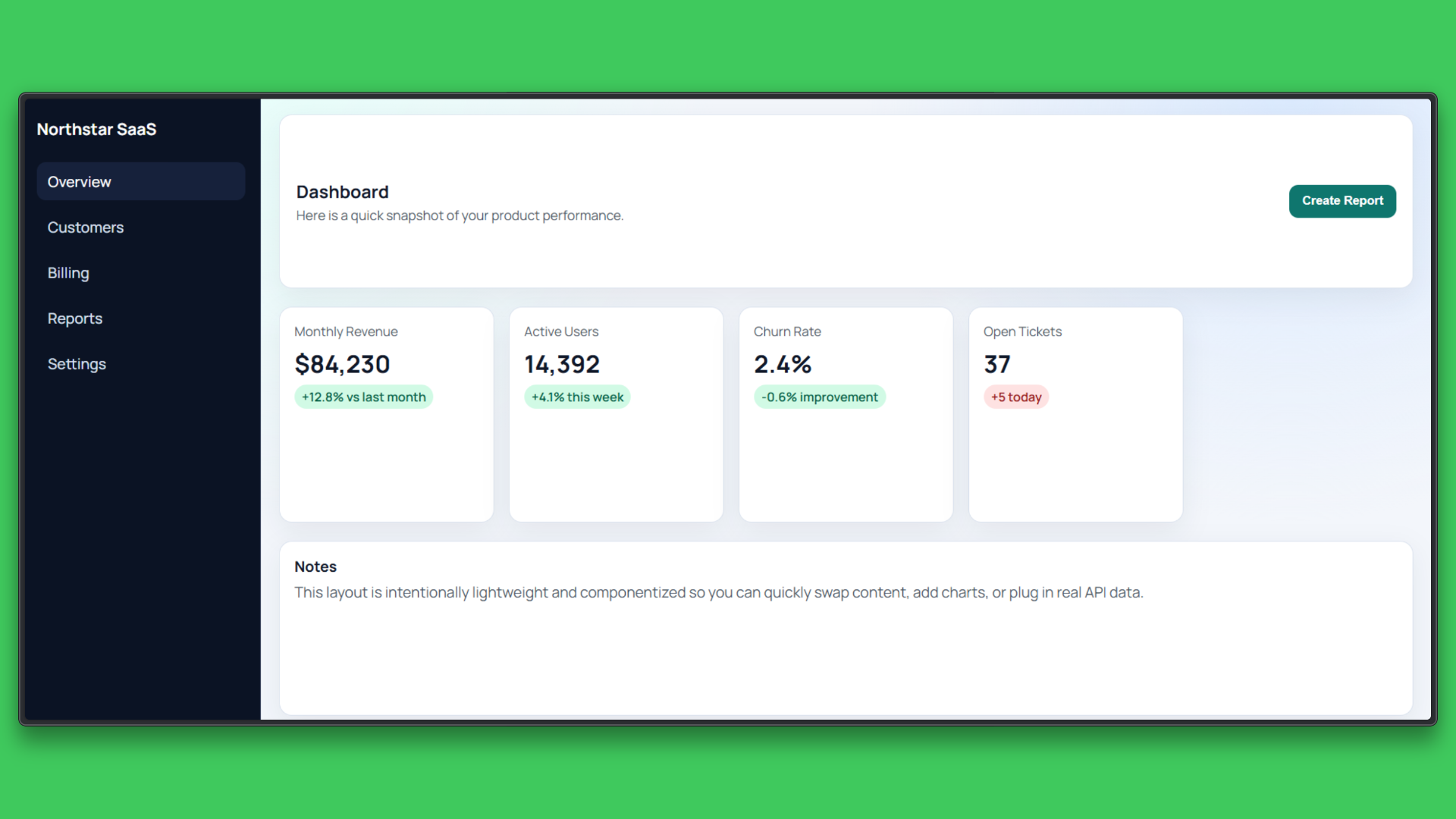

After running the code, the dashboard UI appeared as shown below:

Claude Opus 4.6 generated a dashboard UI using React, with a clear component structure, consistent spacing, and well-organized layout. The task was completed in around 3 minutes, with the model spending more time reasoning about component hierarchy and visual balance before generating code.

The output rendered cleanly on first run and felt deliberate in its design choices. Overall, the model prioritized structure and consistency, resulting in a solid React-based UI foundation.

GPT 5.3 Codex: UI Build Output

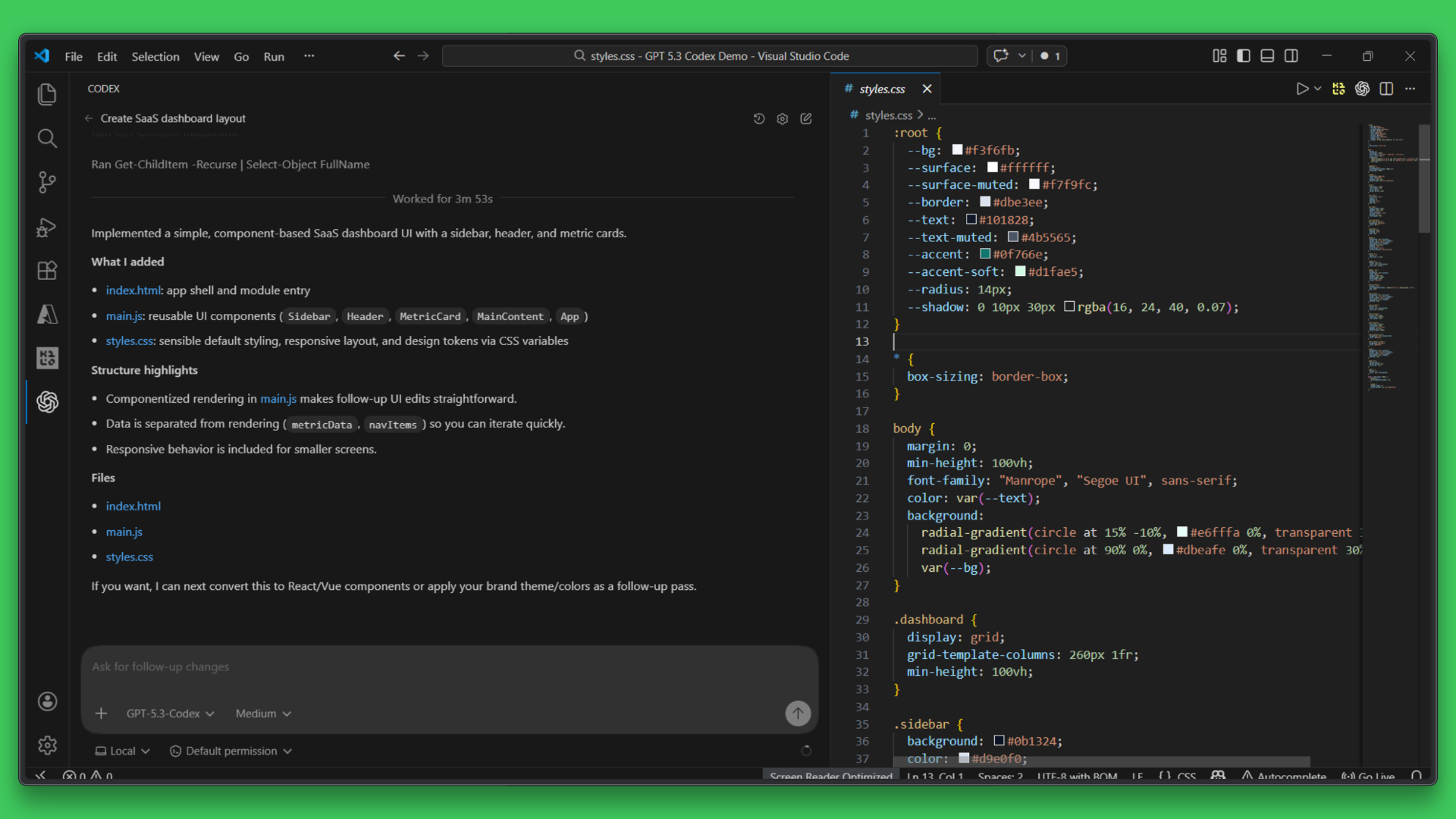

Using the same prompt, I then ran the task on GPT 5.3 Codex. The resulting source code is here.

After running the code, the dashboard UI appeared as shown below:

GPT 5.3 Codex generated a complete dashboard UI from scratch, including layout, styling, and basic component structure. The model produced working HTML and CSS in 3 minutes and 53 seconds, with sensible defaults for spacing, typography, and responsiveness.

The output was immediately runnable and required no manual fixes to render correctly. Overall, the model prioritized getting a functional UI in place quickly, making it easy to iterate further.

Task Comparison 2: Working With Data

Let us now compare Claude Opus 4.6 and GPT 5.3 Codex on a practical data analysis task using real application logs. The aim here is to see how each one explores data, structures its analysis, and delivers insights when working with the same dataset.

This comparison focuses on clarity of results, reliability of analysis, and how efficiently each model moves from raw data to actionable understanding.

Prompt Used for Both Models

> You are given an Excel file named **`sample_application_logs.xlsx`** containing one week of application logs. The data includes timestamps, user IDs, request types, response times, and status codes.

>

> 1. Explore the dataset and identify notable patterns or anomalies.

> 2. Calculate and present key metrics such as average response time, error rates by status code, and peak usage periods.

> 3. Summarize performance by request type in a clear table.

> 4. Provide a short written analysis explaining what the data indicates and any potential issues or follow-up actions.

>

> Focus on clarity, correctness, and practical insights. Use structured tables where helpful and avoid unnecessary assumptions.

Claude Opus 4.6: Data Exploration Task

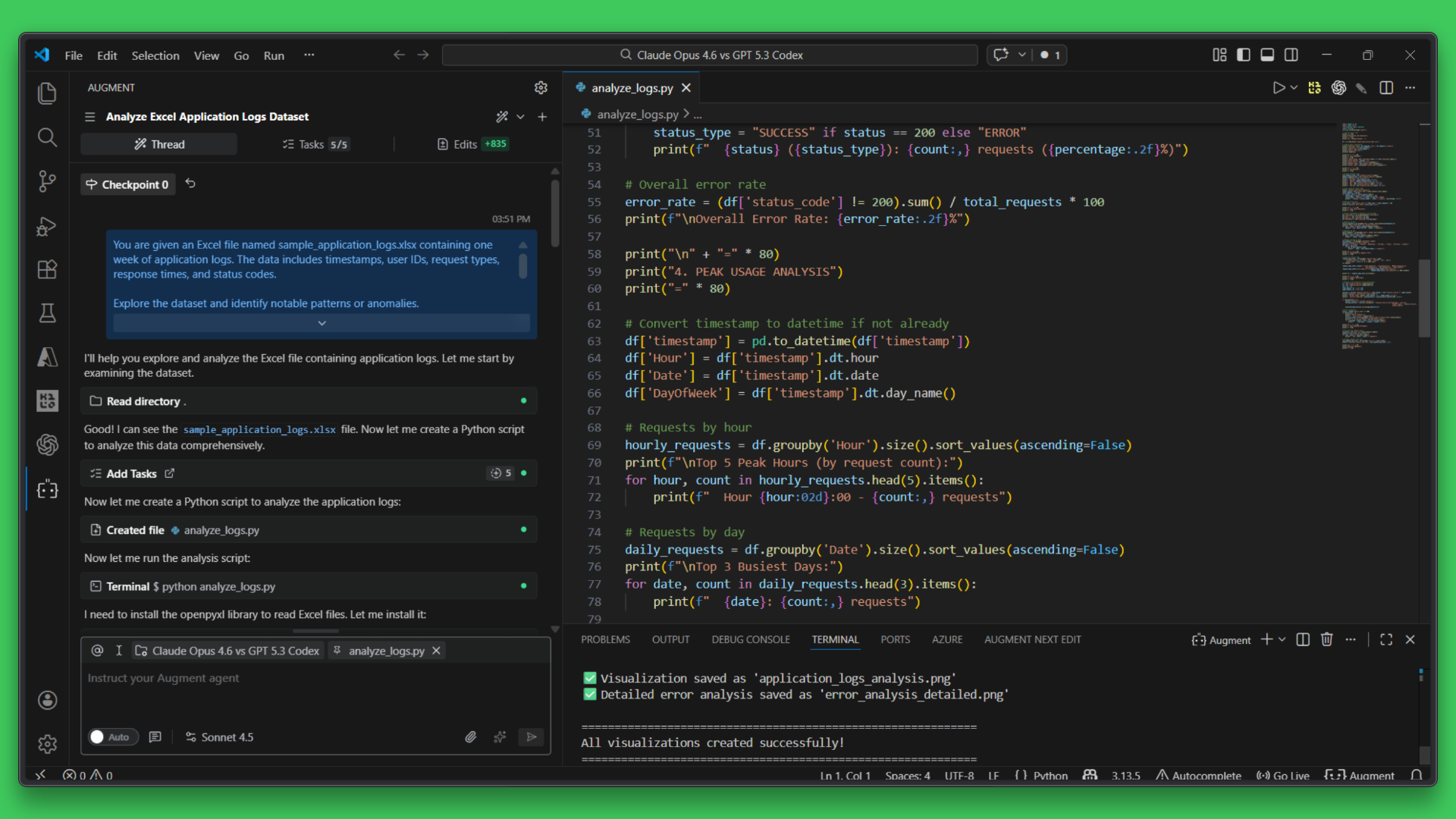

I ran the data exploration task on Claude Opus 4.6 to observe how it approached analysis, structure, and insight generation over a longer workflow.

The final visualization was generated:

Claude Opus 4.6 approached the data task in a structured and methodical way, first inspecting the Excel file and then building a full analysis pipeline around it. Over the course of around 8 minutes, it created and iterated on Python scripts to load the data, calculate metrics, and handle issues such as missing dependencies and column mismatches.

The model went beyond basic analysis by producing written reports, summary tables, and visualizations, demonstrating strong ownership of the end-to-end workflow. Overall, the result emphasized clarity and completeness, even when the task required multiple steps and adjustments.
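The metrics requested in the prompt can be sketched in a few lines of Python. This is an illustrative sketch only, not the script either model produced: the rows, the column names (`timestamp`, `request_type`, `response_ms`, `status`), and the `summarize` helper are all hypothetical, and the in-memory list stands in for the Excel file, which would need a spreadsheet reader to load.

```python
from collections import Counter, defaultdict
from datetime import datetime
from statistics import mean

# Hypothetical rows standing in for the spreadsheet; column names are assumed.
ROWS = [
    {"timestamp": "2026-02-02T09:05:00", "request_type": "GET", "response_ms": 120, "status": 200},
    {"timestamp": "2026-02-02T09:20:00", "request_type": "GET", "response_ms": 180, "status": 200},
    {"timestamp": "2026-02-02T09:45:00", "request_type": "POST", "response_ms": 300, "status": 500},
    {"timestamp": "2026-02-02T14:10:00", "request_type": "POST", "response_ms": 200, "status": 201},
    {"timestamp": "2026-02-03T09:30:00", "request_type": "GET", "response_ms": 100, "status": 404},
]


def summarize(rows):
    """Compute the headline metrics the prompt asks for."""
    total = len(rows)

    # Share of all requests that ended in each 4xx/5xx status code.
    status_counts = Counter(r["status"] for r in rows)
    error_rates = {code: n / total for code, n in status_counts.items() if code >= 400}

    # Bucket requests by hour to find the busiest period.
    hourly = Counter(
        datetime.fromisoformat(r["timestamp"]).strftime("%Y-%m-%d %H:00") for r in rows
    )
    peak_hour = hourly.most_common(1)[0]  # (hour label, request count)

    # Per-request-type volume, latency, and error rate.
    grouped = defaultdict(list)
    for r in rows:
        grouped[r["request_type"]].append(r)
    by_type = {
        rtype: {
            "requests": len(rs),
            "avg_ms": mean(r["response_ms"] for r in rs),
            "error_rate": sum(r["status"] >= 400 for r in rs) / len(rs),
        }
        for rtype, rs in grouped.items()
    }

    return {
        "avg_response_ms": mean(r["response_ms"] for r in rows),
        "error_rates": error_rates,
        "peak_hour": peak_hour,
        "by_type": by_type,
    }


if __name__ == "__main__":
    for key, value in summarize(ROWS).items():
        print(key, value)
```

Keeping the calculation in one pure function makes it easy to rerun the same summary after a follow-up question, whichever model is driving the analysis.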

GPT 5.3 Codex: Data Exploration Task

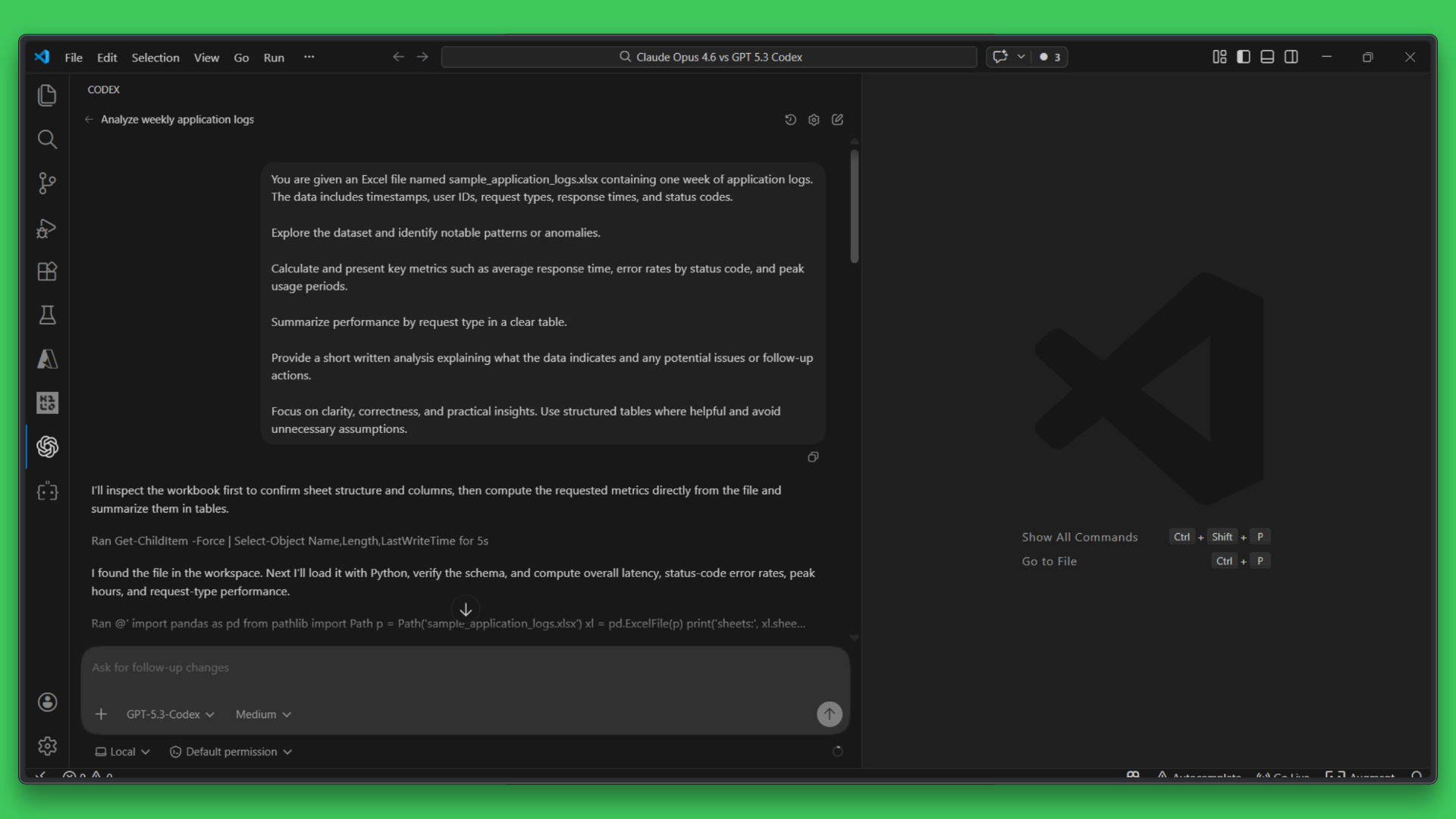

Using the same dataset and prompt, I ran the data exploration task on GPT 5.3 Codex to evaluate how quickly it extracted key metrics and summarized insights from the data.

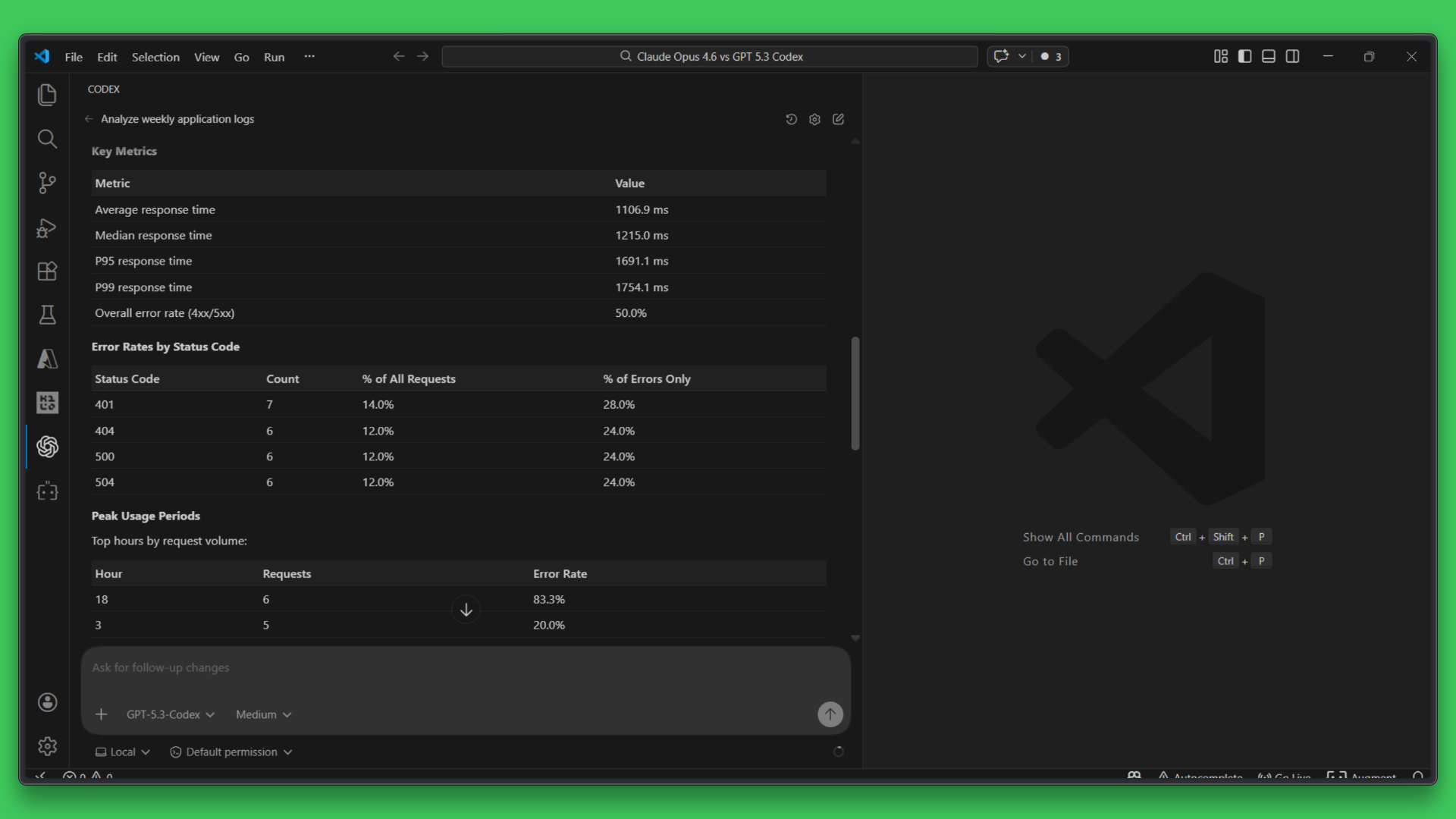

The final table for visualization was generated:

GPT 5.3 Codex completed the data analysis task in around 1 minute and 35 seconds, producing a clear, structured summary directly from the Excel file. Instead of building a full scripting pipeline, it focused on extracting key metrics, error distributions, and peak usage periods in a concise, readable format.

The results were immediately usable, with well-organized tables and straightforward insights that required no additional setup. Overall, Codex prioritized speed and direct answers, making it well-suited for quick analysis and fast iteration when time to insight matters most.

Comparison of GPT 5.3 Codex and Claude Opus 4.6

Key Takeaways and Final Verdict

For developers choosing between the two, the decision comes down to workflow. If you are building features, iterating on code, or working across tools end to end, GPT 5.3 Codex is a strong fit. If your work involves deep analysis, large documents, or careful reasoning over data, Claude Opus 4.6 is likely the better choice. Rather than a single winner, these models represent different trade-offs between execution speed and analytical depth.

If you’re building real AI systems that need reliable data ingestion, document parsing, and scalable agent workflows, Tensorlake provides a unified solution. Whether you’re working with PDFs, spreadsheets, or slides, Tensorlake helps you build scalable, production-ready pipelines that connect seamlessly with your AI models and automation workflows.
