Gemini 3 vs GPT-5.2: Detailed Coding Comparison

By the end of 2025, two major AI releases had reshaped how developers build and ship software: OpenAI’s GPT-5.2 and Google’s Gemini 3 Pro. Both bring improvements in reasoning, coding assistance, and multimodal capabilities, but they take different approaches to solving developer problems.

Gemini 3 Pro was officially launched on November 18, 2025, as Google’s most advanced multimodal model yet, designed to handle complex text, image, audio, and video tasks alongside code and reasoning.

A few weeks later, GPT-5.2 was released on December 11, 2025, with a focus on predictable reasoning, strong code generation, and reliable long-context performance. It’s built to support workflows where accuracy and consistency matter most.

Because each model excels in different areas, the best choice often depends on the type of project you are building. To make that clearer, this article compares both models and evaluates them through a set of practical coding challenges to see how they perform in real development scenarios.

Comparing GPT-5.2 and Gemini 3 Pro

Both models are fully multimodal and both support tool use, reasoning chains, and long-context inputs large enough to handle full repositories or multi-service architectures. They are built with safety controls and enterprise features, but they lean in different directions.

Gemini 3 Pro fits naturally into Google’s ecosystem, with tighter integrations across Search, YouTube, and media-centric workflows. It tends to feel more “creative” out of the box, especially for interactive or multimodal builds.

GPT-5.2, on the other hand, focuses on predictable reasoning and developer-grade code generation. Its step-by-step “Thinking” mode makes debugging, refactoring, and architecture planning straightforward, and its ChatGPT interface remains one of the most polished for technical work.

Benchmark Breakdown

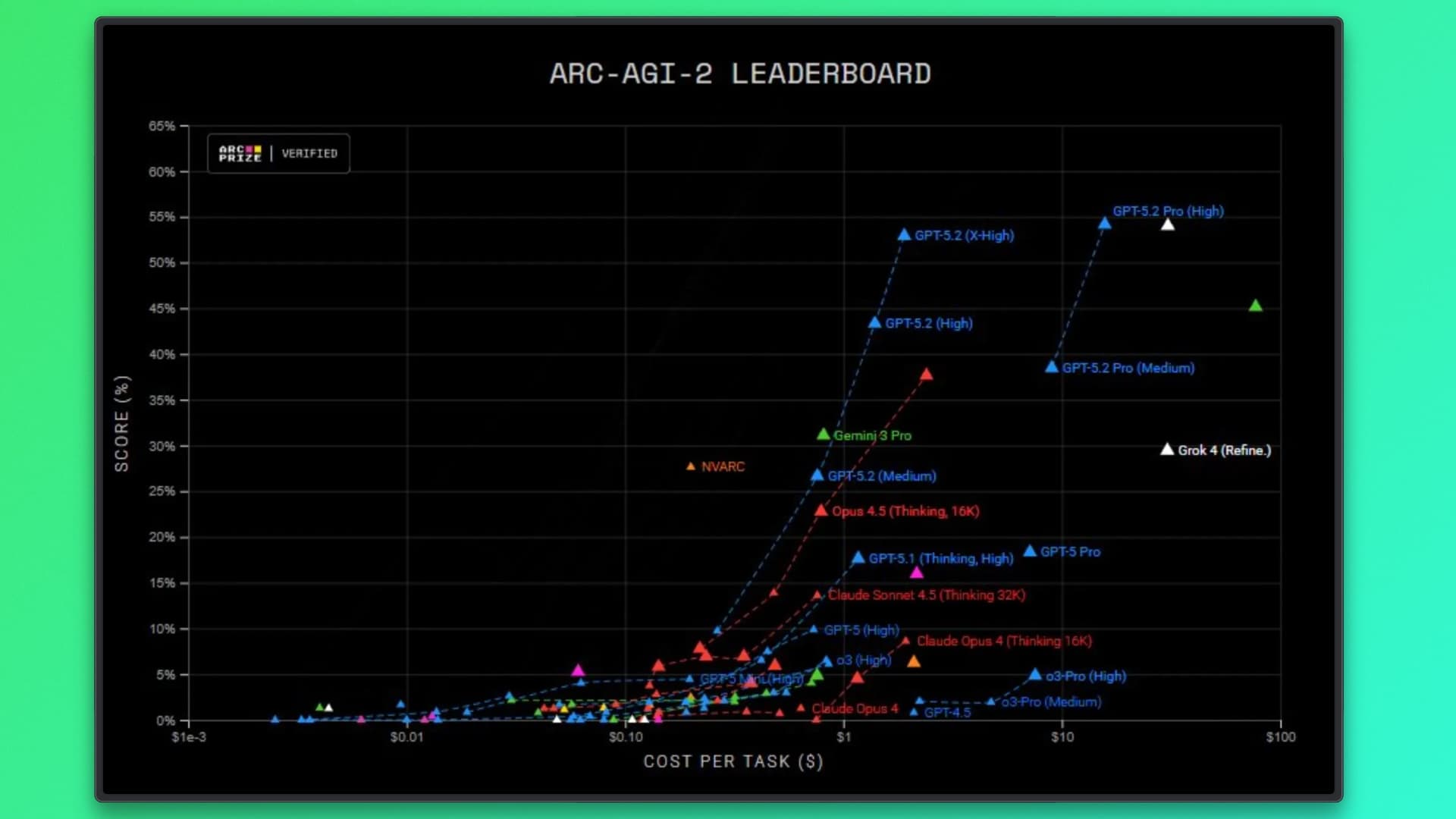

Using numbers reported at launch, GPT-5.2 generally leads on reasoning-heavy and software-engineering benchmarks, which makes it the stronger fit for complex coding workflows. Gemini 3 Pro keeps an edge in multimodal depth, which shows up in tasks involving images, audio, and mixed-media reasoning.

TL;DR

- GPT-5.2 consistently produced solutions that felt complete, polished, and ready for real use. Its outputs included stronger UI design, smoother interactions, and thoughtful features that went beyond the basic prompt.

- Gemini 3 Pro implemented the core logic across all challenges, but the results were simpler. Interfaces were limited, customization options were minimal, and key usability features were often missing.

- The ARC-AGI-2 leaderboard reflects this same pattern at scale, showing GPT-5.2 with a clear advantage in reasoning-heavy tasks and overall reliability.

- Based on both the coding challenges and broader benchmarks, GPT-5.2 currently offers a better experience for developers who need high-quality, production-oriented code.

Why Coding Challenges?

Benchmarks show how a model performs in isolation, but coding challenges reveal how it behaves when you are actually building, debugging, structuring logic, handling state, and producing code that runs without fuss. They highlight the practical differences that matter in day-to-day development.

So let’s put both models to work and see how they handle real coding scenarios.

Challenge 1: Build a Music Visualizer Using Audio Frequency Data

Create a browser-based music visualizer that analyzes live audio frequency data using the Web Audio API and renders a real-time visualization on an HTML canvas. The visualizer should react dynamically to changes in amplitude and frequency, run smoothly at 60fps, and use efficient data processing suitable for continuous playback.
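To make the "efficient data processing" requirement concrete: in the browser, `AnalyserNode.getByteFrequencyData()` fills a `Uint8Array` of length `frequencyBinCount` with values from 0 to 255 on every frame. A minimal sketch of the per-frame step, assuming linear grouping of bins into bars and our own extra easing on bar heights (the `updateBars` name and the `smoothing` default are hypothetical, not taken from either model's output), could look like this. It writes into a reused array so nothing is allocated per frame:

```typescript
// Groups raw FFT bins into bars.length bars (linear grouping; trailing bins that
// don't fill a whole bar are ignored) and eases each bar toward its new level.
// Writes into `bars` in place so no allocation happens at 60fps.
function updateBars(bins: Uint8Array, bars: Float32Array, smoothing = 0.7): void {
  const binsPerBar = Math.max(1, Math.floor(bins.length / bars.length));
  for (let b = 0; b < bars.length; b++) {
    const start = b * binsPerBar;
    const end = Math.min(start + binsPerBar, bins.length);
    let sum = 0;
    for (let i = start; i < end; i++) sum += bins[i];
    // Average magnitude of this bar's bins, normalised to 0..1.
    const level = end > start ? sum / (end - start) / 255 : 0;
    // Exponential smoothing: keep part of last frame's height to avoid jitter.
    bars[b] = smoothing * bars[b] + (1 - smoothing) * level;
  }
}

// Browser wiring (illustrative only, not executed here):
//   const analyser = audioCtx.createAnalyser();
//   const bins = new Uint8Array(analyser.frequencyBinCount);
//   const bars = new Float32Array(64);
//   function frame() {
//     analyser.getByteFrequencyData(bins);
//     updateBars(bins, bars);
//     // draw each bar with height bars[i] * canvas.height
//     requestAnimationFrame(frame);
//   }
```

`AnalyserNode` already has its own `smoothingTimeConstant`, so the extra easing here is a visual design choice. Many visualizers also group bins logarithmically, since linear grouping gives the high frequencies most of the bars.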

Gemini 3 Pro Response:

You can find the generated code here.

Output:

ChatGPT 5.2 Response:

You can find the generated code here.

Output:

Outcome Summary:

ChatGPT 5.2 delivered a more polished and feature-rich visualizer, with a better UI, customization options, and support for uploading and downloading audio files.

Gemini 3 Pro produced a functional visualizer but with a plain UI, limited interactivity, and no option to upload existing audio. ChatGPT 5.2 clearly provided the stronger solution in both design and functionality.

Challenge 2: Collaborative Markdown Editor With Live Preview

Build a web-based Markdown editor that supports real-time collaboration between multiple users. The interface should include a split-pane layout with a text editor on the left and a live preview on the right. As users type, the preview should update instantly without lag. Use WebSockets or a CRDT-based sync layer to merge edits safely, avoid conflicts, and keep all clients in sync. Add basic formatting shortcuts, keyboard navigation, and a clean UI suitable for embedding into a developer tool.
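To show what the CRDT option in this prompt involves, here is a minimal sketch of a causal-tree (RGA-style) sequence CRDT for plain text. It is our illustration, not code from either model; the class and method names are hypothetical, and a production editor would more likely use an established library such as Yjs or Automerge. Each character records the character it was typed after, and document order is a deterministic walk of that tree, so replicas that exchange state converge to the same text regardless of delivery order:

```typescript
// Minimal causal-tree sequence CRDT (sketch). Each character points at the
// character it was inserted after; order depends only on the set of nodes.
interface CharNode {
  key: string;           // unique id: "counter@site"
  counter: number;       // Lamport timestamp
  site: string;          // replica id
  parent: string | null; // key of the preceding character, null = document start
  ch: string;
}

class CausalTreeText {
  private nodes = new Map<string, CharNode>();
  private tombstones = new Set<string>(); // deleted keys are kept, never removed
  private clock = 0;

  constructor(readonly site: string) {}

  // Siblings sort newest first; ties are broken by site id so all replicas agree.
  private static compare(a: CharNode, b: CharNode): number {
    if (a.counter !== b.counter) return b.counter - a.counter;
    return a.site < b.site ? 1 : a.site > b.site ? -1 : 0;
  }

  // Pre-order walk of the tree gives document order (tombstones included).
  private ordered(): CharNode[] {
    const children = new Map<string | null, CharNode[]>();
    for (const n of this.nodes.values()) {
      const list = children.get(n.parent) ?? [];
      list.push(n);
      children.set(n.parent, list);
    }
    for (const list of children.values()) list.sort(CausalTreeText.compare);
    const out: CharNode[] = [];
    const stack = [...(children.get(null) ?? [])].reverse();
    while (stack.length > 0) {
      const n = stack.pop()!;
      out.push(n);
      const kids = children.get(n.key) ?? [];
      for (let i = kids.length - 1; i >= 0; i--) stack.push(kids[i]);
    }
    return out;
  }

  private visible(): CharNode[] {
    return this.ordered().filter((n) => !this.tombstones.has(n.key));
  }

  text(): string {
    return this.visible().map((n) => n.ch).join("");
  }

  insert(index: number, ch: string): void {
    const vis = this.visible();
    if (index < 0 || index > vis.length) throw new RangeError("index out of range");
    this.clock += 1; // newer than every node this replica has seen
    const key = `${this.clock}@${this.site}`;
    const parent = index === 0 ? null : vis[index - 1].key;
    this.nodes.set(key, { key, counter: this.clock, site: this.site, parent, ch });
  }

  delete(index: number): void {
    const vis = this.visible();
    if (index < 0 || index >= vis.length) throw new RangeError("index out of range");
    this.tombstones.add(vis[index].key);
  }

  // State-based merge: union of nodes and tombstones (commutative, idempotent).
  merge(other: CausalTreeText): void {
    for (const [key, node] of other.nodes) {
      this.nodes.set(key, node);
      this.clock = Math.max(this.clock, node.counter);
    }
    for (const key of other.tombstones) this.tombstones.add(key);
  }
}
```

Over WebSockets, each client could send its new nodes and tombstones to a relay server that forwards them to the other clients. The sketch merges whole states and rebuilds the ordering on every call, which keeps it short but would be too slow for large documents.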

Gemini 3 Pro Response:

You can find the generated code in this repo.

Output:

ChatGPT 5.2 Response:

You can find the generated code here.

Output:

Outcome Summary:

Both models produced functional editors that met the core requirements, but ChatGPT 5.2 handled the collaborative aspect more effectively. It added features such as customizable environment names and shareable invite links, making the collaboration experience feel complete.

Gemini 3 Pro implemented real-time syncing but did not present a clear collaborative environment, resulting in a less cohesive experience. ChatGPT 5.2 ultimately delivered the stronger solution for this challenge.

Challenge 3: WebAssembly Image Filter Engine

Create a browser-friendly image processing engine powered by WebAssembly. Write core filters—grayscale, blur, sharpen, and invert—in C++, compile them to WASM, and expose the functions to JavaScript. The app should allow users to upload an image, apply filters with minimal latency, and preview the results instantly. Focus on efficient memory handling between WASM and JS, and design the engine so additional filters can be added without refactoring the entire pipeline.
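On the JavaScript side, "efficient memory handling" mostly means copying the pixels into the module's linear memory once, letting the C++ filters work in place, and being careful when that memory grows. A hypothetical helper pair, assuming the compiled module exposes its `WebAssembly.Memory` and that the byte offset `ptr` comes from an allocator exported by the C++ code (neither detail comes from the models' output):

```typescript
// Size of one WebAssembly memory page in bytes (fixed by the spec).
const WASM_PAGE_BYTES = 64 * 1024;

// Copies RGBA pixels into linear memory at `ptr`, growing memory if needed.
// `ptr` is assumed to come from an allocator exported by the C++ module.
function copyIntoWasmMemory(
  memory: WebAssembly.Memory,
  ptr: number,
  pixels: Uint8ClampedArray,
): Uint8Array {
  const needed = ptr + pixels.byteLength;
  const current = memory.buffer.byteLength;
  if (needed > current) {
    memory.grow(Math.ceil((needed - current) / WASM_PAGE_BYTES));
  }
  // grow() detaches the old ArrayBuffer, so always build views from memory.buffer
  // after any call that might grow memory.
  const view = new Uint8Array(memory.buffer, ptr, pixels.byteLength);
  view.set(pixels);
  return view;
}

// Reads the filtered pixels back. slice() copies, so the result stays valid
// even if the module grows its memory later.
function readFromWasmMemory(
  memory: WebAssembly.Memory,
  ptr: number,
  length: number,
): Uint8ClampedArray {
  return new Uint8ClampedArray(memory.buffer.slice(ptr, ptr + length));
}
```

The returned `Uint8ClampedArray` has the same RGBA layout as `ImageData.data`, so it can be wrapped in a new `ImageData` and drawn to a canvas.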

Gemini 3 Pro Response:

You can find the generated code here.

Output:

ChatGPT 5.2 Response:

You can find the generated code here.

Output:

Outcome Summary:

Both models successfully delivered a working WebAssembly image filter engine, but ChatGPT 5.2 provided a noticeably more refined solution. It offered multiple filter controls, adjustable blur strength, an easy way to revert to the original image, and thoughtful handling of edge cases.

Gemini 3 Pro produced functional filters, but the interface was limited, lacked fine-grained controls, and did not support combining multiple filters smoothly. Gemini accomplished the core task, but ChatGPT 5.2 delivered a far more complete, user-friendly, and flexible implementation.
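The features that separated the two results (several filters, adjustable blur strength, reverting to the original, and chaining filters) map onto a small pipeline design. The sketch below is ours, not either model's output: `registerFilter`, `applyPipeline`, and the `radius` parameter are hypothetical names, and plain TypeScript filter bodies stand in for the C++/WASM ones so the structure is easy to follow:

```typescript
// Pixels are RGBA bytes, the same layout as ImageData.data.
type Params = Record<string, number>;
type Filter = (px: Uint8ClampedArray, width: number, height: number, params: Params) => void;

// Adding a filter means registering one function; the pipeline never changes.
const registry = new Map<string, Filter>();
function registerFilter(name: string, fn: Filter): void {
  registry.set(name, fn);
}

registerFilter("grayscale", (px) => {
  for (let i = 0; i < px.length; i += 4) {
    const y = 0.299 * px[i] + 0.587 * px[i + 1] + 0.114 * px[i + 2]; // Rec. 601 luma
    px[i] = px[i + 1] = px[i + 2] = y;
  }
});

registerFilter("invert", (px) => {
  for (let i = 0; i < px.length; i += 4) {
    px[i] = 255 - px[i];
    px[i + 1] = 255 - px[i + 1];
    px[i + 2] = 255 - px[i + 2];
  }
});

// Naive box blur with adjustable radius: O(w * h * r^2); a separable or
// sliding-window version would be faster for large radii.
registerFilter("blur", (px, w, h, params) => {
  const radius = Math.max(0, Math.floor(params.radius ?? 1));
  if (radius === 0) return;
  const src = new Uint8ClampedArray(px); // read from a snapshot, write into px
  for (let y = 0; y < h; y++) {
    for (let x = 0; x < w; x++) {
      let r = 0, g = 0, b = 0, n = 0;
      for (let dy = -radius; dy <= radius; dy++) {
        const yy = y + dy;
        if (yy < 0 || yy >= h) continue;
        for (let dx = -radius; dx <= radius; dx++) {
          const xx = x + dx;
          if (xx < 0 || xx >= w) continue;
          const j = (yy * w + xx) * 4;
          r += src[j]; g += src[j + 1]; b += src[j + 2]; n++;
        }
      }
      const i = (y * w + x) * 4;
      px[i] = r / n; px[i + 1] = g / n; px[i + 2] = b / n; // alpha untouched
    }
  }
});

interface Step { name: string; params?: Params }

// Applies steps in order to a copy, so the original stays available for "revert".
function applyPipeline(original: Uint8ClampedArray, w: number, h: number, steps: Step[]): Uint8ClampedArray {
  const out = new Uint8ClampedArray(original);
  for (const step of steps) {
    const fn = registry.get(step.name);
    if (!fn) throw new Error(`Unknown filter: ${step.name}`);
    fn(out, w, h, step.params ?? {});
  }
  return out;
}
```

Because every run starts from the untouched original, changing the blur slider or removing a step just means re-running `applyPipeline` with the new step list.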

Final Verdict

Across all three challenges, GPT-5.2 consistently delivered more complete, polished, and developer-ready solutions. Its interfaces were cleaner, its interactions smoother, and its features aligned closely with what real users expect. Gemini 3 Pro produced functional results, but they lacked refinement and felt limited in flexibility and usability.

GPT-5.2 did not just finish the tasks. It improved them in ways that showed a deeper understanding of real development work, while Gemini 3 Pro focused mainly on core functionality.

As one tweet summed it up, the gap is not closing; it is widening. At this point, GPT-5.2 stands out as the more reliable and capable choice for practical coding tasks.
