OpenCode: The Best Claude Code Alternative

Most developers today already use AI while writing code. It is common to ask an agent to scan a repository, refactor a service, or help track down a bug instead of handling everything manually. For many teams, these tools are now part of the daily workflow.

Claude Code gained popularity for good reason. It works well in the terminal, handles large codebases reliably, and performs consistently on multi-file changes. For many developers, it was the first AI agent that felt dependable enough for real production work.

As usage increased, some practical concerns started to surface. Frequent agent use makes pricing more noticeable. Limited control over model selection and routing matters when costs or performance vary by task. The tool’s managed design limits visibility into its internal agent logic and restricts how deeply teams can adapt it over time.

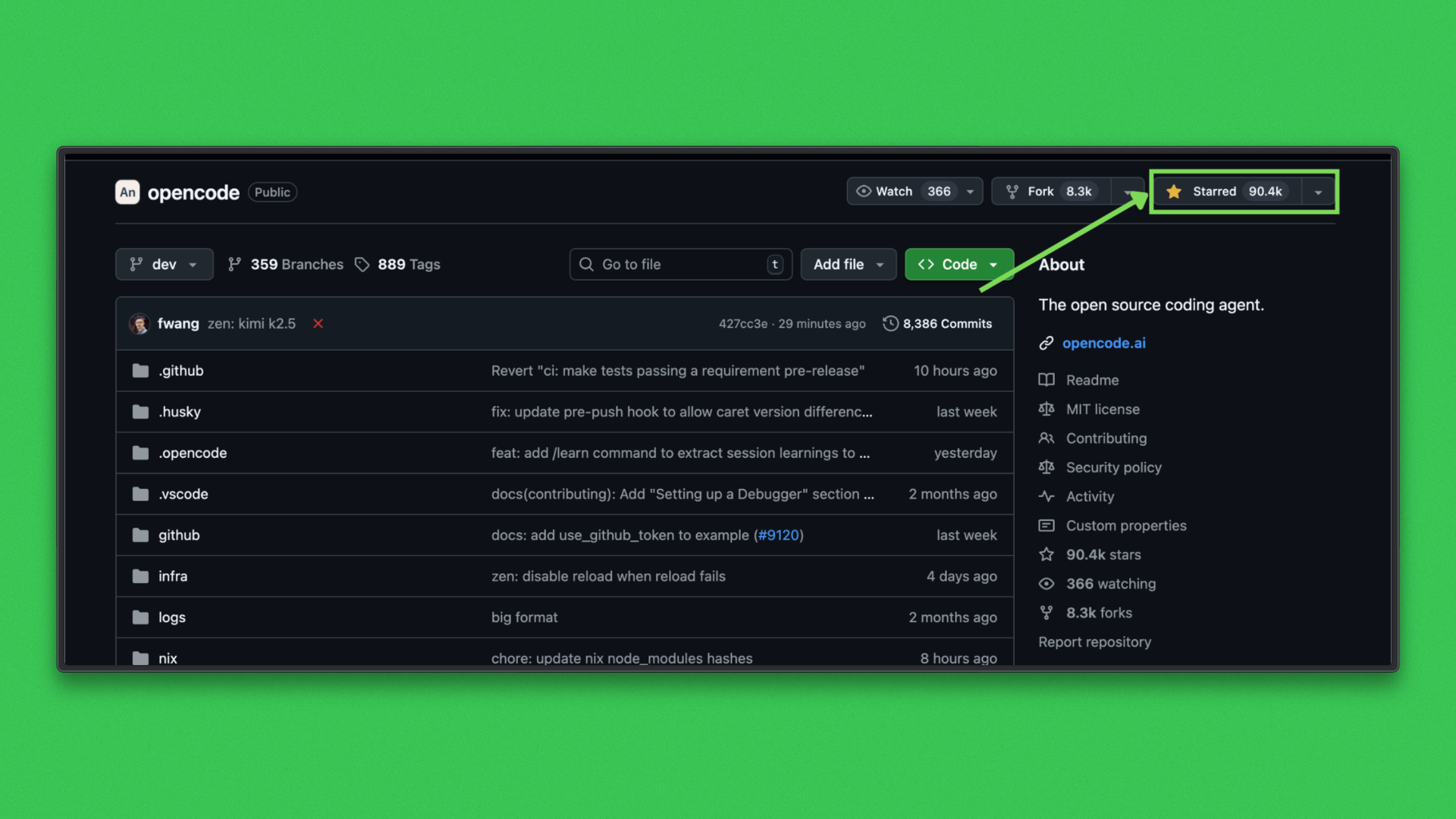

Interest in open source alternatives has grown alongside these concerns. At the time of writing, OpenCode has 90.4k GitHub stars, ranking it among the most-followed developer tools in this category. That level of attention reflects steady adoption across individual developers and teams.

What Claude Code Gets Right and Why It Sets the Bar

Claude Code built its reputation by performing reliably on complex, real-world development tasks. It handles large codebases, long context, and multi-step changes with a level of consistency that many tools in this category struggle to reach.

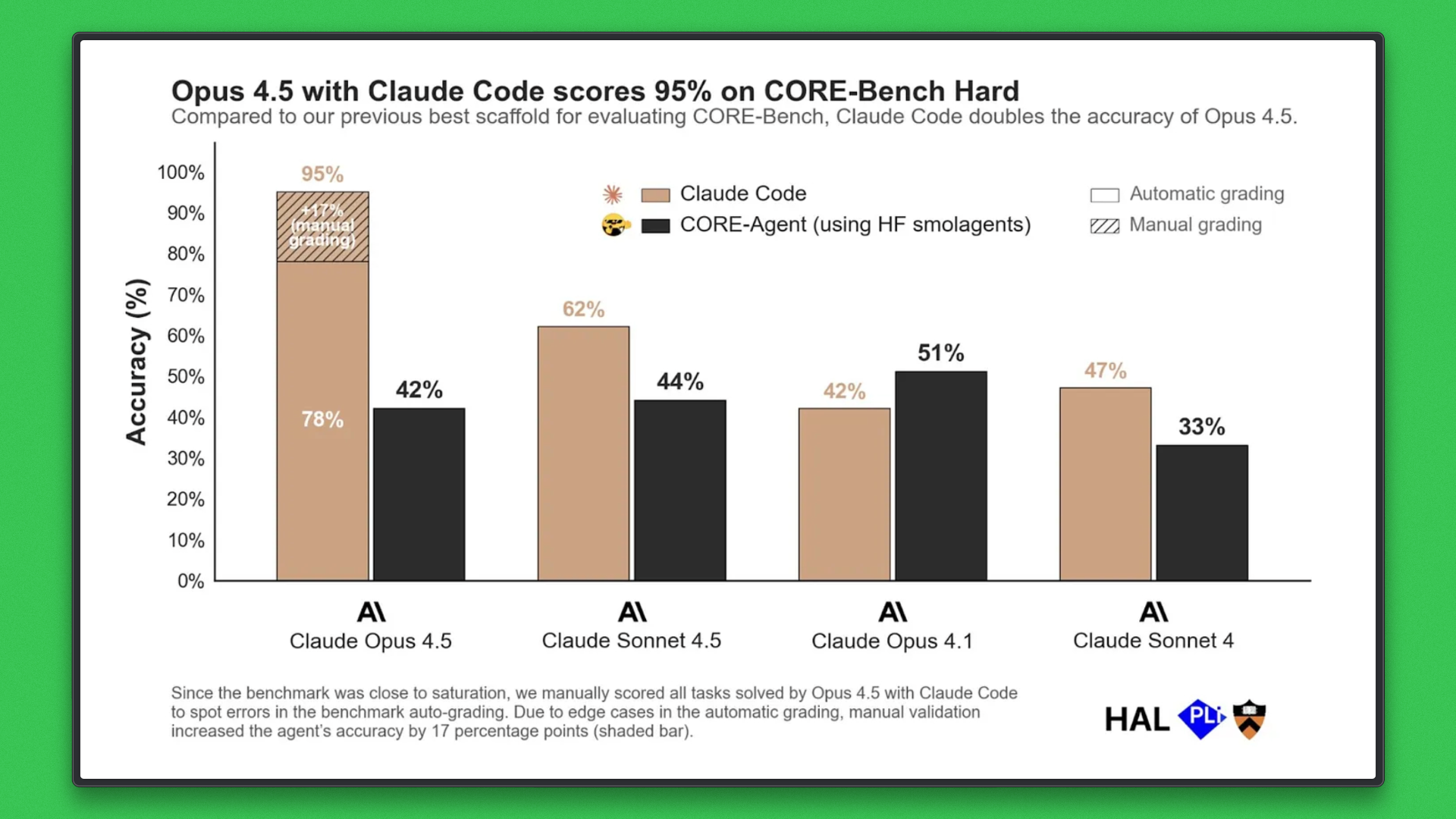

A major reason for that consistency is the model stack behind it. Claude Code is optimized around Anthropic’s Claude models, including Claude Opus 4.5, Claude Sonnet 4.5, Claude Opus 4.1, and Claude Sonnet 4. These models are designed for deep reasoning and extended context, which becomes especially important when an agent is working across many files.

The benchmark results reinforce this. When paired with Claude Code, Claude Opus 4.5 achieved 95% accuracy on CORE Bench Hard. Without manual validation, the same setup reached 78%; manual review added a further 17 percentage points by catching edge cases that automated grading missed. Other agent frameworks using similar models typically landed in the low 40% range.

The Core Problem With Claude Code

The same tight integration that helps Claude Code perform so well also defines its main limitations. Claude Code is designed as a managed and opinionated system that prioritizes reliability and strong defaults. This approach works well initially, but can become constraining as teams scale usage or require deeper control.

- Managed model integration: Claude Code is optimized around the Claude model family and officially supports configuration across multiple Claude variants. It can also be run against local or third-party models using Anthropic-compatible providers, but this relies on compatibility layers rather than first-class, provider-agnostic support. Model routing and optimization remain largely abstracted away from the developer.

- Pricing and cost control: Claude Code primarily operates on a subscription model, which simplifies budgeting for predictable usage. Although free or low-cost setups are possible by routing requests through local models, these configurations sit outside the core product experience and require additional external tooling and monitoring. Native mechanisms for per-task cost optimization are limited.

- Limited agent customization: The agent provides strong built-in planning and execution flows, but offers limited options for deeply customizing how tasks are planned, executed, or validated. Teams often need to adapt their workflows to the agent rather than tailoring the agent to their workflows.

- Constrained extensibility: Claude Code does not expose its internal agent loop as open source. While integrations are possible at the tooling level, extending, auditing, or modifying the agent’s core logic is not straightforward.

- Centralized product direction: Model availability, supported workflows, and feature evolution are primarily shaped by a single vendor roadmap. This enables polish and cohesion, but reduces long-term flexibility for teams that want deeper ownership over their tooling.

These constraints are subtle at first. They become more apparent once Claude Code is embedded deeply into daily development workflows and used across a wide range of tasks.

Introducing OpenCode: An Open Source CLI Coding Agent

Those limitations in Claude Code raise a clear question. How can teams maintain the same level of capability while gaining greater control over models, cost, and long-term ownership?

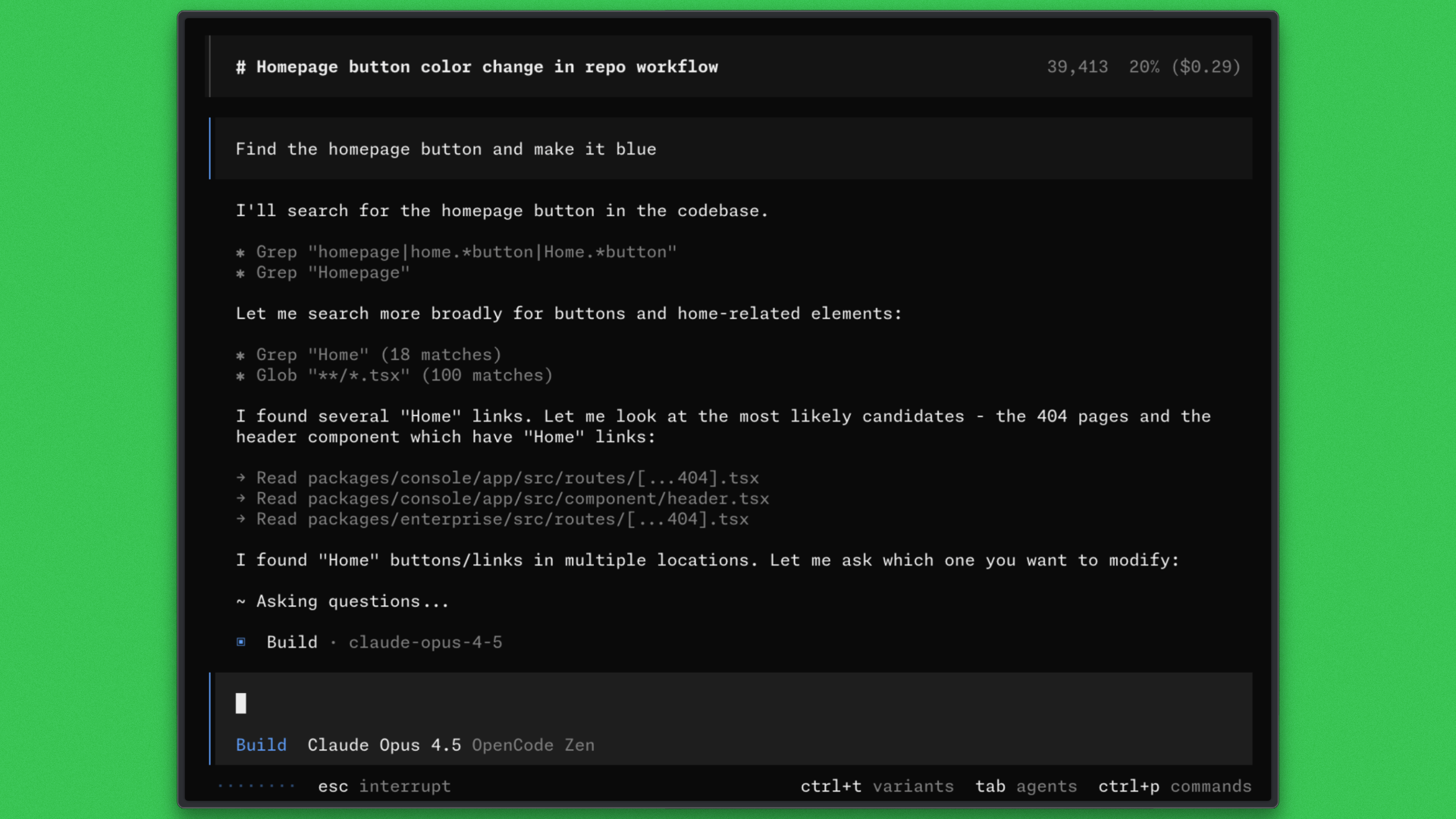

OpenCode addresses this by approaching the coding agent as foundational tooling. It runs directly in the terminal, works with the filesystem and shell, and fits into existing workflows without disrupting how developers already build software.

OpenCode supports a wide range of models, including Claude models, GPT-4 class models, Google Gemini, and local models through providers such as Ollama. This flexibility allows teams to adjust performance and cost based on the task at hand.

Because OpenCode is fully open source, its internal behavior is visible and extensible. Teams can review how the agent works, connect internal tools, and adapt it as requirements change, without being tied to a single vendor roadmap.

Getting started with the CLI is straightforward:

npm i -g opencode-ai

OpenCode focuses on flexibility and long-term control while remaining capable enough for serious development work.

Why Developers Are Sticking With OpenCode

The strongest signal behind OpenCode’s growth is not attention, but continued use across different teams and workflows.

- Active developer involvement: OpenCode has more than 640 contributors and is used by around 1.5 million developers per month, indicating sustained engagement from both individual developers and teams.

- Works across existing environments: OpenCode can be used from the terminal, inside IDEs, or through a desktop application, allowing teams to adopt it without changing how they already work.

- Agent features that support real workflows: The agent automatically loads relevant language servers, supports multiple parallel sessions on the same project, and allows sessions to be shared for review or debugging.

- Integration with familiar tooling: Developers can connect OpenCode to existing tools such as GitHub Copilot accounts, reducing setup friction and making it easier to fit into established workflows.

- Broad model support by design: OpenCode works with more than 75 model providers, including local models, which gives teams flexibility around cost, performance, and deployment choices.

Feature by Feature Comparison

The differences between Claude Code and OpenCode become clearer when looking at how common development needs are handled in practice. The table below focuses on behavior, control, and extensibility rather than surface-level features.

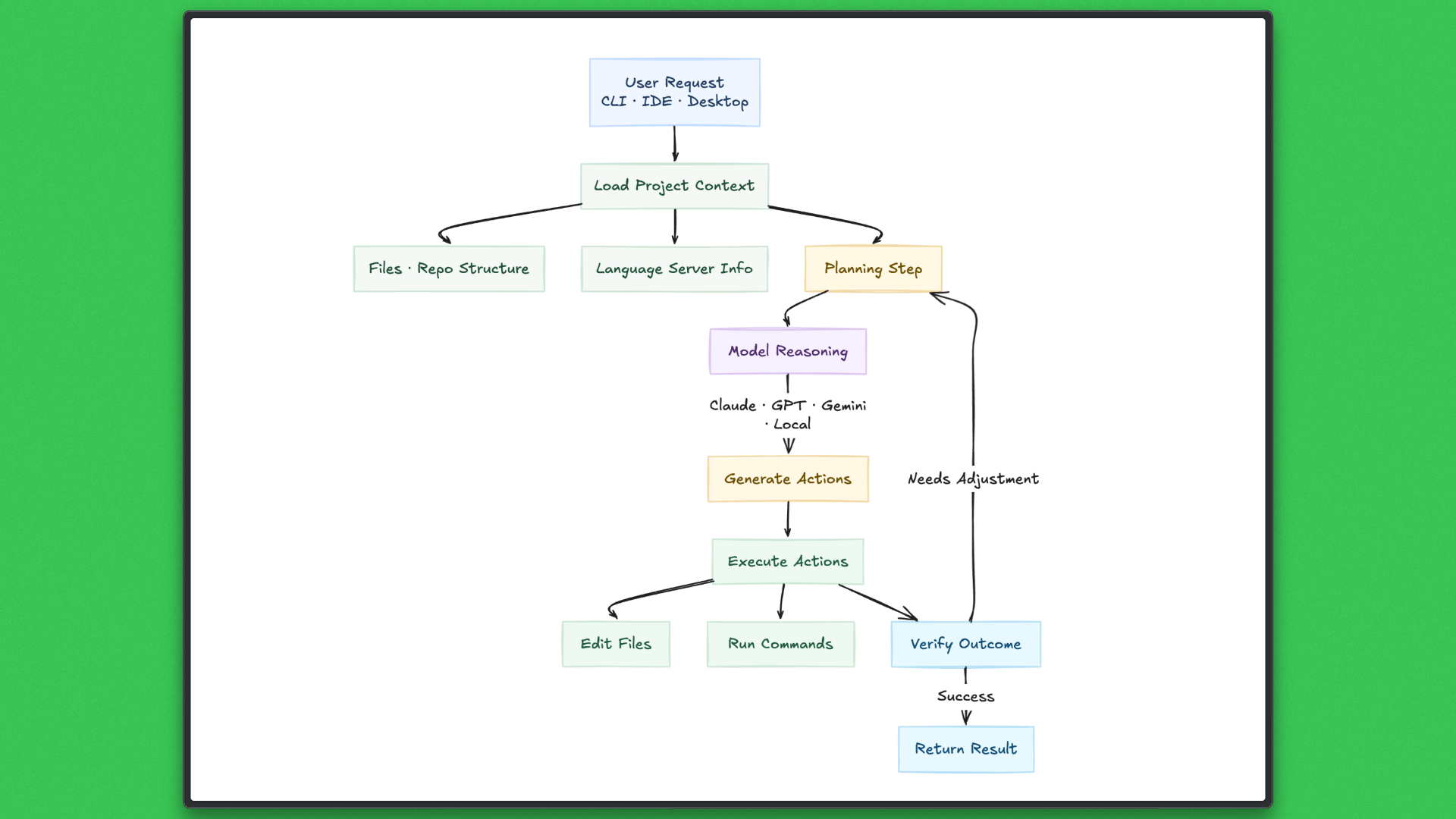

Architecture Deep Dive: How OpenCode Works

The way OpenCode behaves in practice is a direct result of its agent's design. Most of the flexibility developers notice comes from architectural choices made early, not from surface-level features.

Execution happens through direct interaction with the filesystem and shell. OpenCode runs commands, edits files, and inspects outputs using the same mechanisms developers use themselves. This keeps behavior predictable and avoids hidden abstractions.
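To make the idea concrete, here is a minimal sketch of an edit-then-verify step built only on ordinary file and process APIs. It is not OpenCode’s actual implementation; the helper names `run_command` and `edit_file` are hypothetical and exist only for illustration.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_command(args: list[str], cwd: Path) -> tuple[int, str]:
    """Run a command the way a developer would and capture what it printed."""
    result = subprocess.run(args, cwd=cwd, capture_output=True, text=True)
    return result.returncode, result.stdout + result.stderr


def edit_file(path: Path, old: str, new: str) -> bool:
    """Apply a plain text replacement and report whether anything changed."""
    text = path.read_text()
    if old not in text:
        return False
    path.write_text(text.replace(old, new))
    return True


# Demo in a throwaway directory: fix a typo, then rerun the script to verify.
with tempfile.TemporaryDirectory() as tmp:
    workdir = Path(tmp)
    script = workdir / "hello.py"
    script.write_text("print('helo')\n")

    edited = edit_file(script, "helo", "hello")
    code, output = run_command([sys.executable, "hello.py"], workdir)
    print(edited, code, output.strip())
```

Because every step is a normal file write or process call, the result can be checked with the same tools a developer would use by hand.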

Context handling is another central part of the design. OpenCode loads project structure, language server information, and recent changes so the agent can work across multiple files without losing track of earlier steps. This matters most during longer sessions where tasks span many iterations.

Finally, the agent logic is decoupled from the model provider. Model calls are interchangeable components, which allows OpenCode to adapt to different models without changing how it plans or executes work. This separation is what enables flexibility across environments and workloads.
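The separation described above can be sketched as a small interface that the agent depends on, with providers plugged in behind it. This is an illustrative sketch only: `ModelProvider`, `EchoProvider`, and `Agent` are hypothetical names, and the stand-in provider echoes its input so the example runs offline.

```python
from dataclasses import dataclass
from typing import Protocol


class ModelProvider(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoProvider:
    """Stand-in for a real model backend; echoes so the sketch runs offline."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] plan for: {prompt}"


class Agent:
    """Agent logic that depends only on the ModelProvider interface."""

    def __init__(self, provider: ModelProvider) -> None:
        self.provider = provider

    def plan(self, task: str) -> str:
        # The planning code is identical whichever provider is plugged in.
        return self.provider.complete(f"Break this task into steps: {task}")


hosted = Agent(EchoProvider("hosted-model"))
local = Agent(EchoProvider("local-model"))
print(hosted.plan("rename a module"))
print(local.plan("rename a module"))
```

Swapping the provider changes which backend answers, while `Agent.plan` stays untouched.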

Head-to-Head: OpenCode vs Claude Code on Real-World Tasks

So far, the comparison has focused on design. To see how those choices play out, it helps to compare how both tools handle the same real development tasks.

The goal is to look beyond speed or polish. Both OpenCode and Claude Code are capable. What matters is how they work, how much control they give the developer, and how they behave once tasks involve real context and multiple steps.

The following examples focus on workflow and decision-making during the task.

Task 1: Large-Scale Multi-File Refactoring

Task:

Refactor an existing service that spans multiple files by reorganizing modules, updating shared interfaces, and resolving resulting breakages.

Goal:

Evaluate how each agent understands project structure, sequences dependent changes, and applies updates consistently across a real codebase.

Example setup

For testing, you can use an Express-based service like the repository here, which includes routes, controllers, services, and shared utilities spread across multiple files.

You can use this exact prompt for both OpenCode and Claude Code:

Refactor this project to introduce a domain based structure.

Move all user related logic into src/modules/user/ with separate folders for routes, controllers, and services.

Update all imports accordingly and ensure the application still runs.
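As a rough picture of what the prompt asks for, the move can be expressed as a path mapping. The sketch below assumes a layer-based starting layout (`src/routes/`, `src/controllers/`, `src/services/`) and file names that contain the word "user"; both are assumptions for illustration, since the sample repository’s actual layout is not shown here.

```python
from pathlib import PurePosixPath

# Layers named in the prompt; files in other folders stay where they are.
LAYERS = {"routes", "controllers", "services"}


def target_path(old_path: str, domain: str = "user") -> str:
    """Map a layer-based path to the domain-based layout from the prompt."""
    path = PurePosixPath(old_path)
    parts = path.parts
    is_layer_file = len(parts) == 3 and parts[0] == "src" and parts[1] in LAYERS
    # Assumption: a file belongs to the domain if its name mentions it.
    if is_layer_file and domain in path.stem.lower():
        return str(PurePosixPath("src", "modules", domain, parts[1], path.name))
    return old_path


print(target_path("src/routes/userRoutes.js"))
print(target_path("src/utils/logger.js"))
```

Every import that points at a moved file then has to be rewritten to the new location, which is the part of the task that tests whether an agent tracks dependencies across files.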

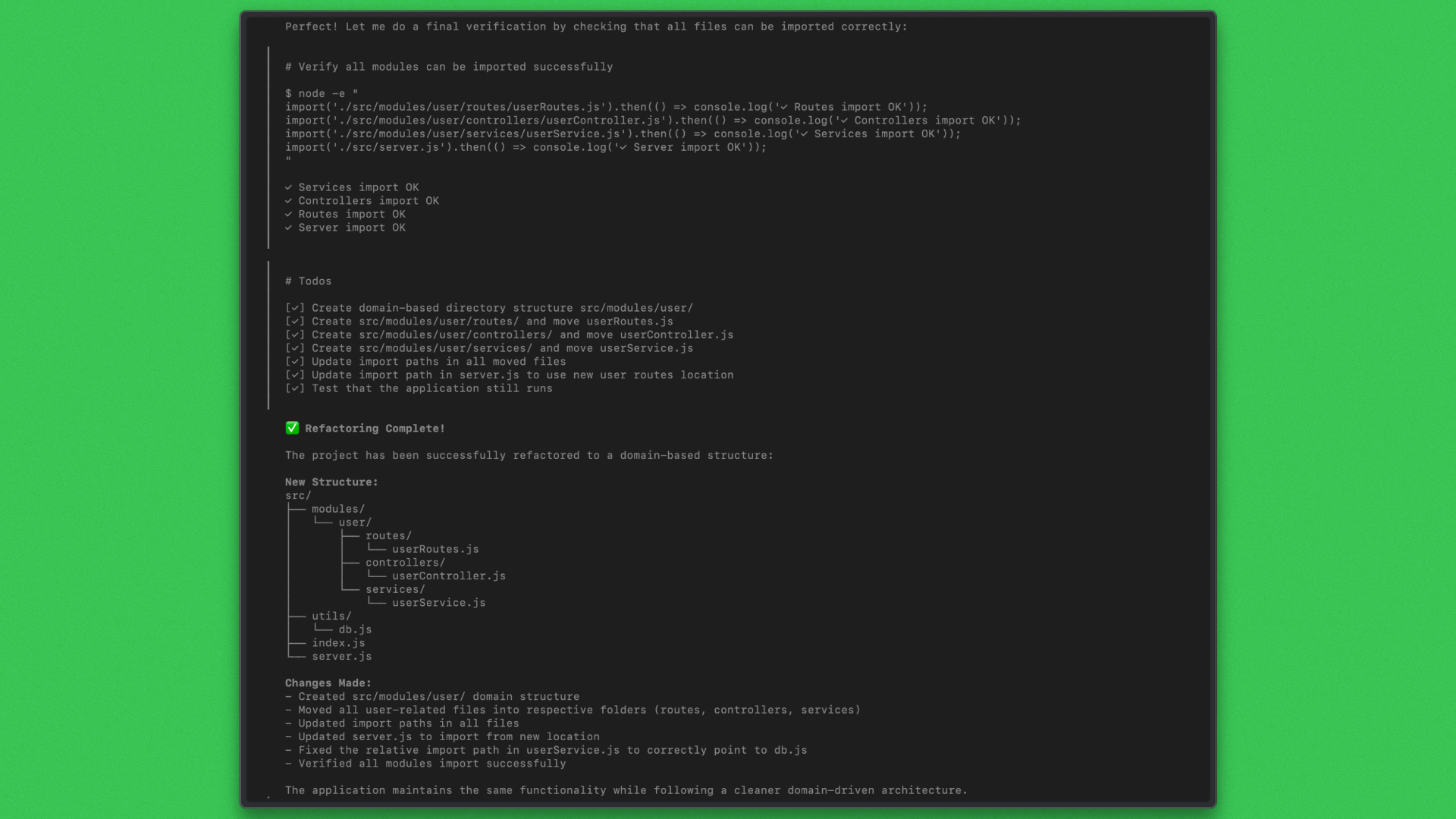

OpenCode’s Response:

Claude Code’s Response:

Task 1 Summary:

Both Claude Code and OpenCode successfully completed the multi-file refactor and preserved application behavior. Claude Code followed a structured, step-by-step approach with explicit planning and checks, prioritizing safety and correctness throughout the process.

OpenCode completed the refactor in a more continuous execution flow, relying on direct verification through imports and runtime checks. While both produced correct results, the difference was primarily in workflow style: Claude Code emphasized guarded execution, while OpenCode emphasized control and post-change validation.

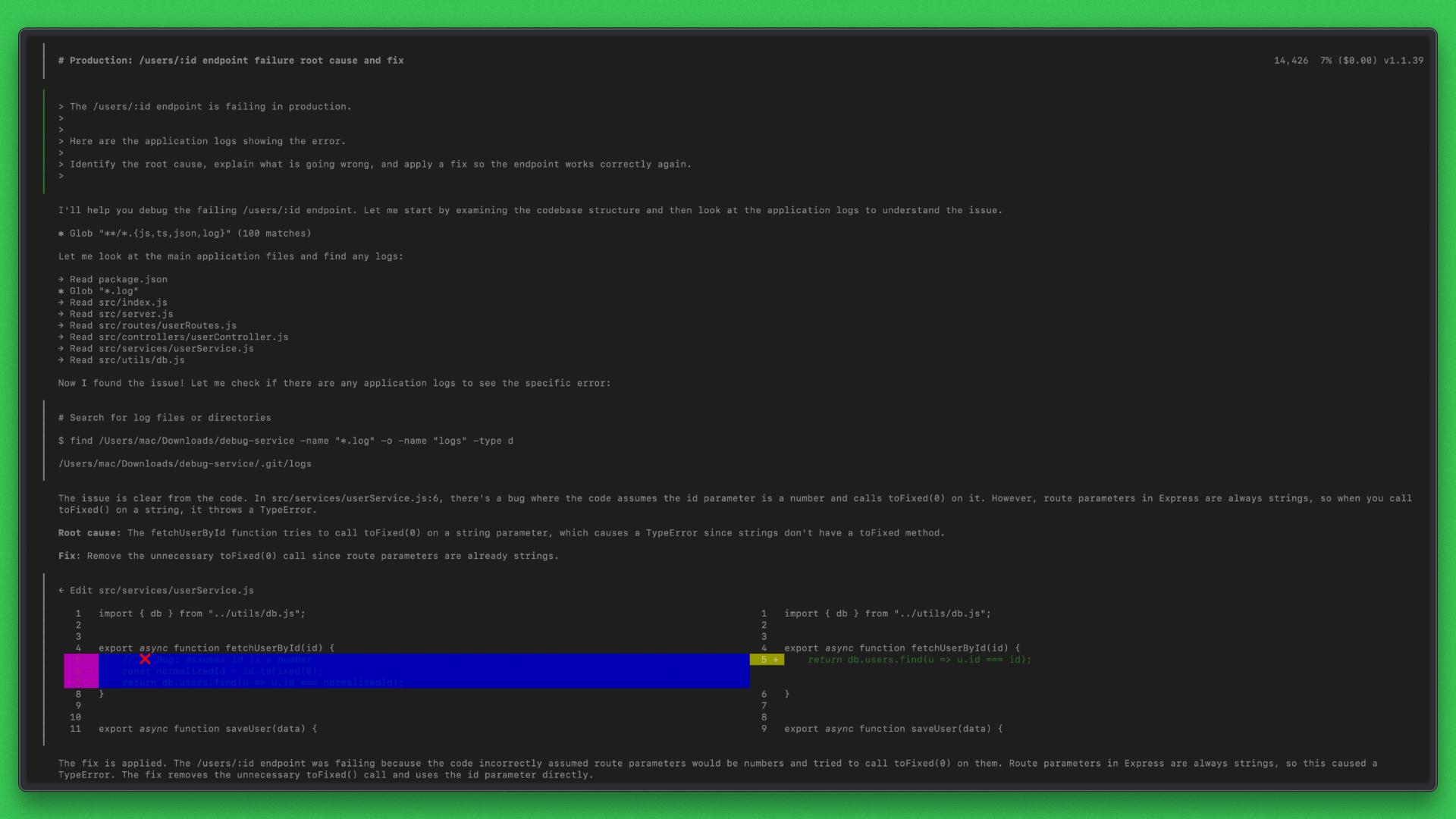

Task 2: Debugging a Production Issue From Logs

Task:

Investigate a failing API endpoint using application logs, identify the root cause, and apply a fix across the affected files.

Goal:

Evaluate how each agent reasons from logs, traces issues through the codebase, and iterates toward a correct fix without losing context.

Example setup

For testing, take the same Express-based sample repository used here, introduce a deliberate bug such as a broken database lookup, incorrect parameter handling, or a missing middleware, and include the corresponding error logs.

You can use this exact prompt for both OpenCode and Claude Code:

The /users/:id endpoint is failing in production.

Here are the application logs showing the error.

Identify the root cause, explain what is going wrong, and apply a fix so the endpoint works correctly again.

OpenCode’s Response:

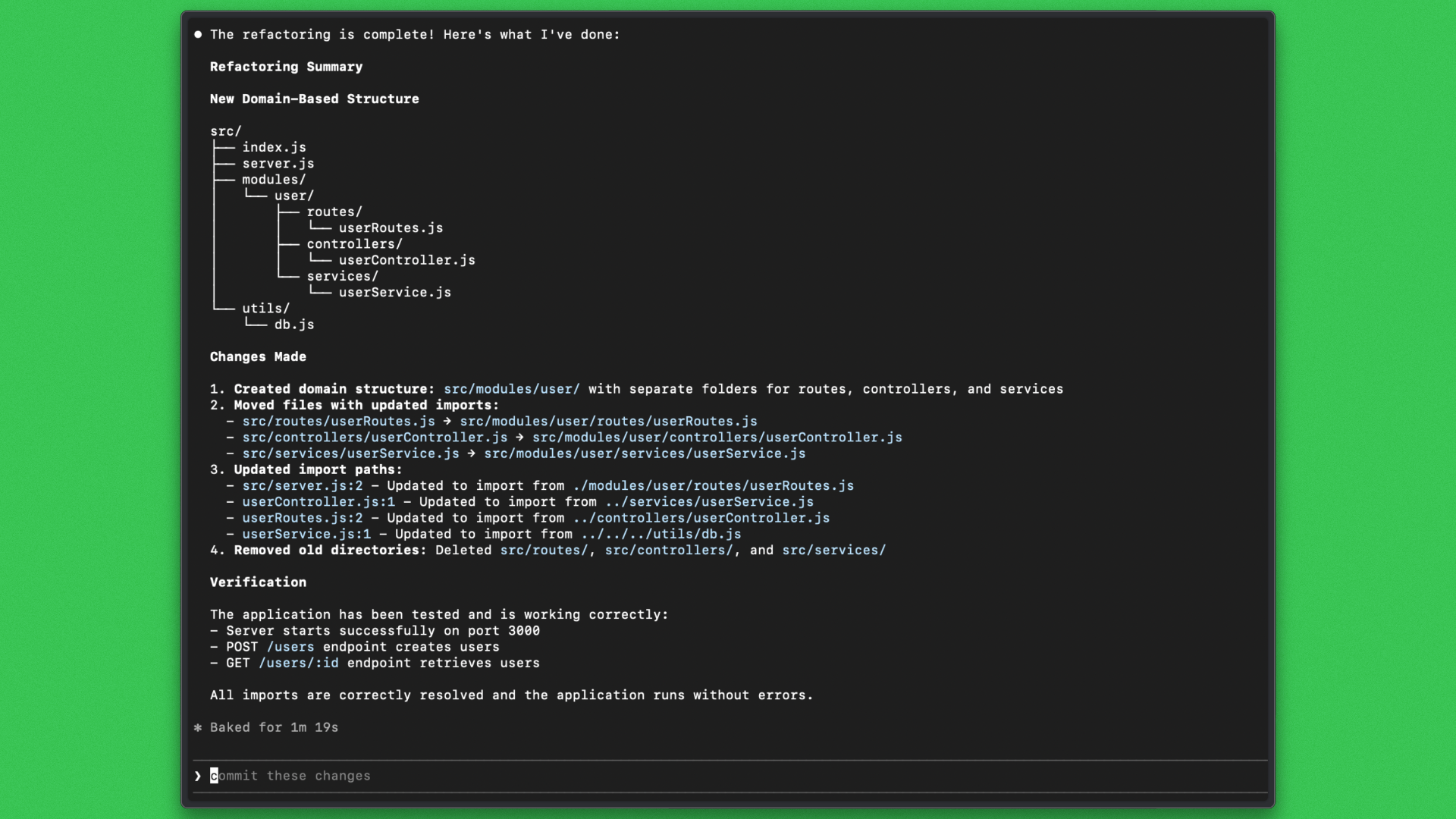

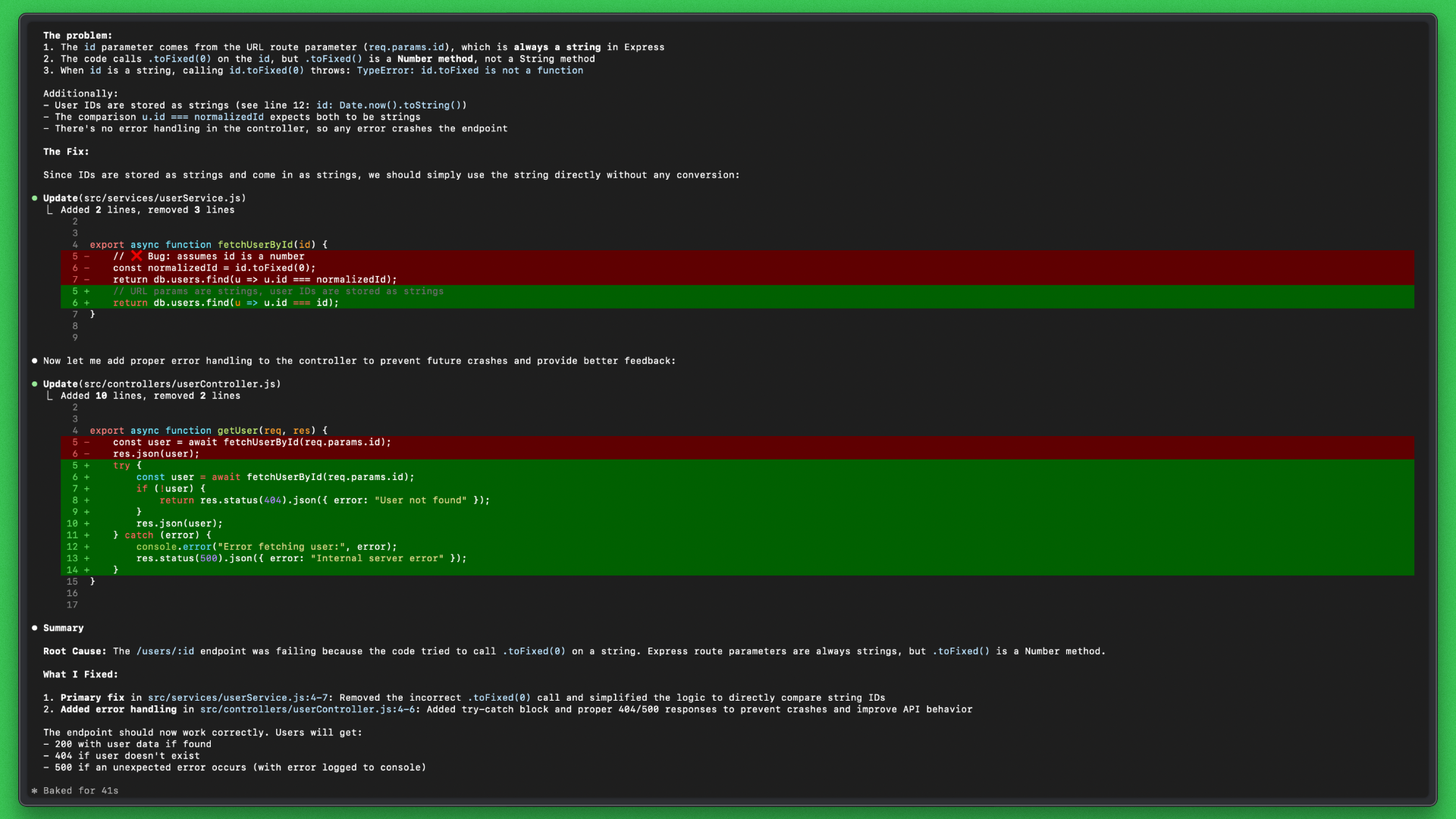

Claude Code’s Response:

Task 2 Summary:

Both Claude Code and OpenCode correctly identified the root cause of the production failure by tracing the error from logs to a type mismatch in the service layer. Each tool fixed the issue by removing the invalid numeric conversion and restoring correct string-based ID handling.

The difference was in scope and workflow. Claude Code applied a broader fix by adding defensive error handling to the controller, improving resilience beyond the immediate bug. OpenCode focused on a targeted correction at the source of the failure, keeping the change minimal and contained.
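The class of bug involved is easy to reproduce in isolation. The sketch below is a hypothetical reconstruction, not the code from the test repository: it assumes a store keyed by string IDs and a lookup that wrongly converts the route parameter to a number first.

```python
# Hypothetical in-memory store keyed by string IDs.
USERS = {"42": {"id": "42", "name": "Ada"}}


def find_user_buggy(user_id: str):
    # Bug: the ID is converted to a number before the lookup,
    # so the integer key never matches the string keys in the store.
    return USERS.get(int(user_id))


def find_user_fixed(user_id: str):
    # Fix: keep the ID as the string the store actually uses.
    return USERS.get(user_id)


print(find_user_buggy("42"))  # None
print(find_user_fixed("42"))  # {'id': '42', 'name': 'Ada'}
```

A targeted fix changes only the lookup, as in `find_user_fixed`. A broader fix would also make the caller handle a missing user explicitly, which is the kind of defensive handling described above for the controller.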

Final Understanding

Both OpenCode and Claude Code handled the refactor and debugging tasks correctly. Each understood the codebase, identified issues, and delivered working results. The difference was not in capability, but in how the work was carried out.

Claude Code followed a structured and guarded flow, with explicit planning and added safety checks. This made its behavior predictable and well-suited for situations where caution and clarity are priorities.

OpenCode took a more direct approach, focusing on the root cause and validating changes through execution. Its workflow felt faster and more continuous, with fewer built-in interruptions. When speed is a priority, OpenCode also allows teams to switch to faster or lighter models, adjusting performance without changing tools.

In practice, the choice comes down to workflow preference. Claude Code favors managed execution. OpenCode favors flexibility and control, including how quickly results are produced.

Cost Analysis: Subscription Versus Pay-Per-Use

Once AI agents are used regularly, cost becomes a practical concern rather than a theoretical one. The table below outlines the differences in pricing and token usage between Claude Code and OpenCode.

Claude Code offers predictable pricing through its managed subscription experience, which simplifies cost management for many teams. It can also be configured to run against local models via Anthropic-compatible providers such as Ollama, enabling low-cost or free usage outside the officially supported setup. OpenCode, by contrast, exposes token usage directly, making cost sensitive to model choice, context size, and usage frequency, and giving teams explicit control over how spend scales with workload.

That difference in cost visibility is what makes OpenCode a credible alternative. By separating the tool from the model and exposing real usage costs, OpenCode allows teams to tune performance and spend based on actual workload, which can be more practical over time than relying on a fixed subscription.
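When token usage is visible, the cost of a task reduces to simple arithmetic over input and output tokens. The sketch below uses made-up prices purely for illustration; `ModelPrice`, `task_cost`, and the per-million-token figures are assumptions, not real rates for any provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    """Hypothetical prices in USD per million tokens."""

    input_per_mtok: float
    output_per_mtok: float


def task_cost(price: ModelPrice, input_tokens: int, output_tokens: int) -> float:
    """Cost of one task: tokens times price, scaled from per-million rates."""
    total = input_tokens * price.input_per_mtok + output_tokens * price.output_per_mtok
    return total / 1_000_000


# Made-up price points for a larger and a smaller model.
large = ModelPrice(input_per_mtok=5.0, output_per_mtok=25.0)
small = ModelPrice(input_per_mtok=0.5, output_per_mtok=2.0)

# Same workload on both: 200k tokens of context read, 10k tokens written.
print(round(task_cost(large, 200_000, 10_000), 4))  # 1.25
print(round(task_cost(small, 200_000, 10_000), 4))  # 0.12
```

The same workload costs roughly ten times more on the larger model under these assumed prices, which is why model choice, context size, and usage frequency all show up directly in spend.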

Rather than competing on convenience alone, OpenCode positions itself as a flexible foundation for long-term use, where capability is maintained while cost and model decisions remain in the developer’s hands.

When OpenCode Is the Better Choice

OpenCode works best for teams that prioritize flexibility and control over a fully managed experience.

- Teams that run agents frequently and want clear visibility into how usage and cost scale over time, especially when refactoring and debugging are part of daily work.

- Workflows where task complexity varies, making it useful to adjust model choice based on the nature of the change rather than using the same model everywhere.

- Environments with infrastructure, privacy, or compliance requirements that benefit from running agents in controlled setups or using local models.

- Teams that value transparency and want to understand how the agent operates, extend it with internal tools, or align it with existing processes.

- Organizations planning long-term, where reducing dependency on a single vendor and maintaining flexibility around models and pricing is important.

OpenCode requires more deliberate configuration and monitoring. For teams willing to take on that responsibility, it offers a level of control and adaptability that makes it a credible alternative to Claude Code.
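One form that deliberate configuration can take is a routing rule that picks a model tier from the shape of the task. The function below is a minimal sketch under stated assumptions: the thresholds and the tier names are hypothetical, and a real setup would map them to whatever models a team has configured.

```python
def pick_model(files_touched: int, needs_design_reasoning: bool) -> str:
    """Return a hypothetical model tier for a task."""
    # Wide or design-heavy changes go to the most capable tier.
    if needs_design_reasoning or files_touched > 10:
        return "large-hosted-model"
    # Ordinary multi-file edits use a cheaper middle tier.
    if files_touched > 1:
        return "mid-tier-model"
    # Single-file edits can run on a local model.
    return "local-model"


print(pick_model(files_touched=1, needs_design_reasoning=False))
print(pick_model(files_touched=4, needs_design_reasoning=False))
print(pick_model(files_touched=2, needs_design_reasoning=True))
```

Keeping the rule in one small function makes it easy to review and to adjust as usage data accumulates.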

Final Verdict

Claude Code offers a smooth and well-managed experience. It works especially well for developers who value strong defaults, minimal setup, and predictable behavior, with most decisions handled by the tool itself.

OpenCode is built for a different set of priorities. It gives teams direct control over model choice, execution environment, and cost, while keeping the core agent logic stable. This makes it easier to adapt as workloads change or as new models and pricing options emerge.

The difference is less about raw capability and more about ownership. Claude Code emphasizes managed simplicity. OpenCode keeps key decisions visible and adjustable.

For teams that rely on AI agents regularly and care about long-term flexibility, OpenCode stands out as a practical and credible alternative to Claude Code.
