The AI Agent Stack in 2026: Frameworks, Runtimes, and Production Tools

TL;DR

- AI agents are now embedded across software development workflows, supporting coding, testing, debugging, data analysis, and release coordination.

- Agent behavior and control flow are commonly handled using orchestration frameworks such as LangChain, LangGraph, and Google ADK.

- Data-intensive and document-driven agents rely on execution and ingestion platforms, such as Tensorlake, to support durable workflows and scalable processing.

- Memory and retrieval systems such as Pinecone, Weaviate, and Zep help agents stay grounded and consistent over time.

- Observability and evaluation tools, including Weights & Biases, Arize, and TruLens, are essential for monitoring behavior, detecting failures, and maintaining reliability in production.

AI agents are now embedded across modern software development workflows. They assist with writing and reviewing code, generating tests, analyzing logs, coordinating releases, and handling data-heavy tasks. In many teams, these systems operate quietly in the background, supporting developers in everyday engineering work rather than standing out as separate tools.

Recent industry data indicate that more than 97% of organizations are using or evaluating AI in software development workflows, underscoring the extent to which these technologies are embedded in everyday engineering practices.

Instead of interacting with a single assistant, developers increasingly work alongside systems that reason across multiple steps, access tools, and maintain contextual awareness over time.

This article focuses on the categories of tools that support coordination, data access, execution, and monitoring, and explains how they enable AI agents to function reliably in real development environments in 2026.

How AI Agents Became Part of the Everyday Development Workflow

AI agents are increasingly integrated into everyday development work. Many teams now rely on them during planning, where they help break down requirements and draft technical outlines, and during coding, where they assist with boilerplate generation, refactoring, and contextual explanations.

Their use extends into testing, debugging, and data-intensive tasks. Agents generate and validate test cases, analyze logs, summarize incidents, and automate repetitive analysis that previously required manual effort. These capabilities allow teams to move faster while keeping existing workflows largely unchanged.

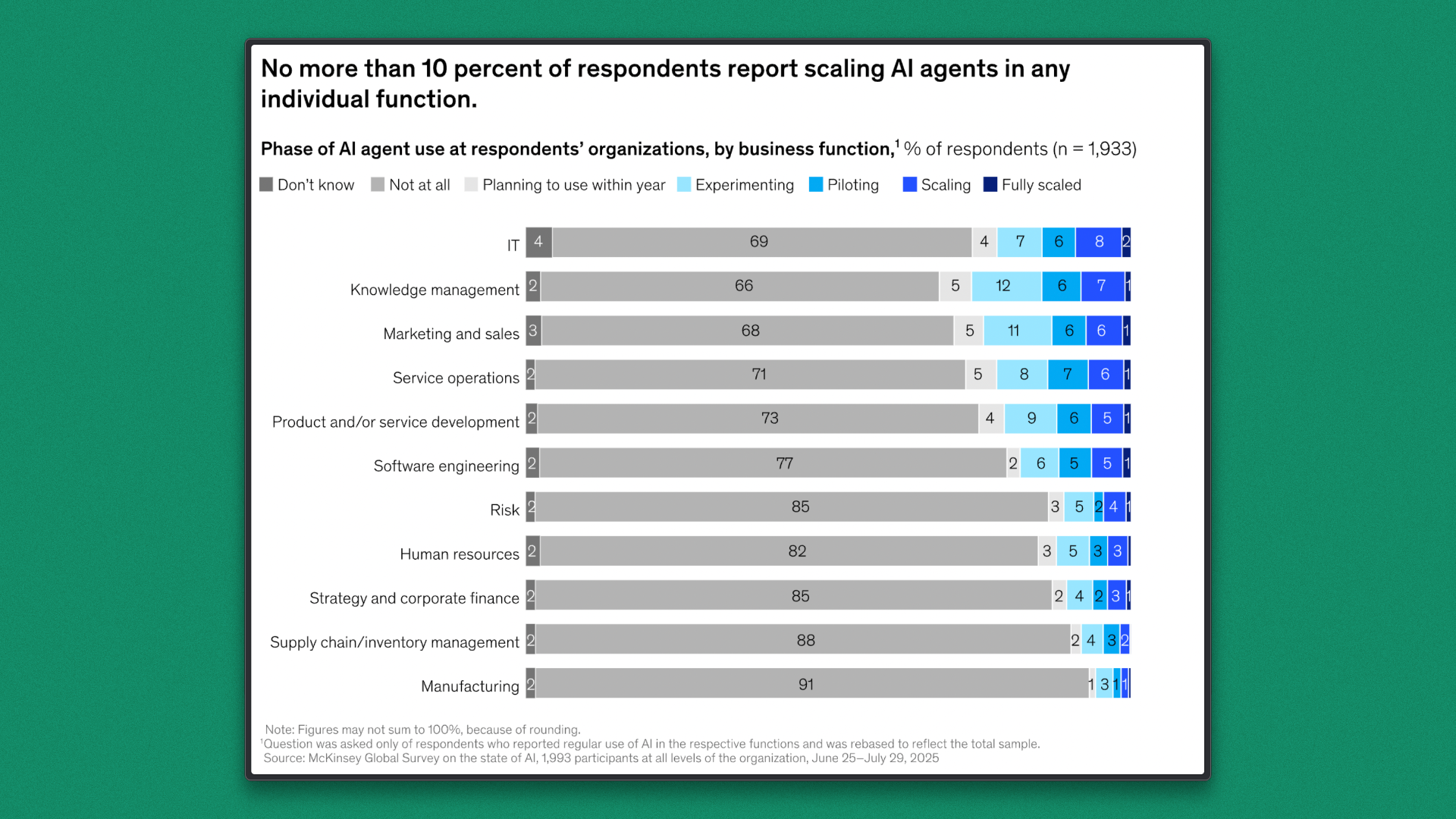

This growing integration is reflected in adoption patterns. According to a McKinsey 2025 report, approximately 23% of organizations report scaling AI agents in at least one business function, while another 39% are actively experimenting.

The AI Agent Tool Landscape in 2026

Building effective AI agents in 2026 depends on selecting the right set of supporting tools. Most real-world implementations rely on a small number of well-defined categories that handle coordination, data access, execution, model interaction, and monitoring.

Each category below is illustrated with commonly used tools that show how these capabilities are implemented in practice.

1. Agentic Frameworks and Orchestration

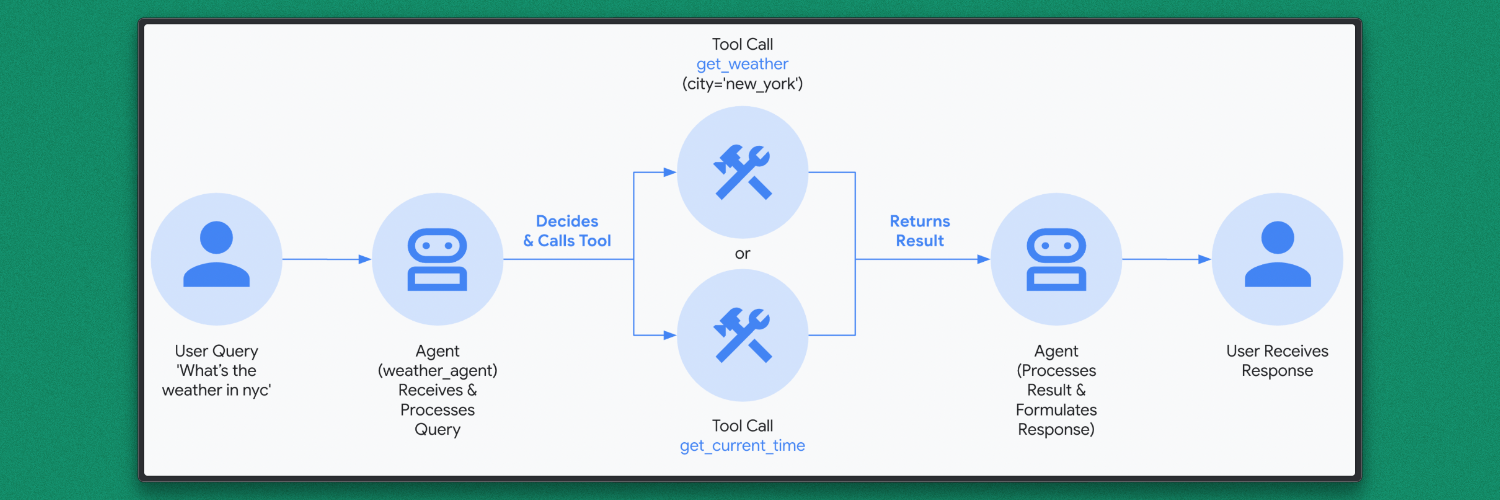

This category defines how AI agents plan tasks, manage control flow, invoke tools, and handle multi-step execution. Most real-world systems rely on one primary orchestration layer rather than multiple overlapping frameworks.

Commonly used frameworks in this category include:

1. LangChain + LangGraph

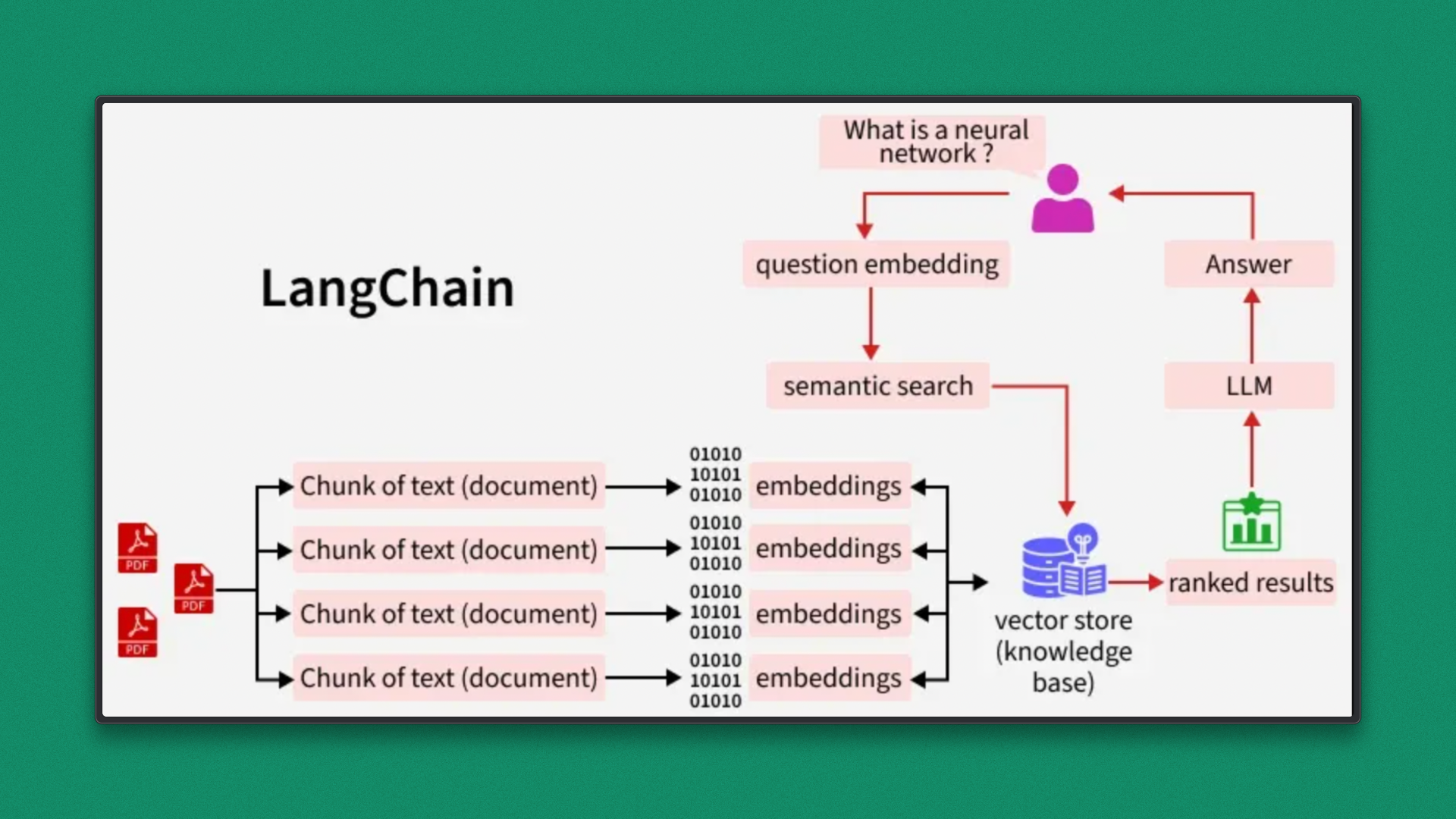

LangChain and LangGraph are typically used together as a single orchestration layer for building agent-driven systems.

LangChain focuses on the interaction surface between language models and external capabilities. It provides abstractions for prompt templates, tool calling, model routing, and lightweight memory, making it effective for wiring models to APIs, databases, search tools, and code execution environments.

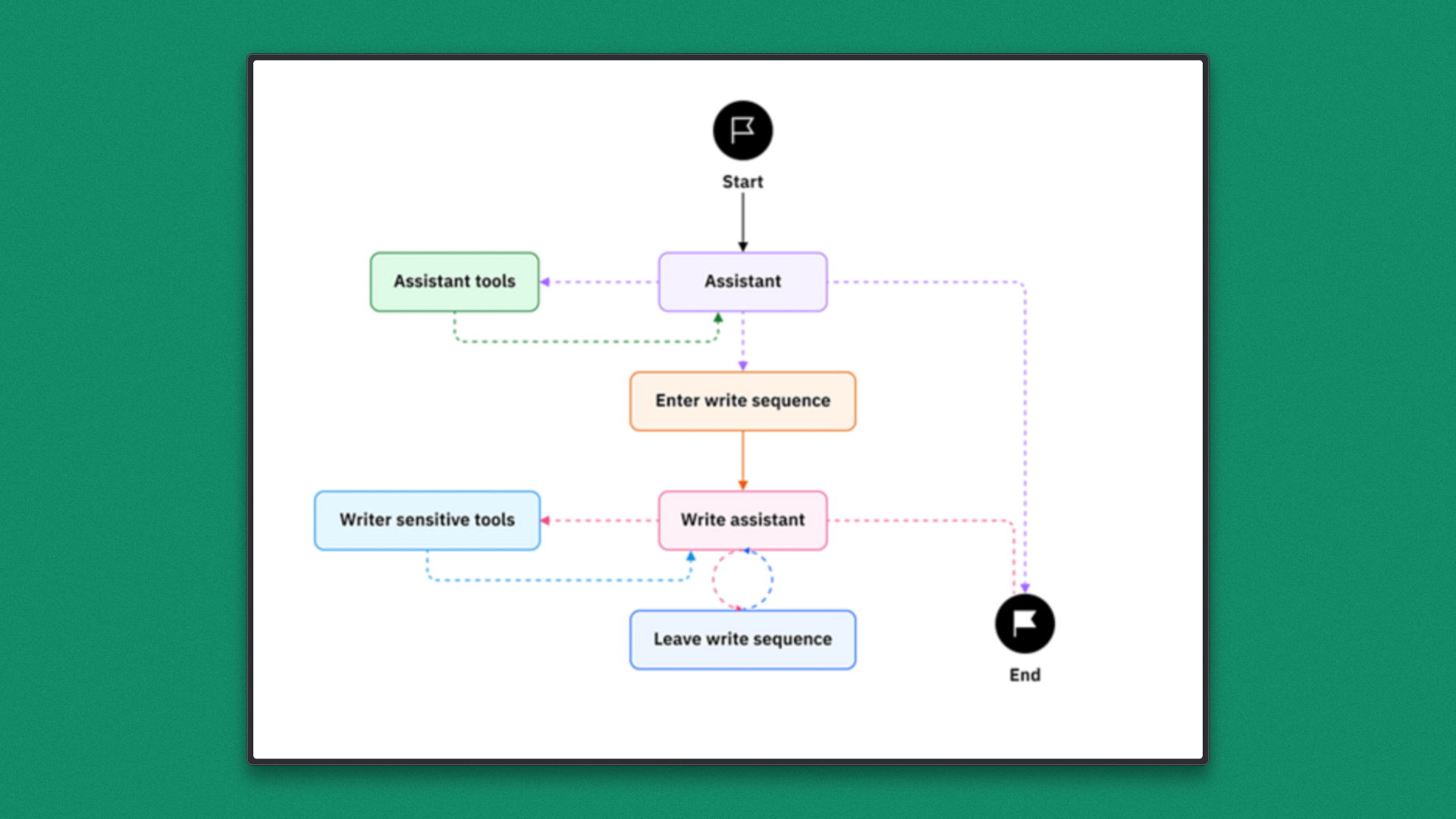

LangGraph addresses a different set of concerns. It introduces explicit state and control flow, allowing agent behavior to be represented as graphs rather than linear chains.

This enables modeling of branching decisions, retries, checkpoints, and pause-resume execution. LangGraph is particularly useful for long-running workflows, agents that depend on intermediate results, or scenarios that require human review before proceeding.

Together, this pairing supports agents that need both flexibility and structure. LangChain handles how the agent interacts with models and tools, while LangGraph governs how those interactions unfold over time. This combination is commonly used for production agents that operate across multiple steps, rely on tool outputs, and require predictable execution behavior.
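To make the "graph rather than linear chain" idea concrete, here is a minimal, library-free sketch of state-driven control flow with a retry branch. It does not use LangGraph's actual API; `AgentState`, `generate`, `review`, and `run_graph` are hypothetical names chosen for illustration only.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class AgentState:
    """Shared state passed between nodes (hypothetical shape)."""
    attempts: int = 0
    draft: str = ""
    approved: bool = False
    trace: List[str] = field(default_factory=list)


# A node updates the state and returns the next node's name, or None to stop.
Node = Callable[[AgentState], Optional[str]]


def generate(state: AgentState) -> Optional[str]:
    state.attempts += 1
    state.draft = f"draft v{state.attempts}"
    state.trace.append("generate")
    return "review"


def review(state: AgentState) -> Optional[str]:
    state.trace.append("review")
    # Stand-in for a real quality check: accept from the second attempt on.
    state.approved = state.attempts >= 2
    # Branch: stop when approved or out of retries, otherwise loop back.
    if state.approved or state.attempts >= 3:
        return None
    return "generate"


def run_graph(nodes: Dict[str, Node], start: str, state: AgentState,
              max_steps: int = 10) -> AgentState:
    current: Optional[str] = start
    steps = 0
    # max_steps guards against a graph that never terminates.
    while current is not None and steps < max_steps:
        current = nodes[current](state)
        steps += 1
    return state


final = run_graph({"generate": generate, "review": review},
                  "generate", AgentState())
print(final.trace, final.draft, final.approved)
```

Because every node reads and writes one explicit state object, the same loop could persist that state between steps, which is the property that makes checkpoints and pause-resume execution possible in a real orchestration layer.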

2. Google ADK (Agent Development Kit)

Google ADK supports the development of structured, production-grade AI agents within the Google ecosystem. It provides abstractions for defining agent behavior, tool usage, and multi-step workflows, with an emphasis on integration with existing services and infrastructure.

Google ADK emphasizes controlled execution and composability. Agents built with ADK typically operate within clearly defined boundaries, which suits enterprise and platform-centric workflows where reliability and governance are important.

2. Execution and Data Infrastructure

This category underpins how AI agents access data, execute tasks, and operate reliably at scale. While agentic frameworks define reasoning and control flow, data and execution infrastructure determine whether those agents can operate on large datasets, execute complex operations, and respond within practical latency and cost constraints.

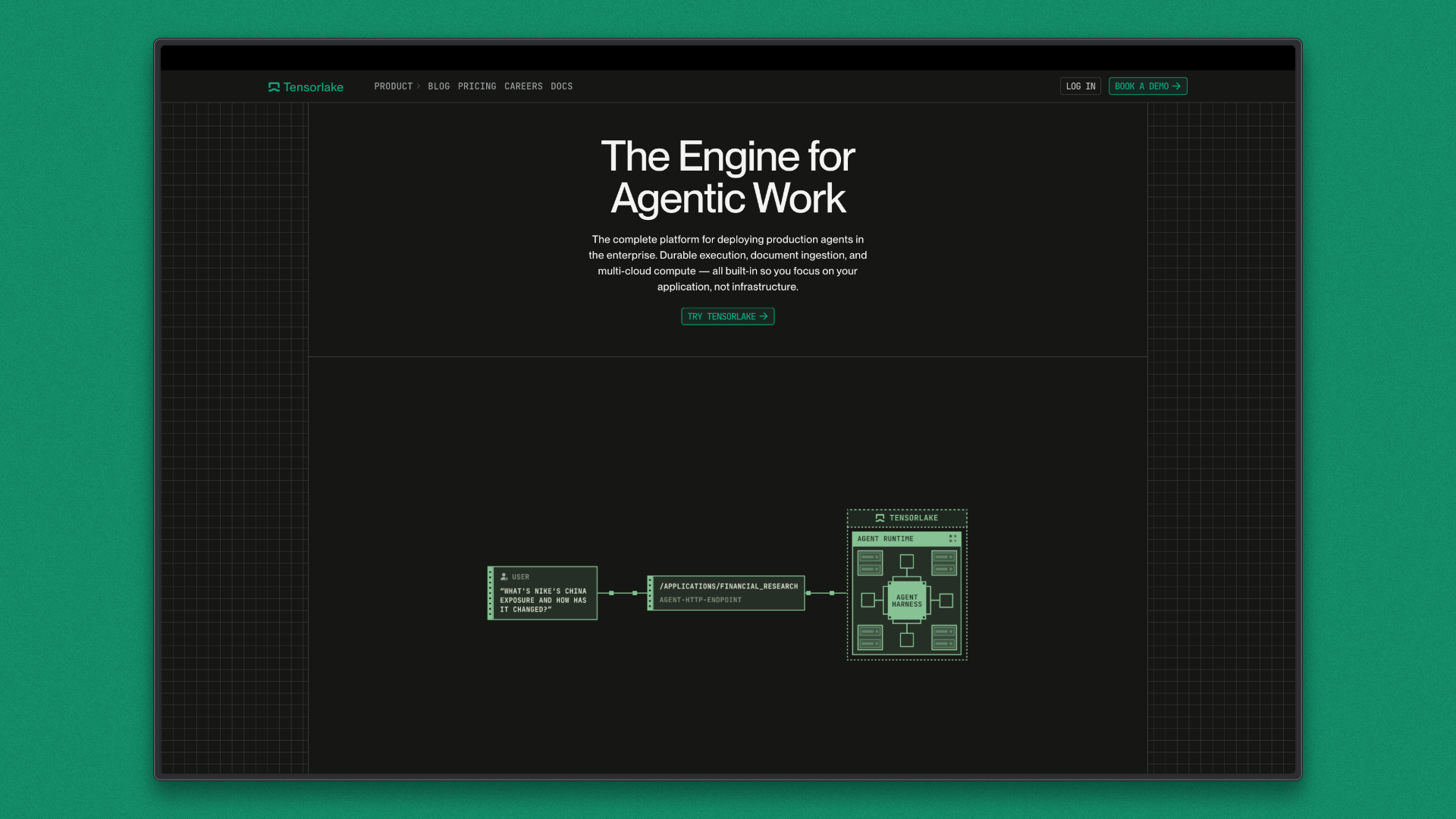

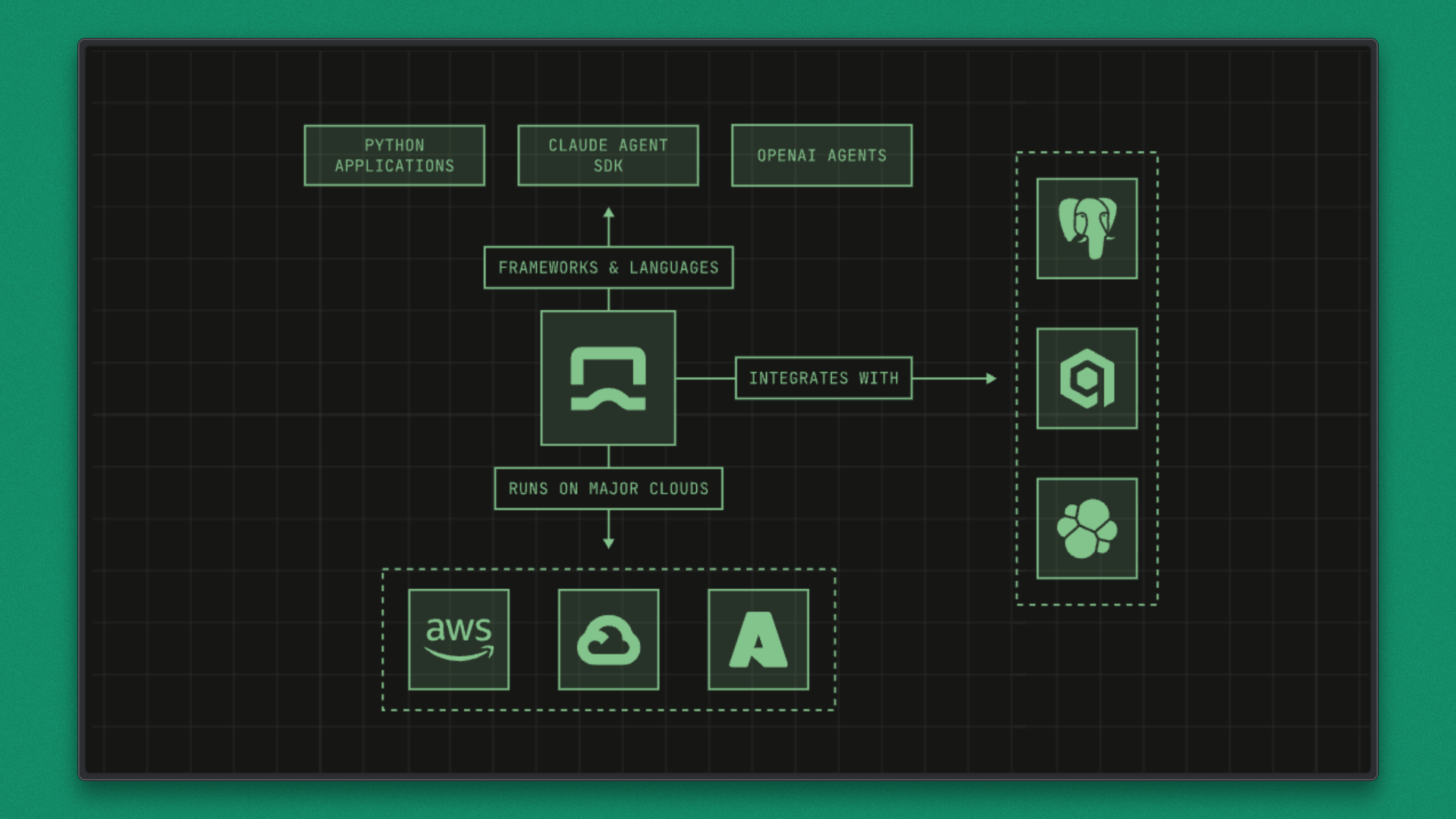

1. Tensorlake

Tensorlake is a unified data and execution platform built for AI and agent-driven workflows that rely heavily on documents and unstructured data. It is designed for production environments where ingestion accuracy, execution durability, and traceability are essential.

Rather than serving as a lightweight preprocessing step, Tensorlake supports end-to-end data handling for agent-based systems.

Core capabilities include:

- A durable, serverless execution environment for long-running agentic workflows, with built-in state checkpointing and autoscaling to support retries, parallelism, and multi-step processing.

- A Document Ingestion API that converts PDFs, images, spreadsheets, and scanned documents into structured formats such as JSON or Markdown. This enables agents to reason over consistent, machine-readable representations instead of raw files.

- Layout-aware parsing and vision language models (VLMs) that capture document structure, including tables, figures, headers, and multi-column layouts, reducing noise and improving extraction quality.

- Document citations and field tracing that link extracted fields and outputs back to exact locations in the source documents, supporting auditability and compliance-driven workflows.

- Secure code sandboxes that allow agents to safely execute auto-generated or untrusted code for data exploration, transformation, or validation without exposing underlying systems.

- Scalable serverless compute designed for high-throughput parallel processing, enabling large document collections and data pipelines to be processed efficiently and reused across workflows.

Together, these capabilities allow Tensorlake to function as the data and runtime foundation for agentic applications, supporting reliable execution, structured outputs, and traceable results at scale.
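The durable-execution idea in the list above (state checkpointing so a workflow can resume after a failure) can be sketched in a few lines. This is an assumption-laden illustration using only the Python standard library, not Tensorlake's API; `run_with_checkpoints`, the step functions, and the JSON checkpoint file are all hypothetical.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, Dict, List, Tuple

# Each step receives the results of earlier steps and returns its own result.
Step = Tuple[str, Callable[[Dict[str, str]], str]]


def run_with_checkpoints(steps: List[Step], checkpoint_path: Path) -> Dict[str, str]:
    # Load results saved by a previous (possibly crashed) run, if any.
    done: Dict[str, str] = (
        json.loads(checkpoint_path.read_text()) if checkpoint_path.exists() else {}
    )
    for name, fn in steps:
        if name in done:
            continue  # already completed; do not redo the work
        done[name] = fn(done)
        # Persist after every step so a crash loses at most one step.
        checkpoint_path.write_text(json.dumps(done))
    return done


calls = {"parse": 0, "extract": 0}
fail_once = {"armed": True}


def parse(done: Dict[str, str]) -> str:
    calls["parse"] += 1
    return "parsed text"


def extract(done: Dict[str, str]) -> str:
    calls["extract"] += 1
    if fail_once["armed"]:
        fail_once["armed"] = False
        raise RuntimeError("simulated transient failure")
    return done["parse"].upper()


steps: List[Step] = [("parse", parse), ("extract", extract)]
with tempfile.TemporaryDirectory() as tmp:
    ckpt = Path(tmp) / "state.json"
    try:
        run_with_checkpoints(steps, ckpt)
    except RuntimeError:
        pass  # the first run dies mid-workflow
    result = run_with_checkpoints(steps, ckpt)  # the second run resumes

print(result, calls)
```

The second run skips `parse` because its result was already checkpointed, so only the failed step is retried.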

3. Memory, Retrieval, and Knowledge Systems

This category defines how AI agents store information, retrieve relevant context, and maintain continuity across interactions. These systems directly influence how grounded, consistent, and reliable an agent feels during multi-step and long-running workflows.

Commonly used tools in this category include:

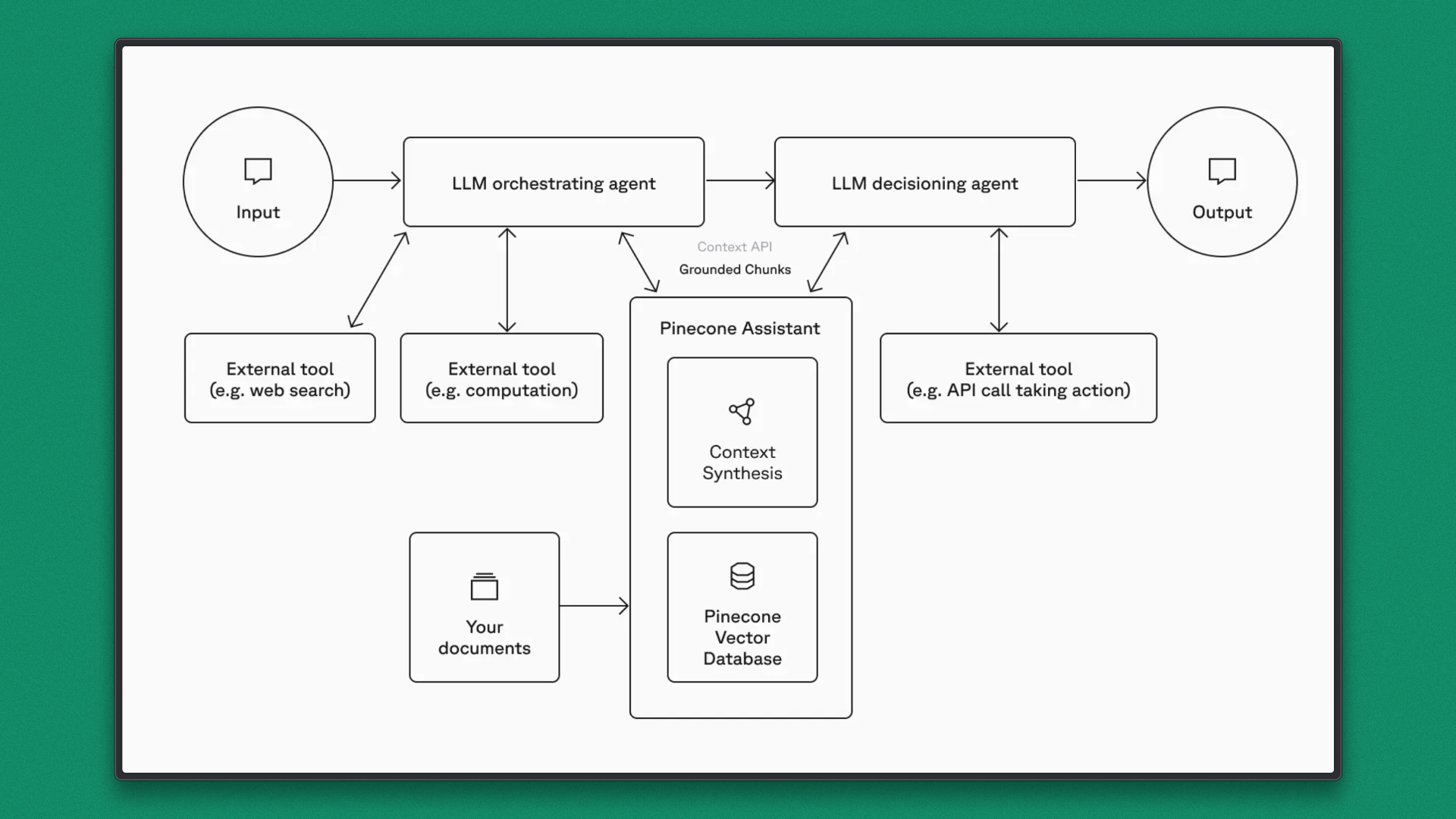

1. Pinecone

Pinecone is widely used for semantic retrieval in agent-based systems. It enables fast similarity search over high-dimensional embeddings, allowing agents to ground responses in documents, logs, or prior conversations. Its managed nature removes much of the operational overhead associated with scaling vector search.

In practice, Pinecone is often used when low latency and predictable performance are required. Agent workflows that depend on frequent retrieval across large datasets benefit from its indexing and query optimizations, particularly in production environments with variable traffic.
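As a rough picture of what similarity search over embeddings means, the sketch below ranks a tiny in-memory index by cosine similarity. It is a brute-force illustration, not Pinecone's client API or indexing strategy; the `index` contents, vector values, and function names are made up for the example.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: List[float], index: Dict[str, List[float]],
          k: int = 2) -> List[Tuple[str, float]]:
    # Score every stored vector, then keep the k most similar.
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy 3-dimensional "embeddings"; real ones have hundreds of dimensions.
index = {
    "deploy-runbook": [0.9, 0.1, 0.0],
    "incident-log": [0.1, 0.9, 0.2],
    "api-guide": [0.7, 0.3, 0.1],
}
hits = top_k([1.0, 0.0, 0.0], index, k=2)
print([doc_id for doc_id, _ in hits])  # ['deploy-runbook', 'api-guide']
```

A managed vector database replaces the linear scan with approximate nearest-neighbor indexes, which is where the latency benefits on large datasets come from.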

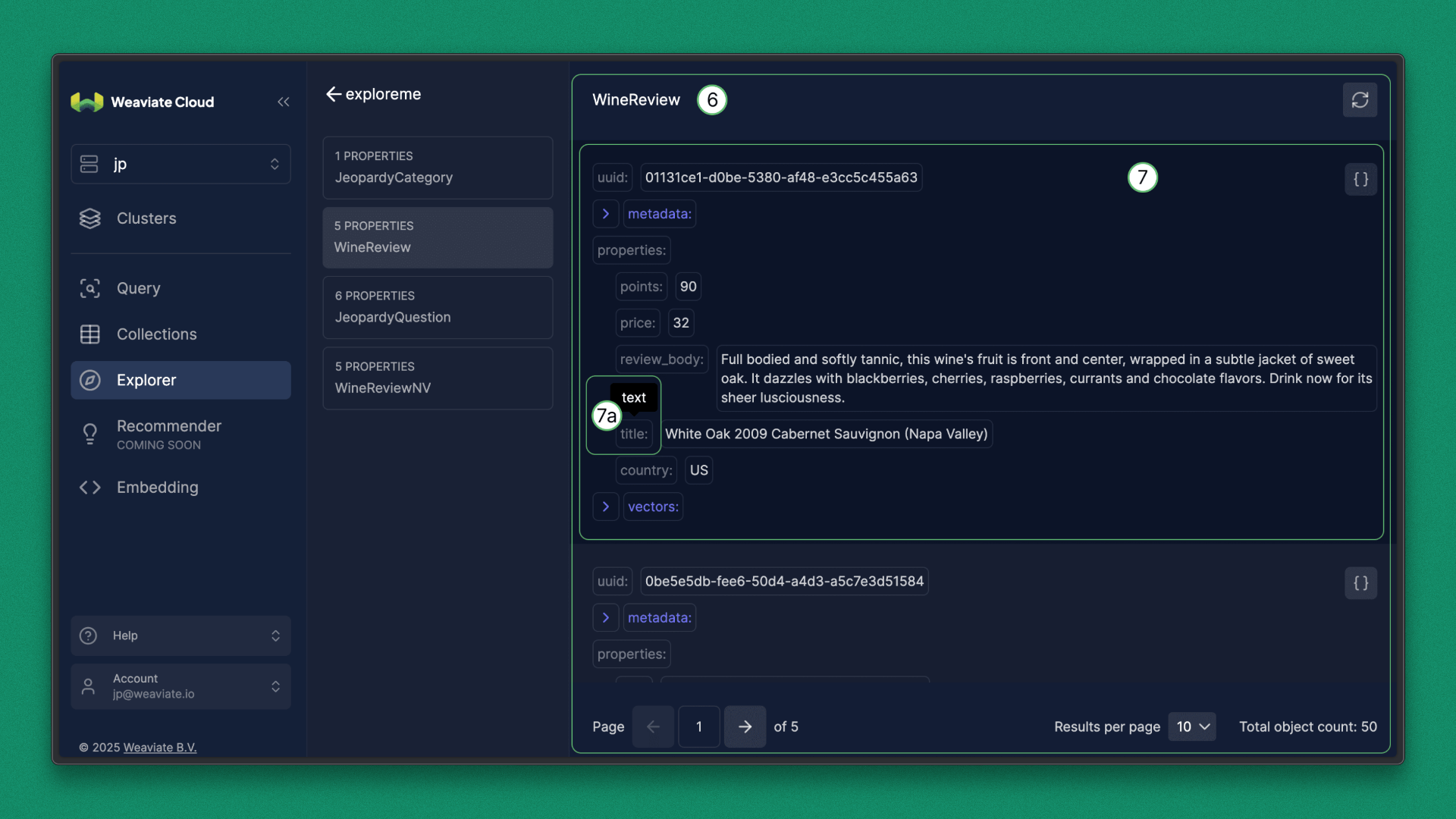

2. Weaviate

Weaviate extends vector search by combining semantic similarity with structured filtering. This allows agents to retrieve information based on meaning while applying constraints such as metadata fields, access rules, or time windows.

This hybrid approach is useful in enterprise settings, where retrieval must combine semantic understanding with deterministic filtering and schema awareness.
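The combination of structured filtering and semantic ranking can be sketched as "filter first, then rank". The example below is a plain-Python illustration and not Weaviate's query API; the `Record` fields, the `hybrid_search` function, and the use of a dot product as the similarity score are assumptions made for brevity.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Record:
    doc_id: str
    vector: List[float]
    team: str   # metadata field used for access-style filtering
    year: int   # metadata field used for a time window


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def hybrid_search(query: List[float], records: List[Record],
                  team: str, min_year: int, k: int = 2) -> List[str]:
    # Deterministic step: drop anything that fails the metadata constraints.
    candidates = [r for r in records if r.team == team and r.year >= min_year]
    # Semantic step: rank the survivors by similarity to the query.
    ranked = sorted(candidates, key=lambda r: dot(query, r.vector), reverse=True)
    return [r.doc_id for r in ranked[:k]]


records = [
    Record("a", [1.0, 0.0], "payments", 2025),
    Record("b", [0.8, 0.6], "payments", 2023),  # too old
    Record("c", [0.6, 0.8], "payments", 2026),
    Record("d", [1.0, 0.0], "search", 2026),    # wrong team
]
results = hybrid_search([1.0, 0.0], records, team="payments", min_year=2024)
print(results)  # ['a', 'c']
```

Record `d` matches the query perfectly but is excluded by the team filter, which is the behavior that matters when retrieval has to respect access rules.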

3. Zep

Zep focuses specifically on long-term memory for AI agents. It stores conversations, summaries, and interaction history in a structured format that agents can query over time. This makes Zep useful for agents that need continuity across sessions or personalization across repeated interactions. Rather than treating memory as raw embeddings, it emphasizes structured recall and session-aware context management.
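One way to picture session-aware memory is a per-session store that keeps a short window of recent messages and folds older ones into a running summary. The sketch below is hypothetical and does not reflect Zep's API or data model; in particular, the "summary" is a simple truncation standing in for an LLM-written one.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SessionMemory:
    window: int = 2                      # how many recent messages to keep verbatim
    summary: str = ""
    recent: List[str] = field(default_factory=list)

    def add(self, message: str) -> None:
        self.recent.append(message)
        while len(self.recent) > self.window:
            oldest = self.recent.pop(0)
            # Stand-in for summarization: keep the first 20 characters.
            self.summary = (self.summary + " | " + oldest[:20]).strip(" |")

    def context(self) -> str:
        return f"summary: {self.summary}\nrecent: {self.recent}"


# One memory object per session ID keeps users' histories separate.
store: Dict[str, SessionMemory] = defaultdict(SessionMemory)
for msg in ["prefers Python", "uses Postgres", "deploys on Fridays"]:
    store["user-42"].add(msg)

print(store["user-42"].context())
```

Keeping the summary and the recent window as separate fields lets an agent include both in a prompt without replaying the full history.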

Together, these tools support short-term context, long-term memory, and structured knowledge, enabling agents to reason more consistently across complex and extended workflows.

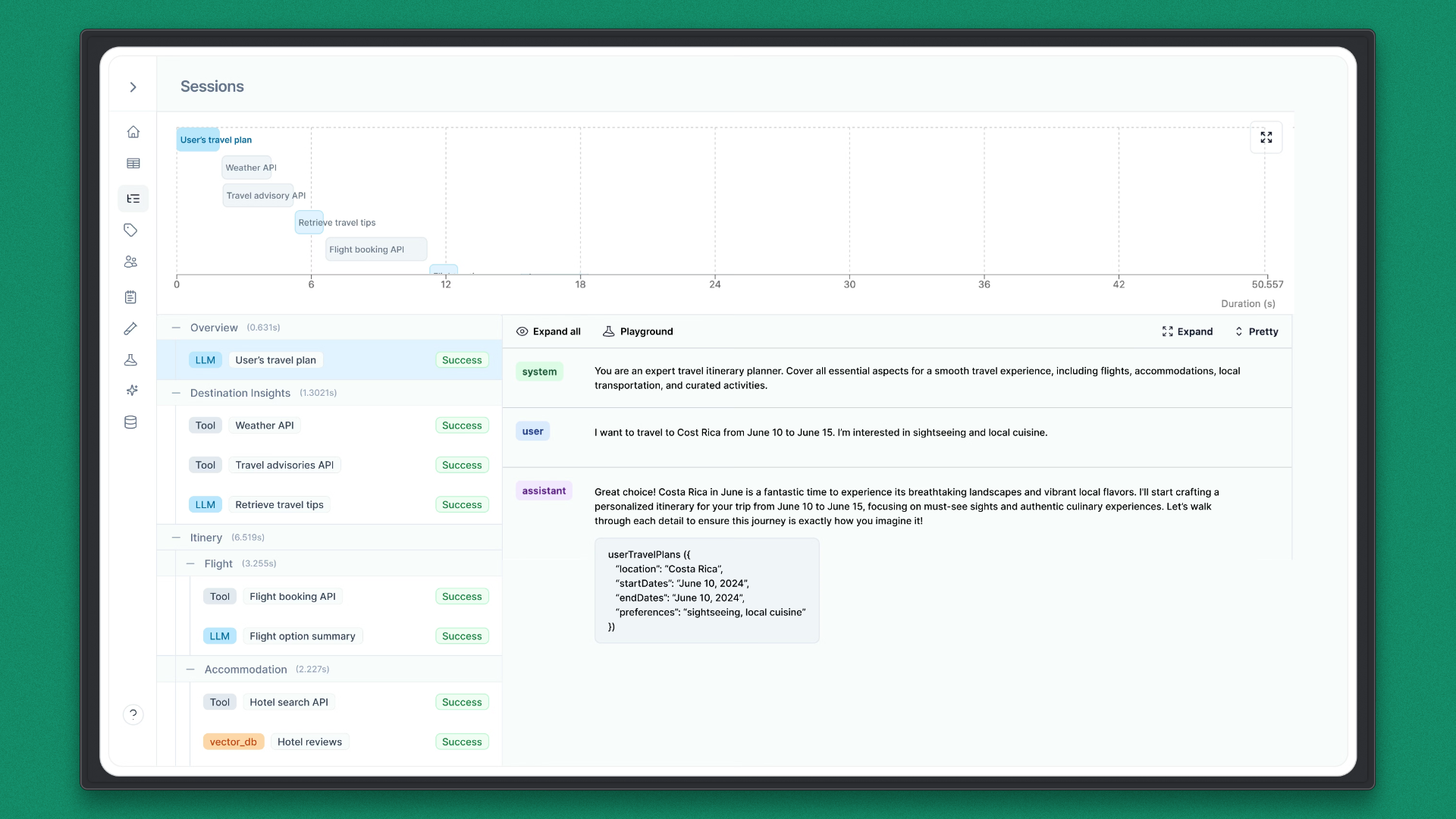

4. Observability, Evaluation, and Guardrails

As AI agents move beyond single responses into multi-step workflows, understanding how they behave becomes critical. Observability and evaluation tools help track decisions, diagnose failures, compare runs, and enforce constraints, making agent systems more predictable and trustworthy in production.

Commonly used tools in this category include:

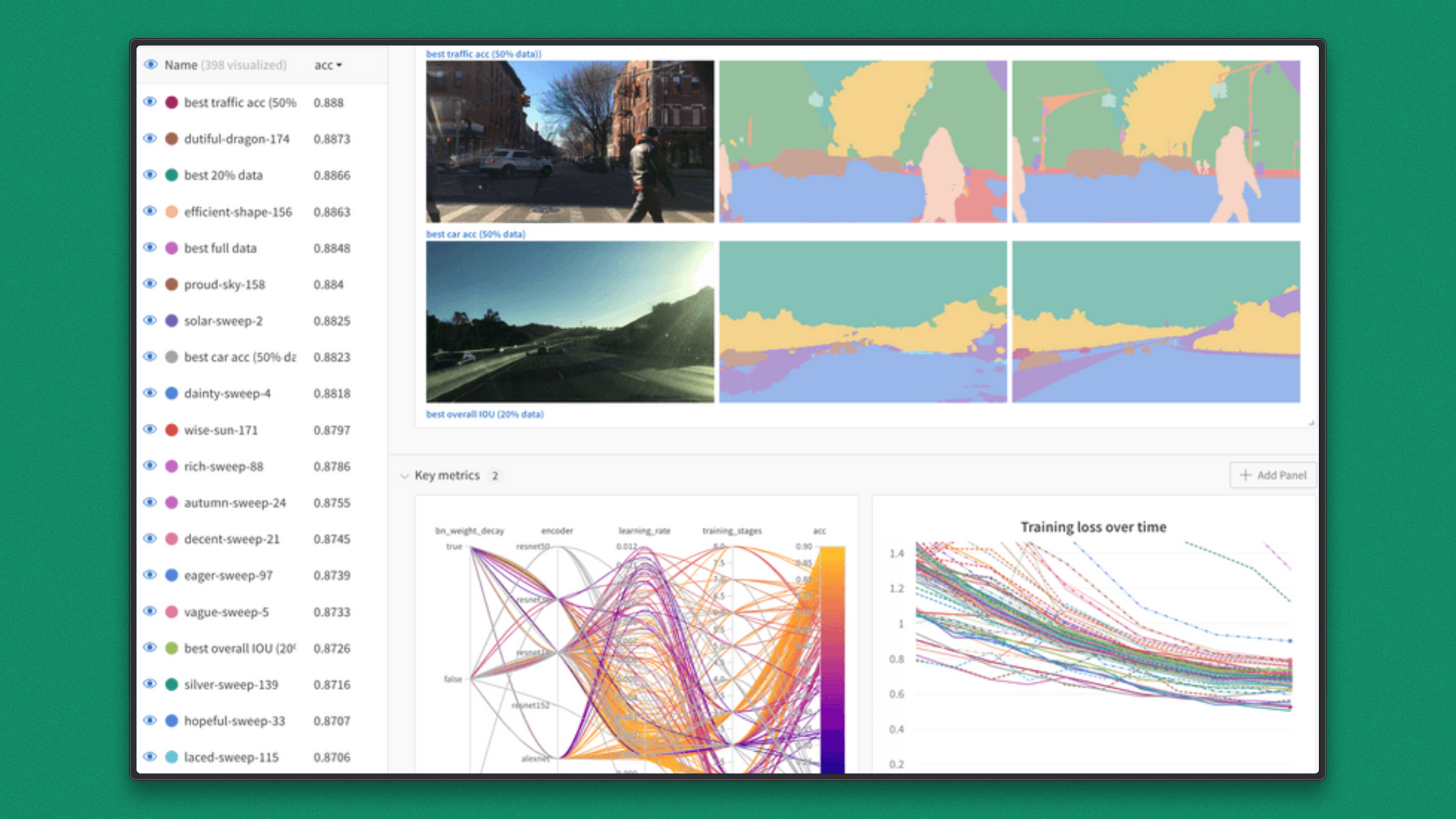

1. Weights & Biases

Weights & Biases is widely used to track experiments, model behavior, and system performance in AI-driven applications. In agent systems, it is often used to log prompts, intermediate steps, tool outputs, and final responses across runs, providing visibility into how agents behave over time.

This level of tracking helps identify regressions, unexpected behaviors, and performance bottlenecks.

It is particularly useful when agents are updated frequently or deployed across multiple workflows, where consistent evaluation and comparison become difficult without centralized observability.

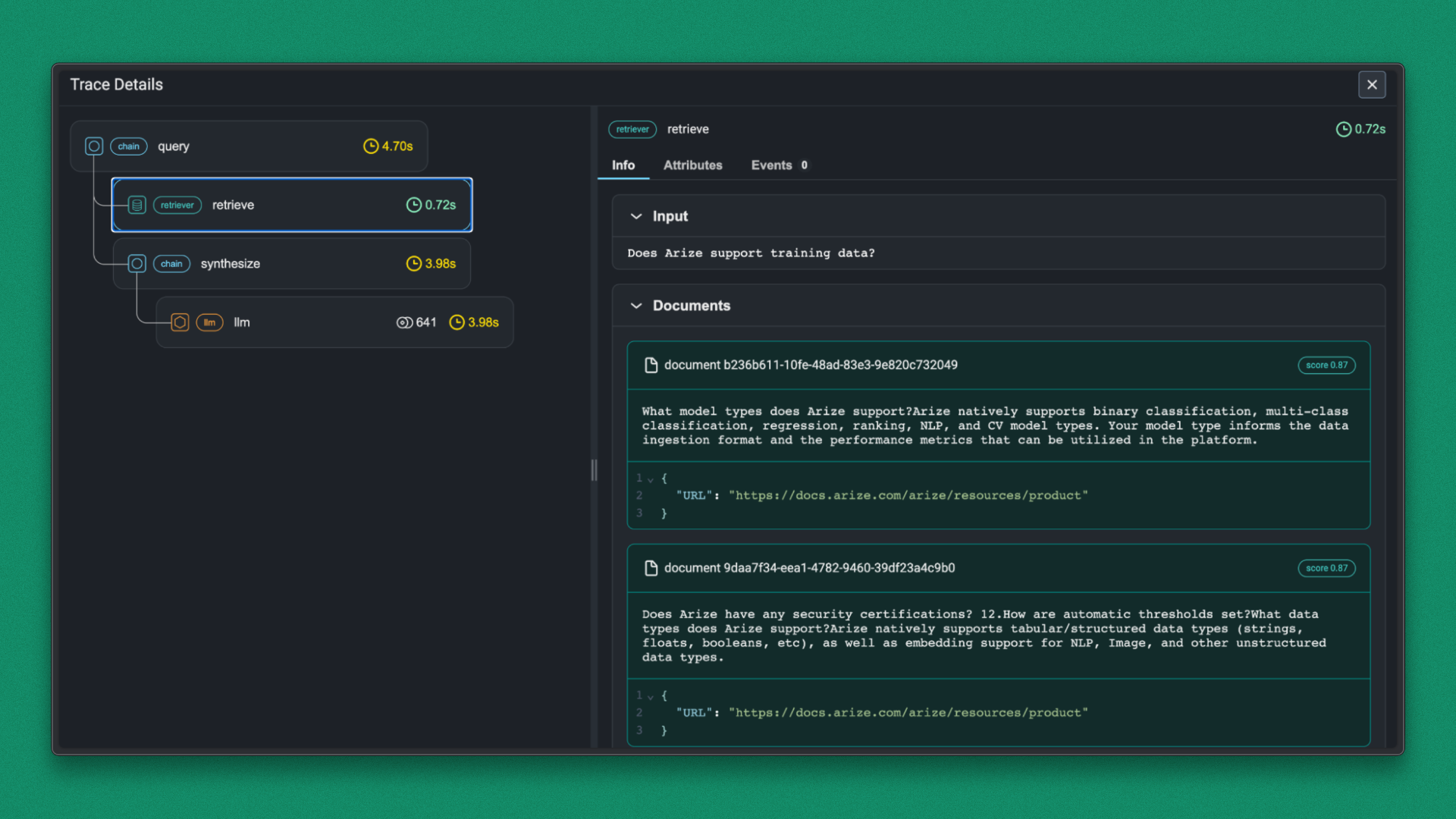

2. Arize

Arize focuses on monitoring and evaluating machine learning systems in production. In the context of AI agents, it is used to track output quality, detect drift, and monitor performance changes as data or behavior evolves. It supports continuous evaluation strategies that help ensure agents remain reliable over time. This is especially relevant for long-running or user-facing agents, where subtle degradations can accumulate without clear signals.

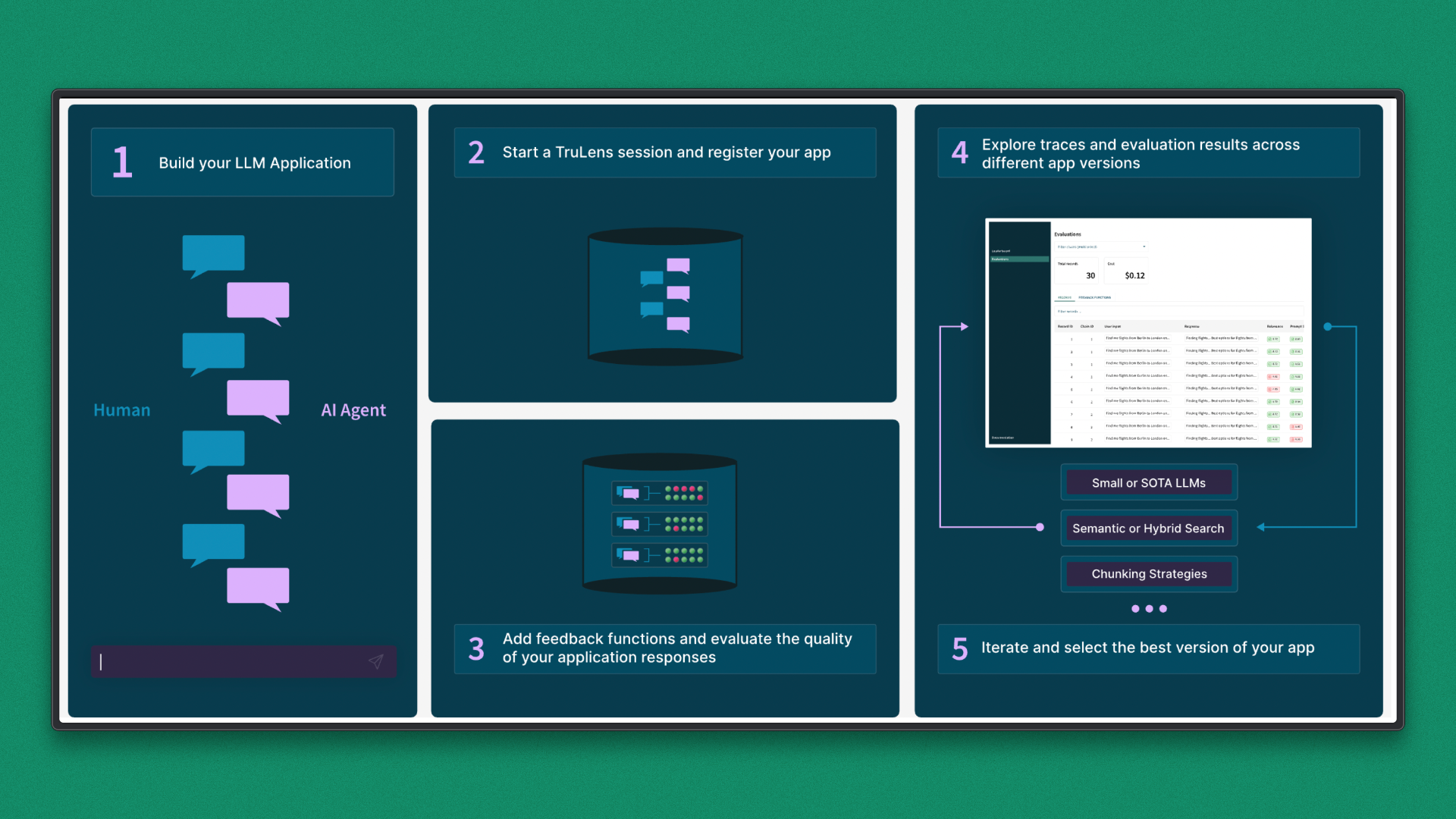

3. TruLens

TruLens is an evaluation framework designed to assess LLM and agent outputs against defined quality metrics. It enables teams to score responses based on relevance, correctness, groundedness, or custom rules.

In agent systems, TruLens is often employed in automated testing or guardrail pipelines. It helps validate agent behavior prior to deployment and provides ongoing checks to ensure outputs meet expected standards as workflows become more complex.
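A guardrail check of this kind can be approximated with a simple scoring function and a threshold. The sketch below uses token overlap as a crude groundedness proxy; it is not how TruLens computes its metrics, and `groundedness`, `passes_guardrail`, and the 0.7 threshold are illustrative assumptions.

```python
import re
from typing import Set


def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def groundedness(answer: str, context: str) -> float:
    """Fraction of distinct answer tokens that also appear in the context."""
    answer_tokens = tokens(answer)
    if not answer_tokens:
        return 0.0
    return len(answer_tokens & tokens(context)) / len(answer_tokens)


def passes_guardrail(answer: str, context: str, threshold: float = 0.7) -> bool:
    return groundedness(answer, context) >= threshold


context = "The deploy failed because the database migration timed out."
grounded = "The deploy failed because the migration timed out."
ungrounded = "The deploy failed due to a DNS outage in Frankfurt."

print(passes_guardrail(grounded, context))    # True
print(passes_guardrail(ungrounded, context))  # False
```

Production evaluators typically replace the overlap score with model-based judgments, but the pipeline shape (score, compare to threshold, block or flag) stays the same.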

4. Helicone

Helicone provides request-level observability for LLM usage, including latency, cost, and error tracking. It gives visibility into how agents interact with models across different workflows. For agent systems, Helicone helps identify performance issues, unexpected costs, and reliability problems without interfering with execution or data pipelines.
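Request-level observability boils down to recording latency, token usage, cost, and errors around every model call. The wrapper below is a minimal sketch of that idea and not Helicone's integration; the `RequestLog` fields, the flat `PRICE_PER_1K_TOKENS` rate, and the fake model functions are assumptions.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

PRICE_PER_1K_TOKENS = 0.002  # hypothetical flat rate in USD


@dataclass
class RequestLog:
    model: str
    latency_s: float
    total_tokens: int
    cost_usd: float
    error: Optional[str]


def observed_call(model: str, call: Callable[[str], Tuple[str, int]],
                  prompt: str, logs: List[RequestLog]) -> str:
    start = time.perf_counter()
    text, used_tokens, error = "", 0, None
    try:
        text, used_tokens = call(prompt)
    except Exception as exc:
        # Record the failure instead of losing it; callers get an empty string.
        error = type(exc).__name__
    logs.append(RequestLog(
        model=model,
        latency_s=time.perf_counter() - start,
        total_tokens=used_tokens,
        cost_usd=used_tokens / 1000 * PRICE_PER_1K_TOKENS,
        error=error,
    ))
    return text


def fake_model(prompt: str) -> Tuple[str, int]:
    return prompt.upper(), 500  # (response text, tokens used)


def broken_model(prompt: str) -> Tuple[str, int]:
    raise TimeoutError("simulated provider timeout")


logs: List[RequestLog] = []
ok = observed_call("model-a", fake_model, "hello", logs)
failed = observed_call("model-b", broken_model, "hello", logs)
print(ok, [(entry.model, entry.error) for entry in logs])
```

Aggregating these records per workflow is what surfaces unexpected cost or error spikes without touching the agent's own logic.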

Other Noteworthy Tools in Agent Workflows

Not every important agent tool fits into orchestration, memory, or runtime categories. Some tools quietly solve problems around integration, learning from usage, feedback, and reliability. These tools often determine whether agents remain prototypes or mature into dependable systems.

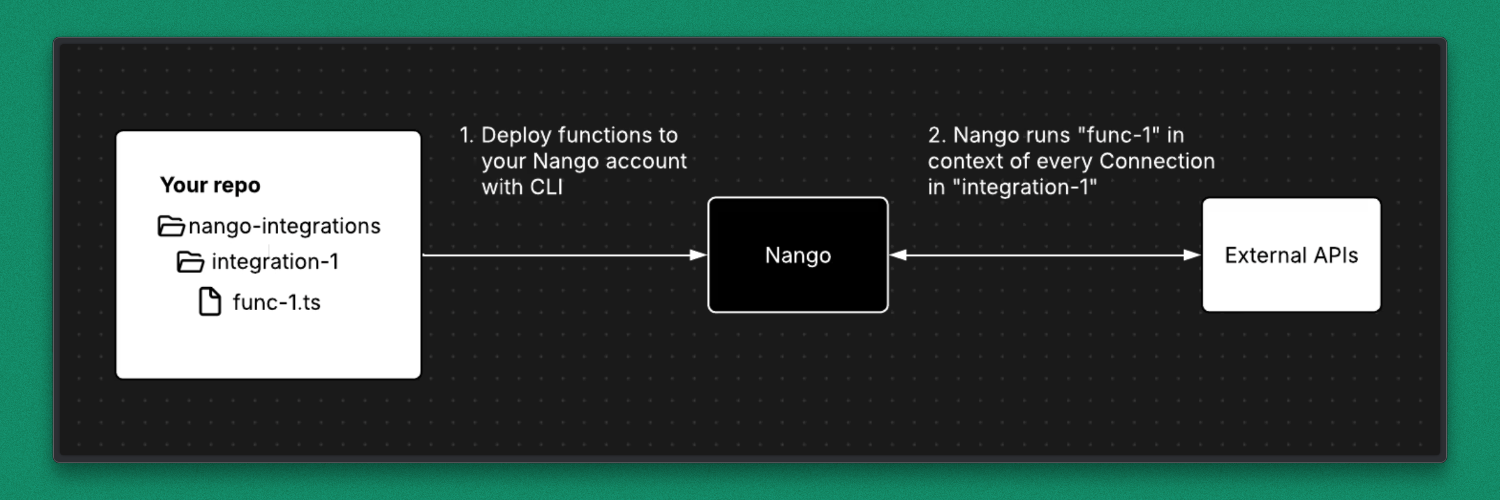

1. Nango

Nango addresses one of the most fragile layers in agent systems: external integrations. It abstracts authentication, token refresh, and provider-specific API behavior behind a unified interface, allowing agents to interact with SaaS products and internal services without embedding custom logic.

As agents begin to take actions across multiple systems, integration reliability becomes critical. Nango centralizes credential handling and integration logic, reducing long-term maintenance overhead and minimizing unexpected failures during resource-intensive workflows.

It also helps standardize how agents interact with third-party services as their scope expands.
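Centralized credential handling with token refresh can be illustrated with a small store that hands out cached tokens and refreshes them once they expire. This is a sketch of the pattern only, not Nango's API; `TokenStore`, the `refresh_github` function, and the 60-second lifetime are hypothetical, and a fake clock is injected so the behavior is deterministic.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Token:
    value: str
    expires_at: float


class TokenStore:
    """Single place where agents obtain credentials for any provider."""

    def __init__(self, refreshers: Dict[str, Callable[[float], Token]],
                 clock: Callable[[], float]) -> None:
        self._refreshers = refreshers
        self._clock = clock
        self._tokens: Dict[str, Token] = {}

    def get(self, provider: str) -> str:
        token = self._tokens.get(provider)
        # Refresh when there is no token yet or the cached one has expired.
        if token is None or token.expires_at <= self._clock():
            token = self._refreshers[provider](self._clock())
            self._tokens[provider] = token
        return token.value


now = [0.0]               # mutable fake clock
issued: List[float] = []  # timestamps at which a refresh happened


def refresh_github(t: float) -> Token:
    issued.append(t)
    return Token(value=f"gh-token-{len(issued)}", expires_at=t + 60)


store = TokenStore({"github": refresh_github}, clock=lambda: now[0])
first = store.get("github")   # fetched
second = store.get("github")  # served from cache
now[0] = 61.0
third = store.get("github")   # expired, so refreshed
print(first, second, third)
```

Agents call `store.get(...)` and never see refresh logic, which is the maintenance benefit the section describes.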

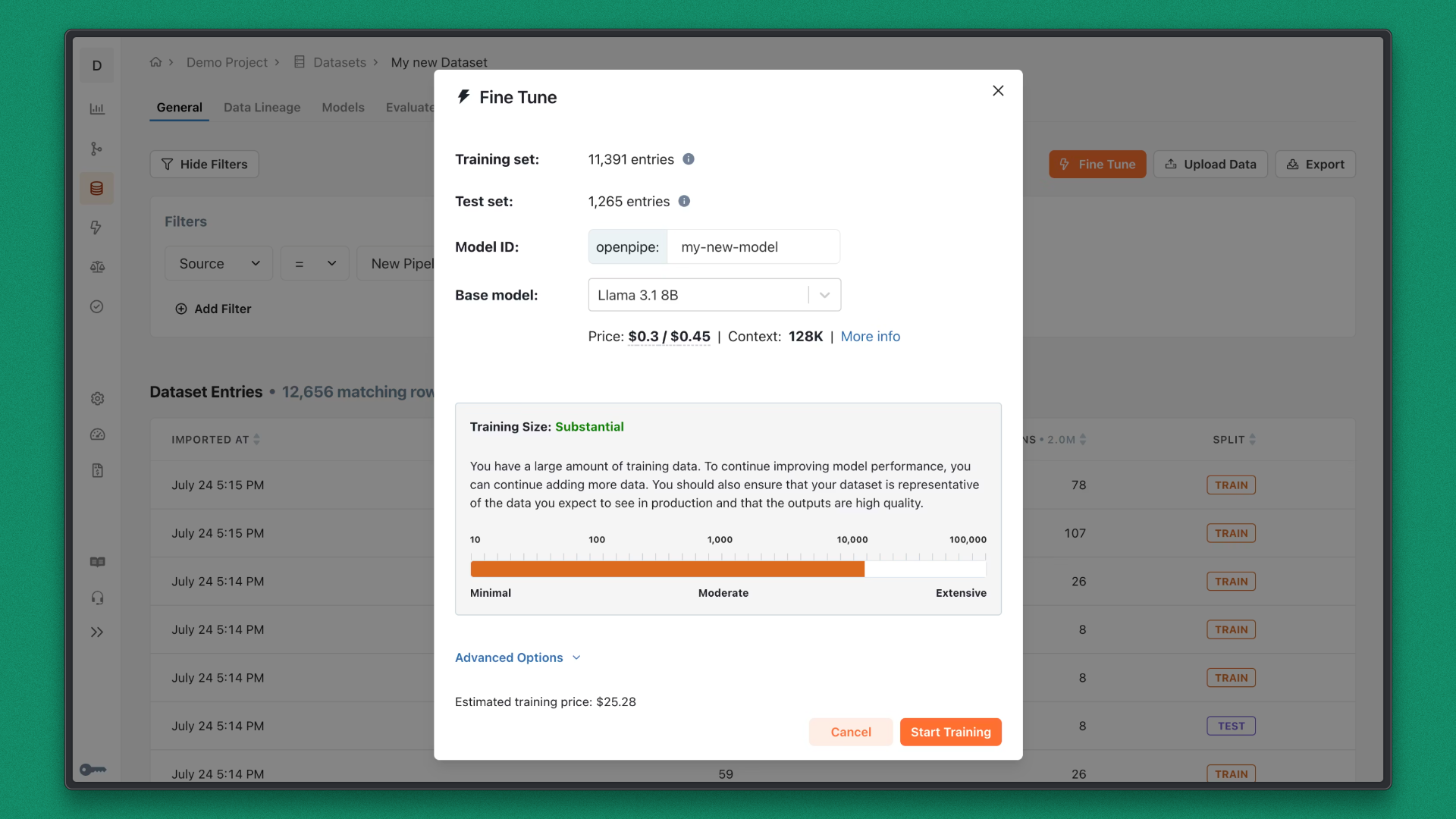

2. OpenPipe

OpenPipe focuses on learning from real agent usage. It captures prompts, responses, and outcomes from live systems and converts them into structured datasets for fine-tuning or model improvement.

This approach enables agent behavior to improve through real-world interactions rather than synthetic examples.

OpenPipe is particularly useful in domain-specific systems where repeated failure patterns can be addressed through model adaptation rather than prompt adjustments alone.

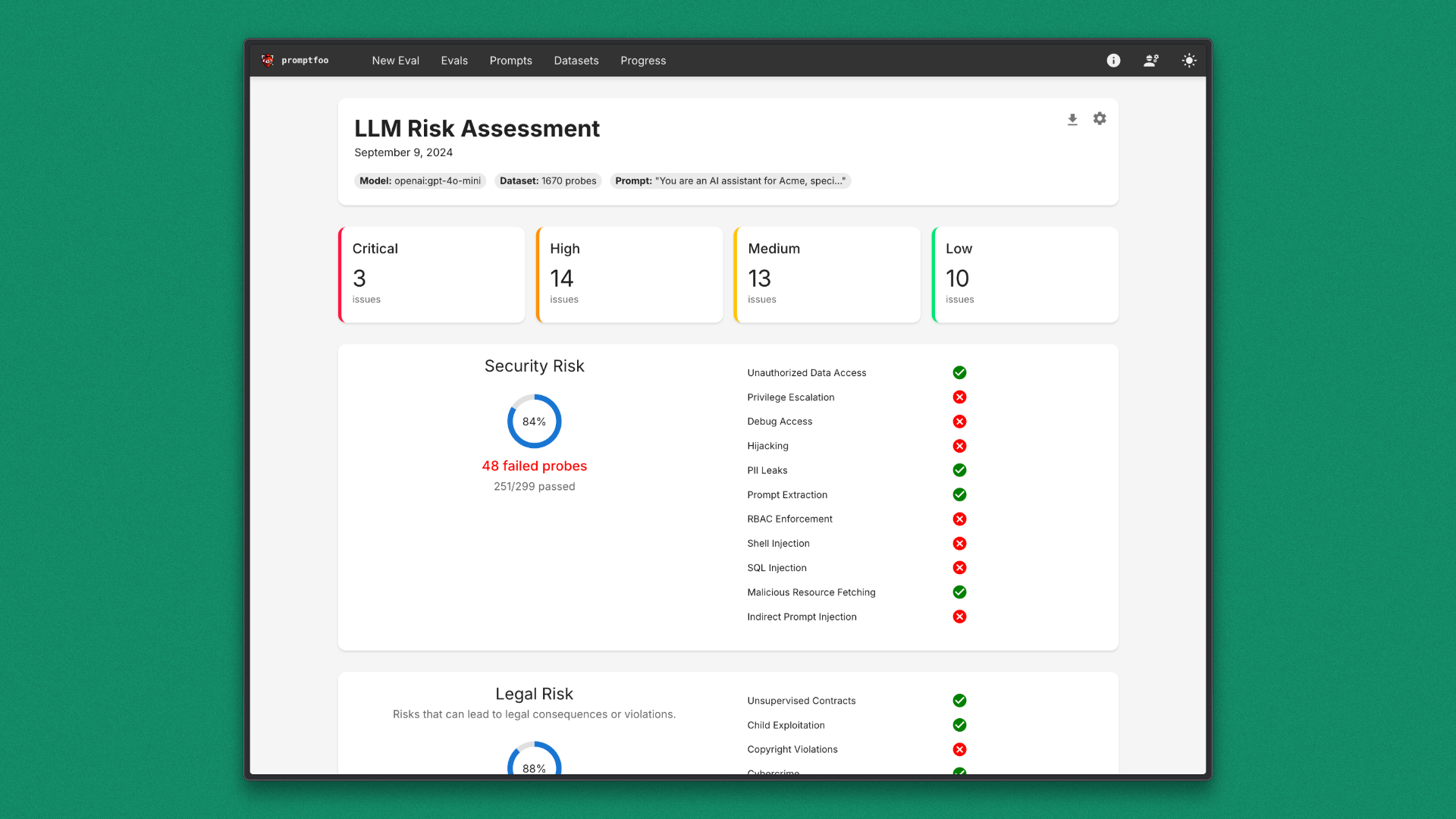

3. Promptfoo

Promptfoo is designed to test and compare agent behavior across prompts, models, and configurations. It helps surface regressions and unexpected changes as systems evolve. In agent-based workflows where small changes can have cascading effects, Promptfoo provides a practical way to validate behavior before updates reach production.
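The regression-testing workflow described above amounts to running the same test cases against several variants and comparing failures. The sketch below shows that loop in plain Python; it is not Promptfoo's configuration format or CLI, and the `evaluate` function, the two variants, and the substring assertion are illustrative assumptions.

```python
from typing import Callable, Dict, List, Tuple

# A case is (input prompt, substring expected somewhere in the output).
Case = Tuple[str, str]


def evaluate(variants: Dict[str, Callable[[str], str]],
             cases: List[Case]) -> Dict[str, List[str]]:
    """Return, per variant, the list of inputs whose output failed the check."""
    failures: Dict[str, List[str]] = {name: [] for name in variants}
    for name, run in variants.items():
        for prompt, expected in cases:
            if expected not in run(prompt):
                failures[name].append(prompt)
    return failures


def baseline(prompt: str) -> str:
    return f"Answer: {prompt.strip().lower()}"


def candidate(prompt: str) -> str:
    # A seemingly harmless change: the lowercasing step was dropped.
    return f"Answer: {prompt.strip()}"


cases: List[Case] = [("  Refund Policy ", "refund policy"), ("sla", "sla")]
report = evaluate({"baseline": baseline, "candidate": candidate}, cases)
print(report)
```

Running such a comparison before each release is a lightweight way to catch a small change that silently alters behavior on existing cases.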

How to Choose the Right Tools for Your Task

Every AI agent behaves differently in real workflows. Some complete a single action and exit, whereas others proceed through multiple steps, revisiting data and decisions over time. Tool choices should follow this pattern, with orchestration and execution reliability becoming increasingly important as agents become longer-lived and more complex.

The nature of the data quickly shapes the rest of the stack. Agents working with large document collections, logs, or analytics pipelines require robust ingestion and execution layers, where platforms such as Tensorlake help manage document processing, durable execution, and repeatable workflows. Agents focused on reasoning or coordination place greater emphasis on orchestration and memory systems, with less reliance on intensive data handling.

Long-term success depends on how the agent is maintained and improved. Systems that run continuously or interact with users require observability, testing, and feedback loops to prevent silent failures.

Conclusion

AI agents in 2026 are no longer defined by isolated frameworks or features. Their effectiveness comes from how well different parts of the system work together, from coordination and data handling to execution and evaluation. As agents adopt longer, more complex workflows, thoughtful system design is more important than adding new layers of agent logic.

The most reliable agent systems are built with a clear understanding of their purpose and constraints. Choosing tools that simplify execution, enhance visibility, and support long-term maintenance enables agents to move beyond experimentation and deliver consistent value in real-world environments.
