Unlocking Smarter RAG with Qdrant + Tensorlake: Structured Filters Meet Semantic Search

TL;DR

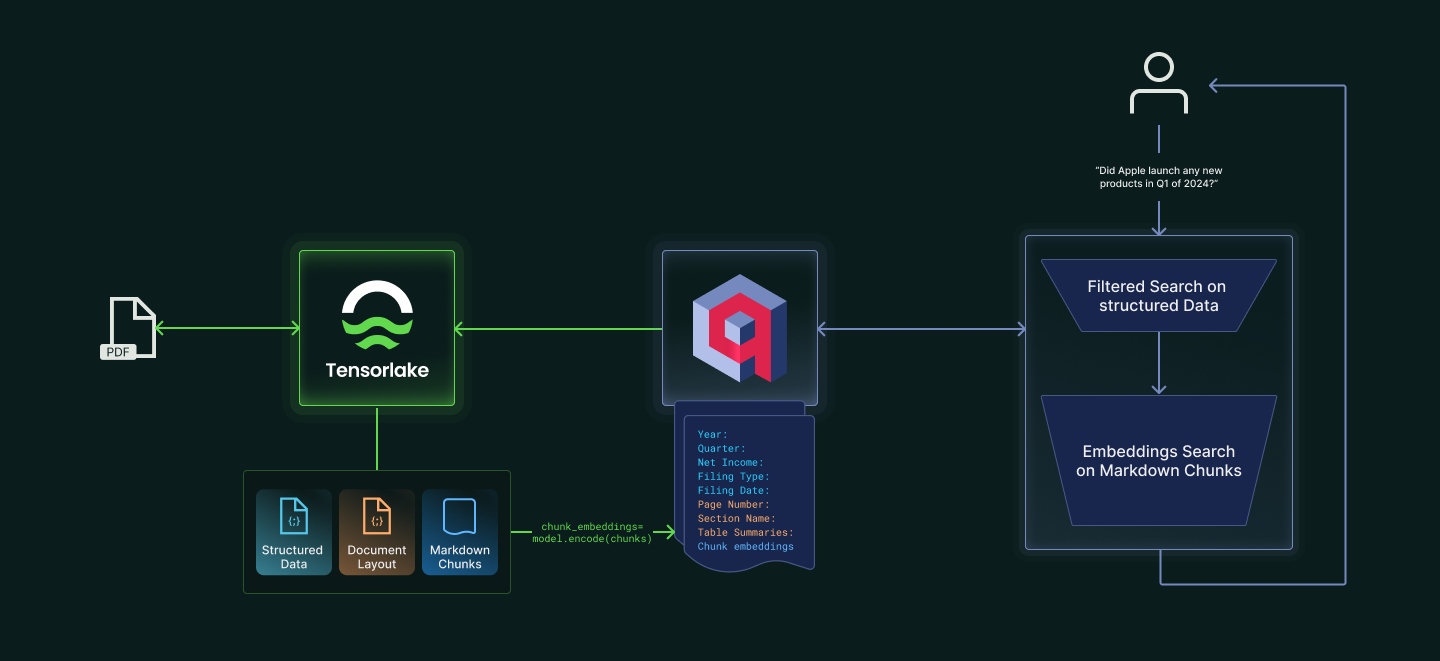

RAG is evolving, and simple embedding lookup doesn't cut it anymore. Learn how to build production-grade retrieval workflows by combining Tensorlake's rich document understanding with Qdrant's hybrid search and filtering. Parse PDFs, extract structured data, and perform filtered semantic search, all in a few lines of code.

RAG isn't new. But the bar for what constitutes "good RAG" has risen dramatically. Simple embedding lookup was sufficient in 2023, but today's applications demand far more sophisticated retrieval to avoid hallucinations and irrelevant responses.

Modern baseline RAG requires:

- Hybrid search: Vector + full-text search for comprehensive coverage

- Rich metadata filtering: Structured data to narrow search scope before retrieval

- Table and figure understanding: Complex visual content properly parsed, summarized, and embedded

- Global document context: Every chunk enriched with document-level metadata
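Hybrid search produces two rankings, one from vector similarity and one from full-text matching, and they have to be merged into a single list. A common way to do that is Reciprocal Rank Fusion (RRF), which Qdrant also supports as a fusion method in its Query API. The following is a minimal plain-Python sketch of the RRF idea, not Qdrant's implementation; the function name and chunk IDs are hypothetical.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for a stable order
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results from a vector search and a full-text search
vector_hits = ["chunk_a", "chunk_b", "chunk_c"]
keyword_hits = ["chunk_c", "chunk_a", "chunk_d"]

print(reciprocal_rank_fusion([vector_hits, keyword_hits]))
# ['chunk_a', 'chunk_c', 'chunk_b', 'chunk_d']
```

Because RRF only looks at rank positions, it sidesteps the fact that cosine scores and full-text scores live on different scales. `k` dampens the advantage of the very top positions; 60 is a conventional default.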

To keep up with modern RAG, you are probably chaining multiple model calls through an orchestration system, which means managing state between calls by writing intermediate outputs to storage. That adds ongoing maintenance and infrastructure work.

With Tensorlake, you get the power of the best models without needing to maintain any infrastructure. Tensorlake delivers everything you need to build modern RAG in a single API call. In this post, we'll show you how to implement next-level RAG with structured filtering, semantic search, and comprehensive document understanding, all integrated seamlessly.

Beyond simple embedding lookup: What modern RAG demands

Traditional RAG implementations that rely solely on vector similarity often fail in production because they miss critical context and retrieve irrelevant passages. When dealing with complex documents like financial reports, research papers, or legal contracts, you need structured filters to narrow your search space before running semantic queries.
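Conceptually, a filtered query keeps only the points whose metadata matches and ranks the survivors by similarity. This plain-Python sketch models that behavior with toy 2-D vectors and made-up payloads (`filtered_search` and the sample data are hypothetical). It models the expected result, not Qdrant's internals: Qdrant applies filters inside its index search, using payload indexes, rather than scanning every point.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def filtered_search(points, query_vector, conditions, limit=3):
    """Keep points whose payload satisfies every condition, then rank by similarity.

    A condition matches if the payload value equals the expected value or,
    for list-valued fields such as author names, if the list contains it.
    """
    def matches(payload):
        for key, expected in conditions.items():
            value = payload.get(key)
            if isinstance(value, list):
                if expected not in value:
                    return False
            elif value != expected:
                return False
        return True

    candidates = [p for p in points if matches(p["payload"])]
    candidates.sort(key=lambda p: cosine(p["vector"], query_vector), reverse=True)
    return candidates[:limit]


# Toy 2-D "embeddings" with hypothetical payloads
points = [
    {"id": 1, "vector": [0.9, 0.1], "payload": {"author_names": ["A. Smith"], "conference_year": "2014"}},
    {"id": 2, "vector": [1.0, 0.0], "payload": {"author_names": ["B. Jones"], "conference_year": "2014"}},
    {"id": 3, "vector": [0.2, 0.8], "payload": {"author_names": ["A. Smith", "B. Jones"], "conference_year": "2019"}},
]

hits = filtered_search(points, [1.0, 0.0], {"author_names": "A. Smith"})
print([p["id"] for p in hits])  # [1, 3]
```

Without the author condition, point 2 would rank first because it is identical to the query; with the condition it never competes, so only points 1 and 3 are returned.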

Tensorlake extracts structured data, parses complex tables and figures into markdown (with summaries), and provides document-level metadata in a single API call. Combined with Qdrant's filtering and hybrid search capabilities, you get production-grade RAG without building complex parsing pipelines.

Try it yourself: Academic research papers demo

To show how simple this really is, we put together a live demo using academic research papers about computer science education. You can try this out for yourself using this Colab Notebook.

This example demonstrates modern RAG requirements with complex documents that have:

- Complex reading order: Author names in columns with mixed affiliations, text in multiple columns

- Critical figures and tables: Data that's essential for accurate embeddings (already parsed into markdown)

- Rich metadata: Conference info, authors, universities for precise filtering

We'll build a hybrid search system (with structured filters) that can answer questions like "Does computer science education improve problem solving skills?" while filtering by specific authors, conferences, or years.
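Each of those constraints becomes one condition in a Qdrant filter. A minimal sketch, assuming the payload field names used in Step 2 below (`author_names`, `conference_name`, `conference_year`): the hypothetical `build_filter` helper emits the JSON form of a Qdrant filter, the same `must` / `key` / `match` structure that the Python client's `models.Filter`, `models.FieldCondition`, and `models.MatchValue` express in the filtered search of Step 3.

```python
def build_filter(author=None, conference=None, year=None):
    """Build a Qdrant-style JSON filter from optional metadata constraints.

    Every supplied constraint becomes a "must" condition, so results have to
    satisfy all of them. Returns None when no constraint is given, which
    stands for an unfiltered search.
    """
    fields = {
        "author_names": author,
        "conference_name": conference,
        "conference_year": year,
    }
    must = [
        {"key": key, "match": {"value": value}}
        for key, value in fields.items()
        if value is not None
    ]
    return {"must": must} if must else None


print(build_filter(author="William G. Griswold", year="2014"))
```

Conditions under `must` are combined with AND. Years are matched as strings here because the extraction schema declares `year` as `str`.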

It's really just three quick steps:

- Parse documents with Tensorlake

- Create embeddings and upsert to Qdrant

- Perform hybrid search with structured filtering

Step 1: Parse documents with Tensorlake

First, define a schema for the structured data you want extracted. For research papers, we'll extract metadata like authors and conference information:

```python
from typing import List

from pydantic import BaseModel, Field


class Author(BaseModel):
    """Author information for a research paper"""
    name: str = Field(description="Full name of the author")
    affiliation: str = Field(description="Institution or organization affiliation")


class Conference(BaseModel):
    """Conference or journal information"""
    name: str = Field(description="Name of the conference or journal")
    year: str = Field(description="Year of publication")
    location: str = Field(description="Location of the conference or journal publication")


class ResearchPaperMetadata(BaseModel):
    """Complete schema for extracting research paper information"""
    authors: List[Author] = Field(description="List of authors with their affiliations. Authors will be listed below the title and above the main text of the paper. Authors will often be in multiple columns and there may be multiple authors associated to a single affiliation.")
    conference_journal: Conference = Field(description="Conference or journal information")
    title: str = Field(description="Title of the research paper")


# Convert to JSON schema for Tensorlake
json_schema = ResearchPaperMetadata.model_json_schema()
```

Then, use Tensorlake's Document Ingestion API to parse research paper PDFs and extract both structured JSON data and markdown chunks:

```python
# Assumes the tensorlake Python SDK (pip install tensorlake); check the
# names exported by tensorlake.documentai for your SDK version
from tensorlake.documentai import (
    ChunkingStrategy,
    DocumentAI,
    EnrichmentOptions,
    ParsingOptions,
    StructuredExtractionOptions,
    TableOutputMode,
    TableParsingFormat,
)

doc_ai = DocumentAI(api_key="your-tensorlake-api-key")

file_path = "path/to/your/research_paper.pdf"
file_id = doc_ai.upload(path=file_path)

# Configure parsing options
parsing_options = ParsingOptions(
    chunking_strategy=ChunkingStrategy.SECTION,
    table_parsing_strategy=TableParsingFormat.TSR,
    table_output_mode=TableOutputMode.MARKDOWN,
)

# Create structured extraction options with the JSON schema from above
structured_extraction_options = [StructuredExtractionOptions(
    schema_name="ResearchPaper",
    json_schema=json_schema,
)]

# Create enrichment options
enrichment_options = EnrichmentOptions(
    figure_summarization=True,
    figure_summarization_prompt="Summarize the figure beyond the caption by describing the data as it relates to the context of the research paper.",
    table_summarization=True,
    table_summarization_prompt="Summarize the table beyond the caption by describing the data as it relates to the context of the research paper.",
)

# Start the parsing job on the uploaded file, then block until it finishes
parse_id = doc_ai.parse(
    file_id,
    parsing_options=parsing_options,
    structured_extraction_options=structured_extraction_options,
    enrichment_options=enrichment_options,
)
result = doc_ai.wait_for_completion(parse_id)

# Collect results - structured data and markdown chunks (table and figure summaries already in chunks)
structured_data = result.structured_data[0] if result.structured_data else {}
chunks = result.chunks if result.chunks else []
```

Step 2: Create embeddings and upsert to Qdrant

Create embeddings for markdown chunks (which include table and figure summaries) and store in Qdrant with structured metadata for filtering:

```python
from uuid import uuid4

from qdrant_client import QdrantClient, models
from sentence_transformers import SentenceTransformer

# Initialize embedding model (384-dimensional output) and Qdrant client
model = SentenceTransformer("all-MiniLM-L6-v2")
qdrant_client = QdrantClient(url="your-qdrant-url", api_key="your-api-key")


def create_qdrant_collection(structured_data, chunks, doc_idx=0):
    # Create collection if it doesn't exist; size must match the embedding model
    collection_name = "research_papers"
    if not qdrant_client.collection_exists(collection_name):
        qdrant_client.create_collection(
            collection_name=collection_name,
            vectors_config=models.VectorParams(size=384, distance=models.Distance.COSINE),
        )

    # Extract structured metadata for global document context
    author_names = []
    conference_name = ""
    conference_year = ""
    title = ""

    if structured_data:
        # Extract author information for filtering
        if 'authors' in structured_data.data:
            for author in structured_data.data['authors']:
                if isinstance(author, dict):
                    author_names.append(author.get('name', ''))

        # Extract conference information for filtering
        if 'conference_journal' in structured_data.data:
            conf = structured_data.data['conference_journal']
            if isinstance(conf, dict):
                conference_name = conf.get('name', '')
                conference_year = conf.get('year', '')

        title = structured_data.data.get('title', '')

    # Create embeddings for markdown chunks (tables/figures already included)
    chunk_texts = [chunk.content for chunk in chunks]
    if not chunk_texts:
        return  # nothing to embed for this document
    vectors = model.encode(chunk_texts).tolist()

    # Attach the same document-level metadata to every chunk
    points = []
    for i, chunk in enumerate(chunk_texts):
        payload = {
            "content": chunk,
            "document_index": doc_idx,  # position of this document in your corpus
            # Structured data fields for filtering
            "title": title,
            "author_names": author_names,  # List for filtering by specific authors
            "conference_name": conference_name,
            "conference_year": conference_year,
        }
        points.append(models.PointStruct(id=str(uuid4()), vector=vectors[i], payload=payload))

    # Insert data into Qdrant
    qdrant_client.upsert(collection_name=collection_name, points=points)

    # Index the filterable payload fields so filtered searches stay fast
    for field in ("title", "author_names", "conference_name", "conference_year"):
        qdrant_client.create_payload_index(
            collection_name=collection_name,
            field_name=field,
            field_schema=models.PayloadSchemaType.KEYWORD,
        )
```

Step 3: Perform hybrid search with structured filtering

Now you can perform both simple queries and filtered searches and test the results.

Simple search

```python
points = qdrant_client.query_points(
    collection_name="research_papers",
    query=model.encode("Does computer science education improve problem solving skills?").tolist(),
    limit=3,
).points

for point in points:
    print(point.payload.get('title', 'Unknown'), "score:", point.score)
```

With the output:

```text
CodeSpells: Bridging Educational Language Features with Industry-Standard Languages score: 0.57552844
CHILDREN'S PERCEPTIONS OF WHAT COUNTS AS A PROGRAMMING LANGUAGE score: 0.55624765
Experience Report: an AP CS Principles University Pilot score: 0.54369175
```

Filtered search

```python
points = qdrant_client.query_points(
    collection_name="research_papers",
    query=model.encode("Does computer science education improve problem solving skills?").tolist(),
    # Only consider chunks whose author_names list contains this author
    query_filter=models.Filter(
        must=[
            models.FieldCondition(
                key="author_names",
                match=models.MatchValue(
                    value="William G. Griswold",
                ),
            )
        ]
    ),
    limit=3,
).points

for point in points:
    print(point.payload.get('title', 'Unknown'), point.payload.get('conference_name', 'Unknown'), "score:", point.score)
```

With the output:

```text
CodeSpells: Bridging Educational Language Features with Industry-Standard Languages Koli Calling '14 score: 0.57552844
CODESPELLS: HOW TO DESIGN QUESTS TO TEACH JAVA CONCEPTS Consortium for Computing Sciences in Colleges score: 0.4907498
CodeSpells: Bridging Educational Language Features with Industry-Standard Languages Koli Calling '14 score: 0.4823265
```

Modern RAG in production: Structured + semantic

Building production-grade RAG requires more than embedding models and vector search. You need structured metadata extraction, hybrid search capabilities, comprehensive document understanding, and global context—all integrated seamlessly.

Whether you're building an agent that searches academic literature, audits financial reports, or summarizes patient history, the combination of Tensorlake's document understanding and Qdrant's hybrid search gives you the foundation for reliable, accurate RAG systems.

With documents like research papers, you can see how a single Tensorlake API call covering markdown conversion, structured data extraction, and table and figure summarization provides the rich context modern RAG demands. You get complete, accurate data while reducing pipeline complexity.

Next Steps: Try Tensorlake

When you sign up with Tensorlake you get 100 free credits so that you can parse your own documents.

Explore the rest of our cookbooks and learn how to make vector database search more effective for your RAG workflows today. Start with the research papers Colab notebook or build your own workflow from the Tensorlake API docs.

Got feedback or want to show us what you built? Join the conversation in our Slack Community!

Ready to get started? Head over to our Qdrant docs to find all the documentation, tips, and samples.

Happy parsing!
