Parse and Retrieve Dense Tables Accurately with Tensorlake

TL;DR

Tensorlake now preserves the structure of dense, multi-page tables, returning them as DataFrames with bounding boxes and summaries so you can run accurate, explainable lookups tied to the source cells.

Tables power critical decisions from financial statements to clinical trial results and model benchmarks. But retrieving data faithfully from tables remains one of the hardest problems in retrieval.

In this post you will learn:

- Why traditional parsers fail with real-world tables

- Why preserving table structure and summarizing tables are necessary for accurate table queries

- How to parse tables reliably and accurately with Tensorlake

- Which retrieval patterns are most effective for tables

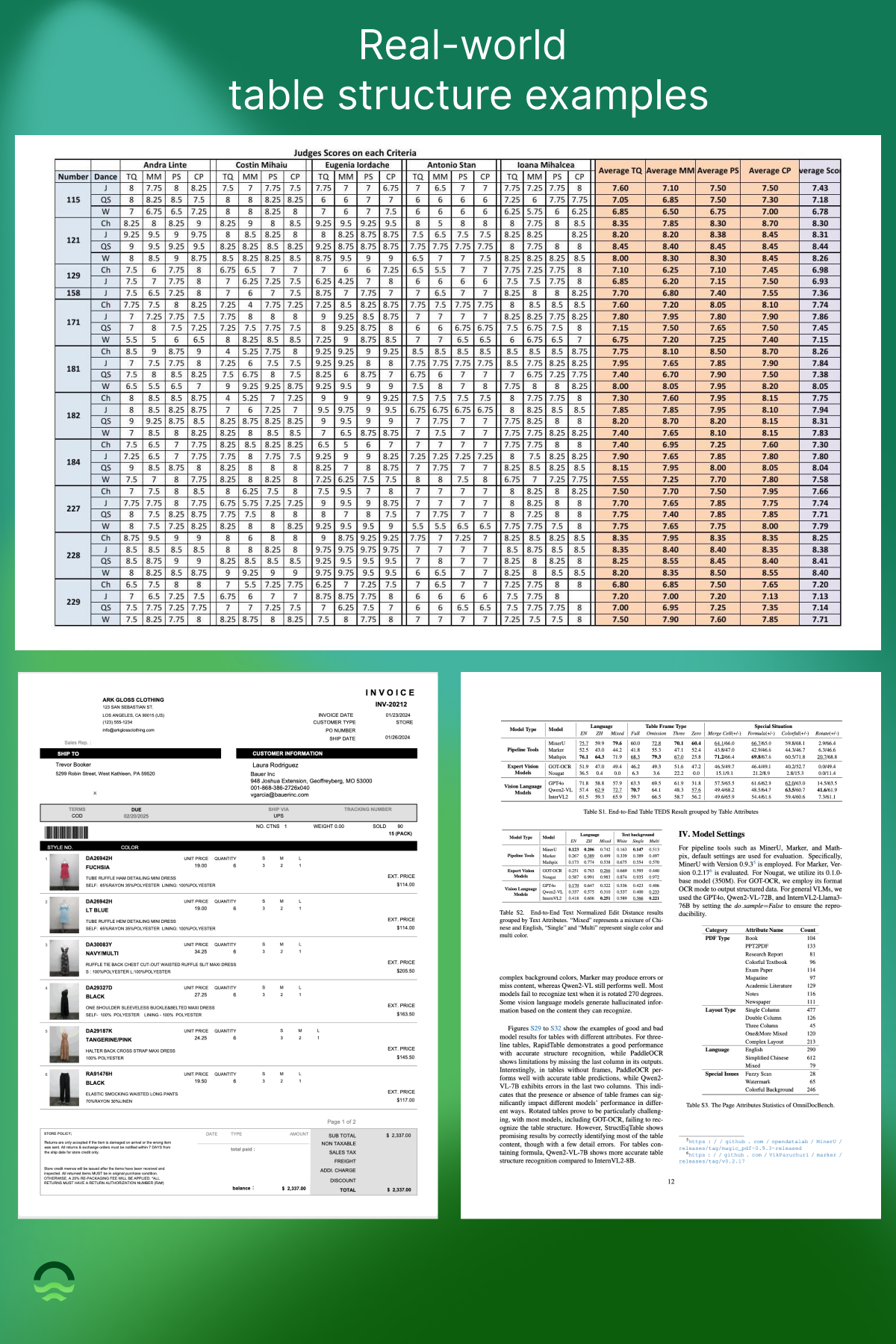

Why Dense Tables Break Traditional Parsers

Dense tables aren’t just inconvenient; they routinely break popular parsing libraries. Misaligned headers or dropped rows can mean the difference between a reliable answer and a misleading one. To understand why this happens, let’s look at the most common failure modes. These are the very challenges Tensorlake was built to solve.

- Layouts are inconsistent: Real documents rarely follow a clean grid. Headers span multiple rows, cells merge, tables appear side by side. Traditional parsers misinterpret these, causing misaligned columns and missing headers.

- Flattening destroys structure: Flattening to text loses header–cell relationships. Retrieval models then see the table as unstructured strings, which produces noisy embeddings and leaves queries unable to map to the right column.

- Multi-page tables break parsers: Long tables span pages, but many parsers work page-by-page. Rows split, headers drop, output fragments.

- Parsing is multi-step: Reliable parsing needs detection (locating tables), structure recognition (rows, headers, merges), and functional analysis (what each cell means). Skip one step, and retrieval suffers.
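To make the flattening problem concrete, here is a minimal sketch using invented toy values (the county names `Alpha`/`Beta` and the counts are made up). `flatten` mimics what a text-only extractor effectively emits; `serialize_rows` is a common mitigation, not a Tensorlake API, that labels every value with its column header so the header–cell relationship survives into the text you embed.

```python
# Toy table with invented values. The original header was two rows
# ("Cases" spanning "2019" and "2020"), already combined here.
HEADERS = ["County", "Cases 2019", "Cases 2020"]
ROWS = [
    ["Alpha", "12", "15"],
    ["Beta", "9", "7"],
]


def flatten(headers, rows):
    """Mimic a text-only extractor: values in reading order, no column labels."""
    return "\n".join(" ".join(row) for row in [headers, *rows])


def serialize_rows(headers, rows):
    """Label every value with its column so each row is self-describing."""
    return [" | ".join(f"{h}: {v}" for h, v in zip(headers, row)) for row in rows]


print(flatten(HEADERS, ROWS))
# County Cases 2019 Cases 2020
# Alpha 12 15
# Beta 9 7
for line in serialize_rows(HEADERS, ROWS):
    print(line)
# County: Alpha | Cases 2019: 12 | Cases 2020: 15
# County: Beta | Cases 2019: 9 | Cases 2020: 7
```

In the flattened text nothing ties `12` to the 2019 column; in the serialized rows every number carries its header.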

Each of these issues leads to the same result: tables lose their meaning once structure is stripped away. Without consistent alignment, multi-page stitching, and metadata, downstream retrieval becomes guesswork. Tensorlake eliminates these failure points by keeping tables intact as structured fragments, complete with headers, summaries, and page-level evidence, so accuracy and trust are preserved end to end.
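Multi-page stitching can be sketched with a simple heuristic: treat a table fragment as a continuation when it sits on the page right after the previous fragment and repeats the same header row. This is an illustrative assumption, not how Tensorlake stitches tables internally, and it misses continuation pages that omit the header. `TablePart` and `stitch` are hypothetical names, and the values are invented.

```python
from dataclasses import dataclass


@dataclass
class TablePart:
    """One table fragment on a single page (hypothetical shape, not Tensorlake's)."""
    page: int
    header: list[str]
    rows: list[list[str]]


def stitch(parts):
    """Heuristic: merge a fragment into the previous table when it is on the
    next page and repeats an identical header row."""
    tables = []
    for part in sorted(parts, key=lambda p: p.page):
        prev = tables[-1] if tables else None
        is_continuation = (
            prev is not None
            and part.header == prev["header"]
            and part.page == prev["pages"][-1] + 1
        )
        if is_continuation:
            prev["rows"].extend(part.rows)
            prev["pages"].append(part.page)
        else:
            tables.append({"header": part.header, "rows": list(part.rows), "pages": [part.page]})
    return tables


parts = [
    TablePart(1, ["County", "Cases"], [["Alpha", "12"], ["Beta", "9"]]),
    TablePart(2, ["County", "Cases"], [["Gamma", "4"]]),
    TablePart(3, ["Indicator", "Rate"], [["I-1", "0.8"]]),
]
for table in stitch(parts):
    print(table["pages"], len(table["rows"]))
# [1, 2] 3
# [3] 1
```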

How Tensorlake Preserves Table Structure

Preserving structure is the foundation

The hard part isn’t extracting text; it’s preserving structure. Rows, headers, and alignment give table data its meaning. Traditional parsers (like pdfplumber, Camelot, Tabula, and PyMuPDF) often flatten tables into plain text, breaking these relationships and making queries unreliable. Tensorlake avoids this by returning tables as structured HTML, where multi-row headers, merged cells, and column mappings remain intact.
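Why does HTML output help? Because `rowspan` and `colspan` attributes record merged cells explicitly, so the original grid can be rebuilt. The sketch below uses only Python's standard-library `html.parser` to expand spans into a rectangular grid and then join stacked header rows into one label per column. It assumes a simple, well-formed table (no nested tables) and uses invented values; `TableGridParser`, `expand_spans`, and `header_labels` are hypothetical helpers. In practice, `pandas.read_html` performs similar span expansion for you.

```python
from html.parser import HTMLParser


class TableGridParser(HTMLParser):
    """Collect each <tr> as a list of (text, rowspan, colspan) cells."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._cell = None  # [text_parts, rowspan, colspan] while inside <td>/<th>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "tr":
            self.rows.append([])
        elif tag in ("td", "th"):
            rowspan = int(attrs.get("rowspan") or 1)
            colspan = int(attrs.get("colspan") or 1)
            self._cell = [[], rowspan, colspan]

    def handle_data(self, data):
        if self._cell is not None:
            self._cell[0].append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            parts, rowspan, colspan = self._cell
            self.rows[-1].append(("".join(parts).strip(), rowspan, colspan))
            self._cell = None


def expand_spans(rows):
    """Place every cell on a rectangular grid, copying merged cells into each slot they cover."""
    grid = {}
    for r, row in enumerate(rows):
        c = 0
        for text, rowspan, colspan in row:
            while (r, c) in grid:  # slot already taken by a rowspan from above
                c += 1
            for dr in range(rowspan):
                for dc in range(colspan):
                    grid[(r + dr, c + dc)] = text
            c += colspan
    n_rows = max(r for r, _ in grid) + 1
    n_cols = max(c for _, c in grid) + 1
    return [[grid.get((r, c), "") for c in range(n_cols)] for r in range(n_rows)]


def header_labels(grid, n_header_rows):
    """Join stacked header cells into one label per column, dropping repeats."""
    labels = []
    for column in zip(*grid[:n_header_rows]):
        parts = [p for p in dict.fromkeys(column) if p]
        labels.append(" ".join(parts))
    return labels


# Invented example: "County" spans two header rows, "Cases" spans two columns.
HTML = """
<table>
  <tr><th rowspan="2">County</th><th colspan="2">Cases</th></tr>
  <tr><th>2019</th><th>2020</th></tr>
  <tr><td>Alpha</td><td>12</td><td>15</td></tr>
</table>
"""

parser = TableGridParser()
parser.feed(HTML)
parser.close()
grid = expand_spans(parser.rows)
print(header_labels(grid, 2))  # ['County', 'Cases 2019', 'Cases 2020']
print(grid[2])                 # ['Alpha', '12', '15']
```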

Summaries make tables retrievable

Dense tables are dominated by numbers, and raw numbers carry little meaning for embedding models. For example, if you embed only the raw values extracted from a table, a number arrives without the context that says whether it represents revenue, population, or weight. To make tables discoverable, Tensorlake generates concise table summaries (e.g., captions, key metrics, significance) while keeping the full table available for reference. Retrieval happens via the summary, and the actual answer comes from the structured table.
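The summary-then-table split can be illustrated with a toy, dependency-free sketch. Word overlap stands in for embedding similarity here (a deliberate simplification, not Tensorlake's retrieval), and the index entries, summaries, and values are all invented. The key point is the division of labor: `retrieve` only looks at summaries, while `lookup` answers from the full rows.

```python
import re


def tokens(text):
    """Lower-cased word set; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, index):
    """Pick the entry whose summary shares the most words with the query."""
    q = tokens(query)
    return max(index, key=lambda entry: len(q & tokens(entry["summary"])))


def lookup(entry, column, value):
    """Answer from the full table rows, not from the summary."""
    return [row for row in entry["rows"] if row.get(column) == value]


# Hypothetical index; summaries and values are invented for illustration.
index = [
    {
        "summary": "Patient safety indicator rates by county and year",
        "rows": [
            {"County": "Alpha", "Year": 2019, "Rate": 1.2},
            {"County": "Beta", "Year": 2019, "Rate": 0.8},
        ],
    },
    {
        "summary": "Quarterly revenue and operating margin by business segment",
        "rows": [{"Segment": "Cloud", "Quarter": "Q1", "Revenue": 410}],
    },
]

best = retrieve("What was the safety indicator rate for Beta?", index)
print(best["summary"])                 # Patient safety indicator rates by county and year
print(lookup(best, "County", "Beta"))  # [{'County': 'Beta', 'Year': 2019, 'Rate': 0.8}]
```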

Tensorlake applies these principles directly:

- First-class fragments: tables are parsed as one unit, not scattered text.

- Structured output: HTML or Markdown preserves headers, captions, merges.

- Source traceability: page metadata ties results back to the document.

- Automatic summaries: retrieval is efficient while full data remains available.

- Pandas-ready: direct DataFrame integration for numeric operations and filtering.
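One way to carry these properties through a pipeline is a small record per table that keeps the summary (for search), the HTML (for computing answers), and the location (for citations) together. The `TableRecord` class, its field names, and the `(x1, y1, x2, y2)` bounding-box shape are assumptions for illustration, not Tensorlake's response schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TableRecord:
    """Hypothetical per-table record; field names are illustrative only."""
    source: str
    page_number: int
    table_index: int
    bbox: tuple   # assumed (x1, y1, x2, y2)
    summary: str  # what gets embedded and searched
    html: str     # what answers are computed from

    def citation(self) -> str:
        """Human-readable pointer back to where the table came from."""
        x1, y1, x2, y2 = self.bbox
        return (
            f"{self.source}, page {self.page_number}, table {self.table_index} "
            f"[{x1:.0f}, {y1:.0f}, {x2:.0f}, {y2:.0f}]"
        )


record = TableRecord(
    source="document.pdf",
    page_number=3,
    table_index=0,
    bbox=(36.0, 120.4, 576.0, 710.2),
    summary="Patient safety indicator rates by county and year",
    html="<table>...</table>",
)
print(record.citation())  # document.pdf, page 3, table 0 [36, 120, 576, 710]
```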

By combining structure preservation with summaries and reliable metadata, Tensorlake eliminates the fragility of traditional parsing and makes dense tables directly usable and retrievable for question answering.

Hands-on: Parsing Dense Tables in Practice

Let’s walk through a minimal example that shows how to parse a document, preserve table structure, and run precise lookups with Pandas.

Step 1. Install and Set Up Tensorlake

```shell
pip install tensorlake pandas lxml beautifulsoup4
export TENSORLAKE_API_KEY="your_tensorlake_api_key"
```

Step 2. Parse a Document with Table-Aware Options

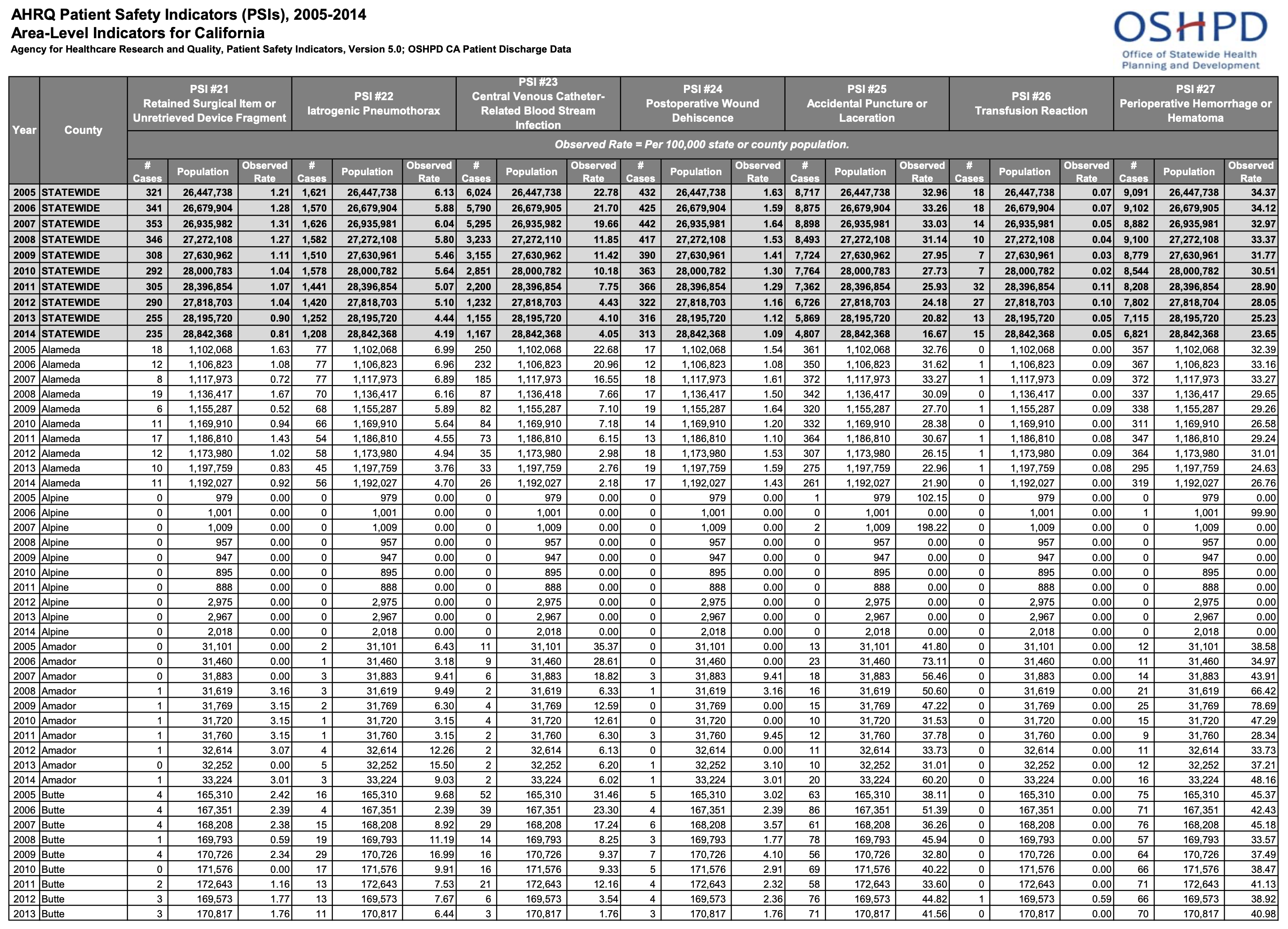

We’ll start with the AHRQ Patient Safety Indicators table from OSHPD (Office of Statewide Health Planning and Development) and see how Tensorlake preserves structure during extraction. This is just one page from a 12-page table (found here).

```python
from tensorlake.documentai import (
    DocumentAI, ParsingOptions, ChunkingStrategy,
    TableOutputMode, ParseStatus, PageFragmentType
)

doc_ai = DocumentAI()

# Upload a local file (PDF, DOCX, XLSX, HTML, PPT)
file_id = doc_ai.upload("path/to/document.pdf")

options = ParsingOptions(
    chunking_strategy=ChunkingStrategy.FRAGMENT,  # keep tables intact
    table_output_mode=TableOutputMode.HTML        # preserves structure for Pandas
)

result = doc_ai.parse_and_wait(file_id, parsing_options=options)
```

Here we tell Tensorlake to return fragments (so tables aren’t split) and keep them in HTML, which retains headers and alignment.

```python
from io import StringIO

import pandas as pd
from bs4 import BeautifulSoup

dfs = []
# Scan every chunk, not just the first, so no table is missed
for chunk in result.chunks:
    soup = BeautifulSoup(chunk.content, "lxml")
    for t in soup.find_all("table"):
        # pandas.read_html parses a single table string into a one-element list
        df = pd.read_html(StringIO(str(t)), flavor="lxml")[0].fillna("")
        dfs.append(df)
        print(df)  # in a notebook, display(df) renders it as a table
```

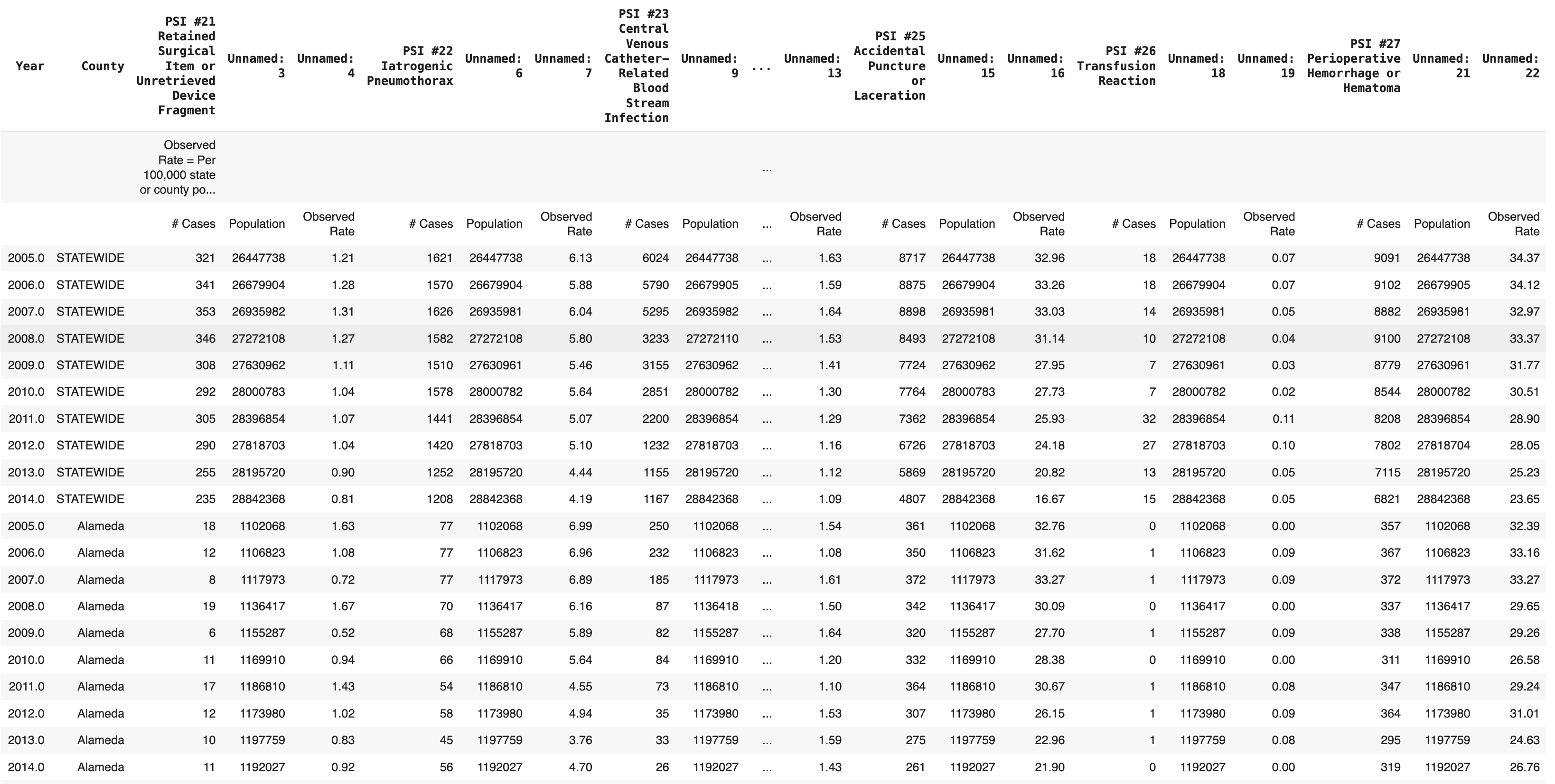

At this point, every table in the document is a DataFrame with rows, columns, and headers preserved. Now, we can query on top of this table using pandas to extract important details or perform some operations on top of it.
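One practical wrinkle: when a table has multi-row headers, `pd.read_html` returns `MultiIndex` columns whose labels are tuples such as `('Cases', '2019')`, which makes lookups by a plain column name awkward. A minimal sketch of one way to flatten them follows; `flatten_header` is a hypothetical helper, and it assumes empty header cells show up as pandas' auto-generated `Unnamed: ...` placeholders.

```python
def flatten_header(col):
    """Join a (possibly tuple) column label into one readable string."""
    if not isinstance(col, tuple):
        return str(col)
    parts = []
    for level in col:
        text = str(level).strip()
        # Skip pandas' auto-generated names for empty header cells
        # and repeats produced by merged (spanning) header cells.
        if text and not text.startswith("Unnamed:") and text not in parts:
            parts.append(text)
    return " ".join(parts)


print(flatten_header(("County", "County")))             # County
print(flatten_header(("Cases", "2019")))                # Cases 2019
print(flatten_header(("Notes", "Unnamed: 3_level_1")))  # Notes
print(flatten_header("Rate"))                           # Rate
```

Applied to a parsed table, this would be `df.columns = [flatten_header(c) for c in df.columns]`, after which column-name filters work on single strings.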

Step 3. Extract Tables with Page Numbers and Bounding Boxes

Bounding boxes give you the exact coordinates of a table on the page. This means you can:

- Show evidence: point back to the original page and highlight the table region when returning an answer.

- Render previews: crop just the table area in a viewer, instead of sending someone the whole PDF.

This makes tables citable: you can point back to the original table region, highlight it in a viewer, and build trust through evidence.
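Turning a bounding box into a highlight or crop means mapping it into the pixel space of a rendered page image. A minimal sketch, assuming the box is `(x1, y1, x2, y2)` in PDF points (1/72 inch) with a top-left origin; check the coordinate convention Tensorlake actually returns before relying on this. `bbox_to_pixels` is a hypothetical helper.

```python
import math


def bbox_to_pixels(bbox, dpi=144, pad=4):
    """Convert a bbox in PDF points (assumed top-left origin) to an integer
    pixel box for a page rendered at `dpi`, padded slightly for context."""
    scale = dpi / 72.0
    x1, y1, x2, y2 = bbox
    return (
        max(0, math.floor(x1 * scale) - pad),
        max(0, math.floor(y1 * scale) - pad),
        math.ceil(x2 * scale) + pad,
        math.ceil(y2 * scale) + pad,
    )


print(bbox_to_pixels((72, 144, 288, 360)))  # (140, 284, 580, 724)
```

The result is a `(left, upper, right, lower)` tuple, the order Pillow's `Image.crop` expects; clamp the right and bottom edges to the image size if the padding might overflow the page.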

```python
from io import StringIO

import pandas as pd
from tensorlake.documentai import PageFragmentType


def extract_tables_with_locations(result):
    """
    Return a list of dicts, one per table:
    {'page_number': int, 'bbox': <bounding box>, 'df': DataFrame}
    """
    out = []
    for page in result.pages or []:
        for fragment in page.page_fragments or []:
            if fragment.fragment_type != PageFragmentType.TABLE:
                continue
            # Read the table HTML from fragment.content.html; skip if absent
            html = getattr(getattr(fragment, "content", None), "html", None)
            if not html:
                continue
            df = pd.read_html(StringIO(str(html)), flavor="lxml")[0].fillna("")
            # Accept either attribute name for the bounding box
            bbox = getattr(fragment, "bbox", None) or getattr(fragment, "bounding_box", None)
            out.append({"page_number": page.page_number, "bbox": bbox, "df": df})
    return out


tables_with_loc = extract_tables_with_locations(result)
for item in tables_with_loc:
    pn, bb, df = item["page_number"], item["bbox"], item["df"]
    print(f"Table on page {pn} at {bb}:")
    print(df.head())
```

Step 4. Run Accurate Lookups

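```python
import pandas as pd


def find_rows(df, **equals):
    """Return rows whose columns exactly match the given values (compared as strings)."""
    view = df.copy()
    for col, val in equals.items():
        # Fail loudly instead of silently ignoring a filter on a missing column
        if col not in view.columns:
            raise KeyError(f"Column {col!r} not found; available: {list(view.columns)}")
        view = view[view[col].astype(str) == str(val)]
    return view


def top_n_by(df, column, n=5, ascending=False):
    """Rank rows by a column parsed as numbers; non-numeric cells become NaN and sort last."""
    s = pd.to_numeric(df[column], errors="coerce")
    ranked = df.assign(_key=s).sort_values("_key", ascending=ascending)
    return ranked.drop(columns=["_key"]).head(n)


# Example usage: take the first table extracted in Step 3.
# Column names are illustrative; use the headers of your own table.
df = tables_with_loc[0]["df"]
# Find all rows for Los Angeles County
print(find_rows(df, **{"County": "Los Angeles"}))
# Top-3 rows by number of cases
print(top_n_by(df, "Cases", n=3, ascending=False))
```

Instead of fuzzy matches, we can filter by column values and sort by numeric fields, producing results that are both accurate and explainable.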
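One caveat with `top_n_by`: `pd.to_numeric(..., errors="coerce")` turns cells like `"1,234"`, `"12.5%"`, or `"(3.2)"` into `NaN`, so those rows silently drop to the bottom of the ranking. A minimal cleaning sketch, assuming commas are thousands separators, percentages stay on the 0 to 100 scale, parentheses mean negatives (accounting style), and trailing footnote markers like `*` or `†` can be dropped; adjust these rules to your documents. `parse_number` is a hypothetical helper.

```python
import math
import re

# Assumed footnote markers that may trail a numeric cell
_FOOTNOTE = re.compile(r"[*†‡§]+$")


def parse_number(value):
    """Parse a table cell into a float, or return NaN if it isn't numeric."""
    text = _FOOTNOTE.sub("", str(value).strip()).strip()
    negative = text.startswith("(") and text.endswith(")")
    if negative:
        text = text[1:-1]
    # Drop thousands separators and a trailing percent sign
    text = text.replace(",", "").rstrip("%").strip()
    try:
        number = float(text)
    except ValueError:
        return math.nan
    return -number if negative else number


print(parse_number("1,234"))  # 1234.0
print(parse_number("12.5%"))  # 12.5
print(parse_number("(3.2)"))  # -3.2
print(parse_number("45*"))    # 45.0
```

Cleaned values can then be ranked as before, e.g. `top_n_by(df.assign(Cases=df["Cases"].map(parse_number)), "Cases", n=3)`.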

Advanced Retrieval Patterns for Tables

Working with dense tables often requires more than parsing. A few retrieval patterns make question answering over tables both more accurate and more explainable:

- Summary-first retrieval: Instead of embedding the raw table or searching through captions and nearby text, embed a concise summary of the table. Store the full table as metadata. At query time, retrieve the summary and then load the underlying table to compute the exact answer.

- Keep tables intact: Avoid splitting a table unless you must. For very large tables, split by rows and repeat the header in every chunk so queries still map to the right columns.

- Retrieval plus compute: Find the right table with Tensorlake, then apply typed filters and aggregations in Pandas or SQL for accurate results.

- Metadata-aware retrieval: Always include page number, table index, caption, and bounding box so answers can be traced back to the source.
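The "keep tables intact" pattern can be sketched as row-wise chunking that repeats the header in every chunk, so each chunk still says which column a value belongs to. This assumes the table is already a header plus rows of strings and renders chunks as Markdown tables; `chunk_table`, `to_markdown`, and the `max_rows` budget are hypothetical choices, not Tensorlake parameters, and the rows are invented.

```python
def to_markdown(header, rows):
    """Render a header and rows as a pipe-delimited Markdown table."""
    lines = [
        "| " + " | ".join(header) + " |",
        "| " + " | ".join("---" for _ in header) + " |",
    ]
    lines += ["| " + " | ".join(row) + " |" for row in rows]
    return "\n".join(lines)


def chunk_table(header, rows, max_rows=50):
    """Split a large table into row chunks, repeating the header in each."""
    return [
        to_markdown(header, rows[start:start + max_rows])
        for start in range(0, len(rows), max_rows)
    ]


header = ["County", "Year", "Cases"]
rows = [[f"C{i}", "2019", str(i)] for i in range(5)]  # invented rows
chunks = chunk_table(header, rows, max_rows=2)
print(len(chunks))  # 3
print(chunks[-1])
# | County | Year | Cases |
# | --- | --- | --- |
# | C4 | 2019 | 4 |
```

In a real pipeline, each chunk would also carry the table's page number, table index, and bounding box as metadata.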

These patterns make retrieval not just accurate but explainable, which is critical when tables drive financial, healthcare, or benchmark decisions.

Reliable Retrieval Starts with Structure

Dense tables are among the hardest document structures to parse. Tensorlake preserves them as structured fragments that load into reliable DataFrames you can query directly.

With just a few lines of code, you can parse documents, extract structured tables, generate summaries, and run accurate lookups backed by page-level evidence. The result: precision with full traceability.

If you work with financial reports, clinical trials, or benchmarks where tables carry the core information, try this workflow in our Playground or in this Colab notebook. Tensorlake delivers accurate, explainable results and makes dense table retrieval practical.

Have a table-heavy use case? We'd love to hear about it. Join our Slack and we'll help you get started.
