Precise Data Extraction: Pattern-Based Partitioning for Structured Extraction

TL;DR

Tensorlake's pattern-based partitioning lets you define patterns to extract data from specific document sections instead of parsing entire documents. This eliminates layout dependencies and reduces noise, delivering consistent extraction results even when document templates change.

Your document extraction pipeline is brittle. Hard-coded page ranges break when layouts shift, full-document parsing burns through tokens on irrelevant content, and template based extraction fails when target data moves between document versions.

Tensorlake's pattern-based extraction solves this within your StructuredExtractionOption workflows. Define start and end patterns to extract only the data sections you need.

No more parsing noise, no more layout dependencies.

The Problem with Traditional Extraction

Document layout variability breaks positional extraction logic. A financial report might place the "Total Assets" section starting on page 3 and going through page 4 in one document and all within page 7 in another. Parsing entire documents wastes compute cycles and introduces noise into structured extraction workflows. Fixed page or section boundaries miss target data that spans inconsistent locations across document sets.

Pattern-based partitioning solves this by decoupling extraction logic from document layout through regex-driven zone targeting.

Pattern-Based Partitioning: Content-Aware Extraction

Pattern-based partitioning delivers precision targeting through regex pattern recognition within your StructuredExtractionOption:

- Precise: Use regex patterns like

\\bAccount\\s+Summary\\bto identify exactly where your target data begins or ends, skipping irrelevant content that clutters extraction results. - Flexible: Define start patterns to begin extraction at specific markers, end patterns to stop at precise terminators, or both to capture data between known markers. Extract only the contract section you need, not the entire 200-page document.

- Multi-Page: Extract data that spans pages by focusing on content patterns rather than arbitrary page boundaries or specific section headers. Perfect for financial summaries, property listings, or contract clauses that don't respect document structure.

- API-Native: Integrate into existing workflows with simple JSON configuration in your parse endpoint calls, no architectural changes required.

Implementation: Four Steps to Pattern-Based Partitioning for Extraction

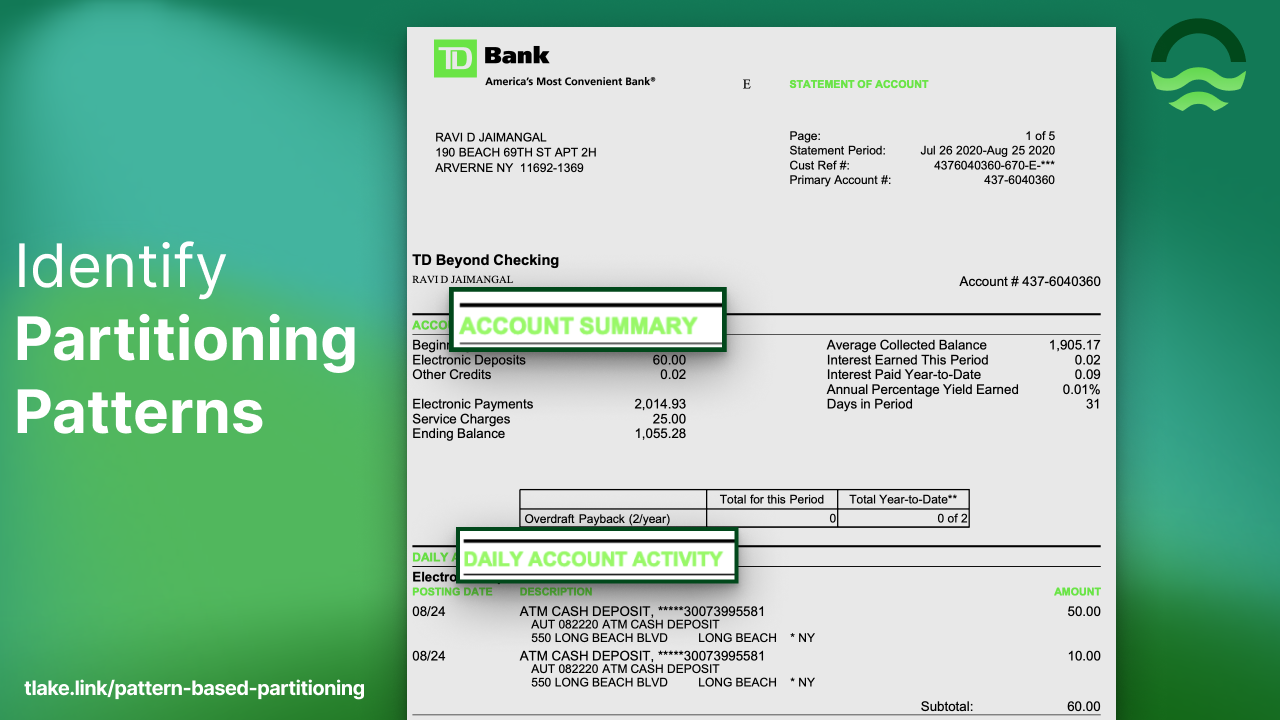

- Identify Your Patterns Analyze your documents to find consistent text markers around target data. Look for headers like "Account Summary", "Grand Total:", or "Section 4.2" that reliably indicate extraction zones.

- Configure Extraction Add pattern configuration to your

StructuredExtractionOption:

[.code-block-title]Code[.code-block-title]{

"partition_strategy": {

"patterns": {

"start_patterns": ["\\bACCOUNT\\s+SUMMARY\\b"],

"end_patterns": ["\\bDAILY\\s+ACCOUNT\\s+ACTIVITY\\b"]

}

}

}- Test & Refine Process sample documents and adjust patterns to capture exactly the data sections you need. Start broad, then narrow your regex patterns for precision.

- Scale Deployment Apply consistent extraction rules across thousands of similar documents with confidence. Pattern-based targeting scales linearly, define once, extract consistently.

Results: Deterministic Extraction with Content-Aware Targeting

Pattern-based partitioning delivers deterministic extraction performance across variable document layouts. Your extraction logic becomes resilient to structural changes. Financial summaries can migrate from page 3 to page 7, contract clauses can span different sections, and your pipeline continues extracting the correct data zones.

The architectural benefit: your extraction logic becomes layout-agnostic. Instead of brittle positional dependencies, you define semantic boundaries that scale across document variations. The result: consistent structured outputs from inconsistent document inputs, with extraction accuracy that doesn't degrade as document templates evolve.

Ready to stop guessing and start targeting your extractions? Try Tensorlake's pattern-based partitioning today:

-> Documentation: Learn more about pattern-based partitioning

-> Colab Notebook: Try the notebook

-> Schedule a call and we’ll help you get going: Book a call now

Related articles

Get server-less runtime for agents and data ingestion

Tensorlake is the Agentic Compute Runtime the durable serverless platform that runs Agents at scale.